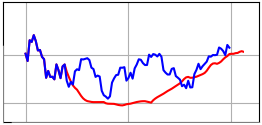

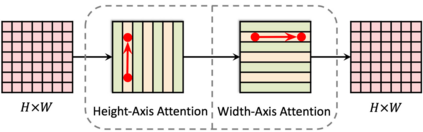

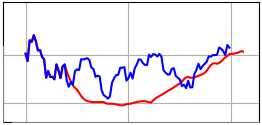

Recurrent Neural Networks (RNNs) were, until recently, one of the best ways to capture temporal dependencies in sequences. However, the introduction of the Transformer showed that an architecture built only on attention mechanisms, without any RNN, can improve results on various sequence processing tasks (e.g. NLP). Multiple studies since then have shown that similar approaches can be applied to images, point clouds, video, audio and time series forecasting. Furthermore, solutions such as the Perceiver and the Informer have been introduced to extend the applicability of the Transformer. Our main objective is to test and evaluate the effectiveness of applying Transformer-like models to time series data. We address susceptibility to anomalies, context awareness and space complexity by fine-tuning the hyperparameters, preprocessing the data, and applying dimensionality reduction or convolutional encodings, among other techniques. We also look at the problem of next-frame prediction and explore ways to modify existing solutions in order to achieve higher performance and learn generalized knowledge.
