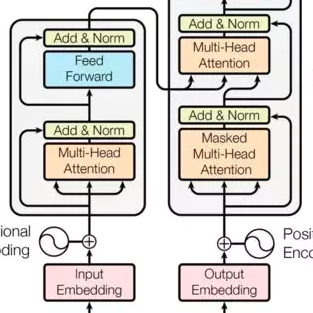

The Transformer is a translation architecture based entirely on attention, proposed in Google's paper "Attention Is All You Need".
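To make "based entirely on attention" concrete, here is a minimal sketch of the scaled dot-product attention operation defined in the paper, softmax(QK^T / sqrt(d_k)) V, in plain Python. The function names `softmax` and `scaled_dot_product_attention` are illustrative choices for this sketch, not taken from the repositories listed on this page, and the code leaves out multi-head projections, masking, and batching.

```python
import math


def softmax(row):
    # Subtract the max before exponentiating for numerical stability.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of row vectors."""
    d_k = len(keys[0])
    output = []
    for q in queries:
        # Dot product of this query with every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output row is the attention-weighted sum of the value vectors.
        output.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return output
```

A query that is equally similar to every key receives uniform weights, so its output is simply the mean of the value vectors; a query that matches one key much more strongly than the others returns a vector close to that key's value.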

Curated Resources

Papers

Original paper:

- "Attention Is All You Need": https://papers.nips.cc/paper/7181-attention-is-all-you-need.pdf

Related papers

- "Reformer: The Efficient Transformer": https://arxiv.org/abs/2001.04451

Open-source code

- Kyubyong/transformer (TF)

- huggingface/transformers (PyTorch)