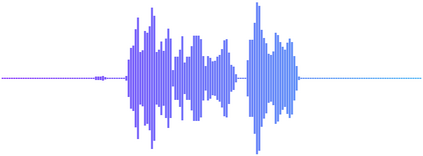

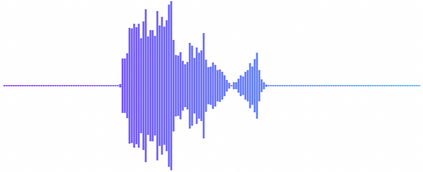

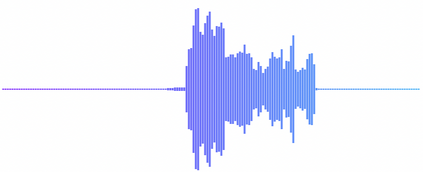

With the rise of deep learning and intelligent vehicles, the smart assistant has become an essential in-car component that facilitates driving and provides extra functionality. In-car smart assistants should be able to process both general and car-related commands and perform the corresponding actions, which eases driving and improves safety. However, most datasets in this research field are in major languages such as English and Chinese. Low-resource languages suffer from severe data scarcity, which hinders the development of research and applications for broader communities. It is therefore crucial to have more benchmarks to raise awareness and motivate research on low-resource languages. To mitigate this problem, we collect a new dataset, Cantonese In-car Audio-Visual Speech Recognition (CI-AVSR), for in-car speech recognition in Cantonese with both video and audio data. Alongside the dataset, we propose Cantonese Audio-Visual Speech Recognition for In-car Commands as a new challenge for the community to tackle low-resource speech recognition in in-car scenarios.
