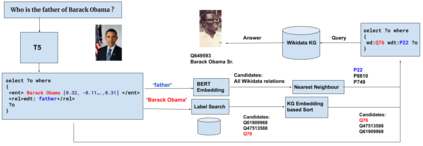

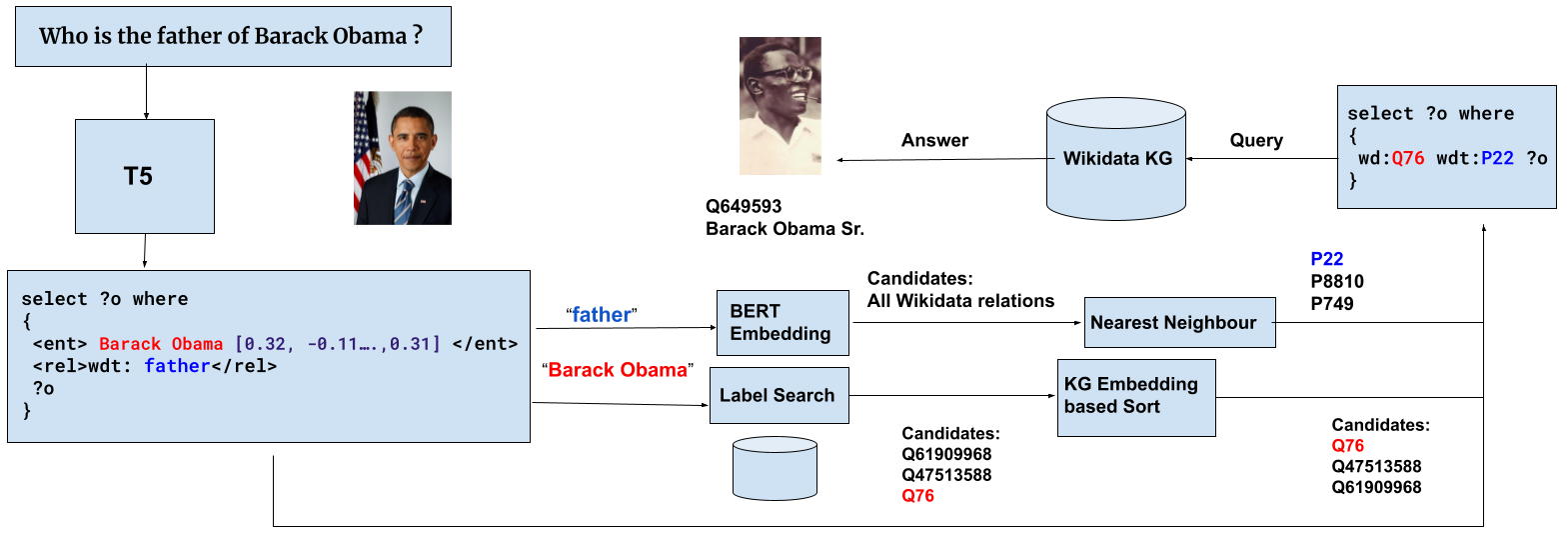

In this work, we present GETT-QA, an end-to-end Knowledge Graph Question Answering (KGQA) system. GETT-QA uses T5, a popular text-to-text pre-trained language model. The model takes a natural-language question as input and produces a simplified form of the intended SPARQL query. In this simplified form, the model does not directly produce entity and relation IDs; instead, it produces the corresponding entity and relation labels, which are grounded to knowledge graph (KG) entity and relation IDs in a subsequent step. To further improve the results, we instruct the model to produce a truncated version of the KG embedding for each entity. The truncated KG embedding enables a finer-grained search among candidate entities for disambiguation. We find that T5 is able to learn the truncated KG embeddings without any change to the loss function, and that this improves KGQA performance. We report strong results on the LC-QuAD 2.0 and SimpleQuestions-Wikidata datasets for end-to-end KGQA over Wikidata.
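The grounding step described above can be illustrated with a minimal sketch. It assumes that a label lookup returns several candidate entities sharing the same label, and that the candidate whose stored KG embedding (truncated to the same length) lies closest to the model's predicted truncated embedding is selected. The candidate IDs, the embedding values, the truncation length, and the use of Euclidean distance are all hypothetical choices made for illustration; the abstract does not specify them.

```python
from math import sqrt

# Hypothetical candidates that a label lookup might return for "Paris".
# The IDs and embedding values are invented for this sketch.
CANDIDATES = {
    "Paris": [
        ("Q_city", [0.12, -0.40, 0.33, 0.90, -0.15]),
        ("Q_person", [-0.70, 0.22, 0.05, -0.31, 0.48]),
    ],
}


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors (assumed metric)."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def ground_entity(label, predicted_prefix, candidates=CANDIDATES):
    """Pick the candidate ID whose truncated embedding is nearest the prediction.

    `predicted_prefix` stands in for the truncated KG embedding that the
    text-to-text model would emit alongside the entity label.
    """
    k = len(predicted_prefix)  # truncation length implied by the prediction
    scored = [
        (euclidean(predicted_prefix, embedding[:k]), entity_id)
        for entity_id, embedding in candidates.get(label, [])
    ]
    if not scored:
        return None  # label not found in the lookup
    return min(scored)[1]


if __name__ == "__main__":
    print(ground_entity("Paris", [0.10, -0.40, 0.30]))
    print(ground_entity("Paris", [-0.70, 0.20, 0.00]))
```

In this sketch the label alone is ambiguous, and the short embedding prefix is what separates the two candidates. A real system would query a label index and a KG embedding store instead of an in-memory dictionary.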
