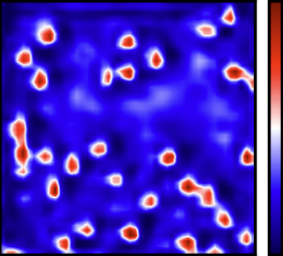

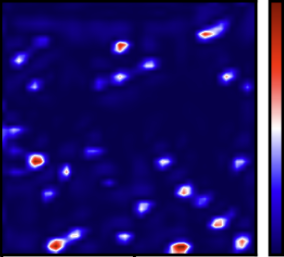

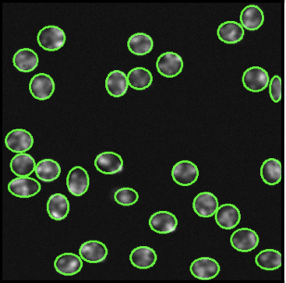

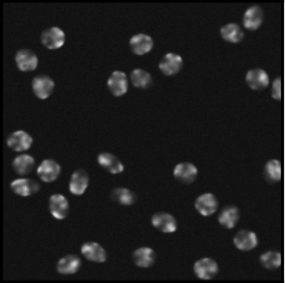

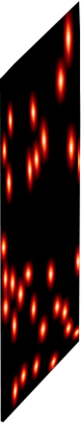

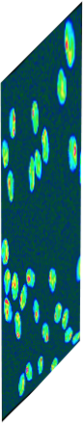

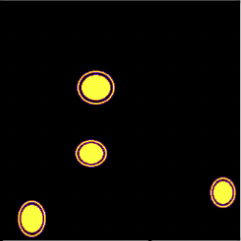

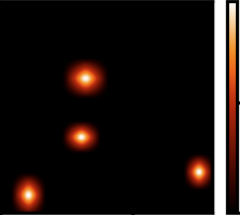

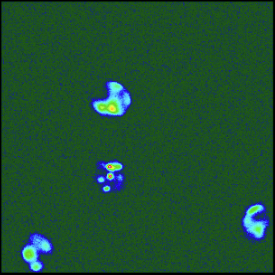

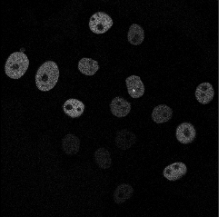

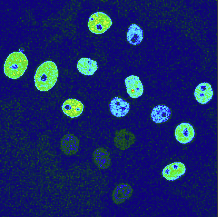

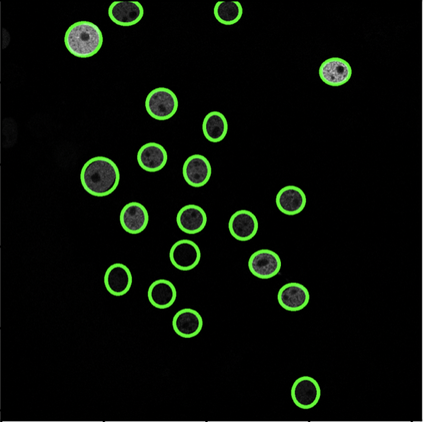

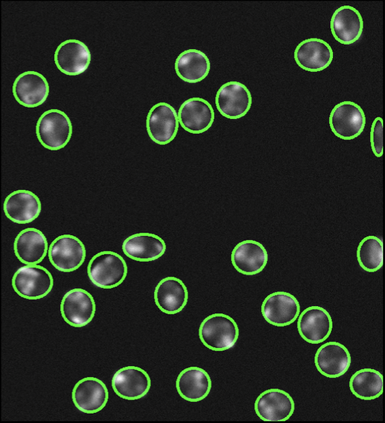

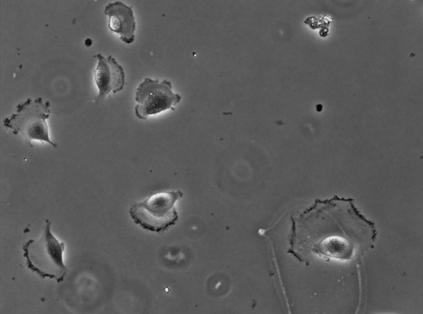

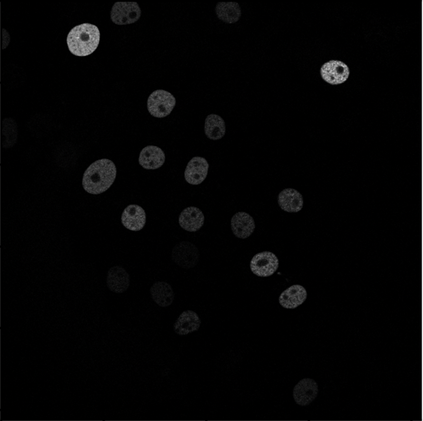

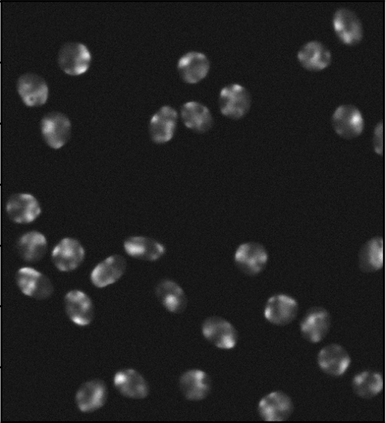

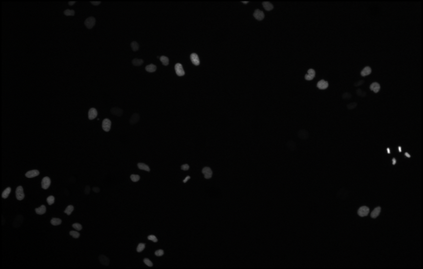

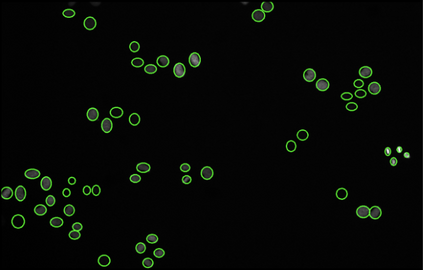

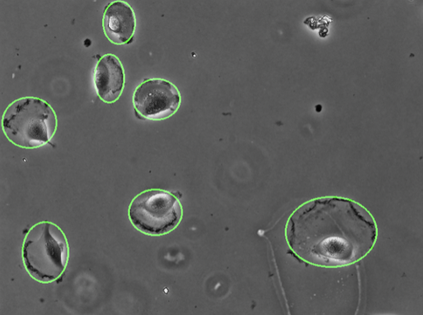

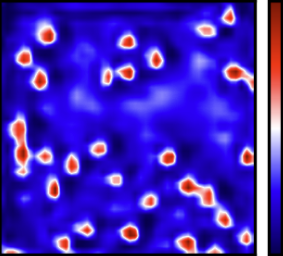

Cell detection in microscopy images is important for studying how cells move and interact with their environment. Most recent deep learning-based methods for cell detection use convolutional neural networks (CNNs). However, inspired by their success in other computer vision applications, vision transformers (ViTs) are also being used for this purpose. We propose a novel hybrid CNN-ViT model for cell detection in microscopy images to exploit the advantages of both types of deep learning models. We employ an efficient CNN that was pre-trained on the ImageNet dataset to extract image features, and we utilize transfer learning to reduce the amount of required training data. The extracted image features are further processed by a combination of convolutional and transformer layers, so that the convolutional layers can focus on local information and the transformer layers on global information. Our centroid-based cell detection method represents cells as ellipses and is end-to-end trainable. Furthermore, we show that our proposed model can outperform fully convolutional one-stage detectors on four different 2D microscopy datasets. Code is available at: https://github.com/roydenwa/cell-centroid-former
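To make the centroid-based ellipse representation more concrete, the following is a minimal sketch of how a predicted centroid heatmap could be decoded into ellipses. The abstract does not specify the decoding step, so everything here is an assumption: the function name `decode_ellipses`, the 3x3 local-maximum rule, the `threshold` parameter, and the layout of the `axes` map (one pair of axis lengths per pixel) are hypothetical and are not taken from the authors' code.

```python
import numpy as np


def decode_ellipses(heatmap, axes, threshold=0.5):
    """Return (row, col, axis_y, axis_x, score) for each local heatmap peak.

    Hypothetical decoding step: a pixel counts as a cell centroid if it is
    the maximum of its 3x3 neighborhood and its score reaches `threshold`.
    """
    heatmap = np.asarray(heatmap, dtype=float)
    h, w = heatmap.shape
    # Pad with -inf so border pixels also get a full 3x3 neighborhood.
    padded = np.pad(heatmap, 1, mode="constant", constant_values=-np.inf)
    # Stack the nine shifted views and take the per-pixel neighborhood maximum.
    neighborhood_max = np.max(
        [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)],
        axis=0,
    )
    is_peak = (heatmap == neighborhood_max) & (heatmap >= threshold)
    rows, cols = np.nonzero(is_peak)
    # Read the assumed ellipse axis lengths at each detected centroid.
    return [
        (int(r), int(c), float(axes[r, c, 0]), float(axes[r, c, 1]), float(heatmap[r, c]))
        for r, c in zip(rows, cols)
    ]


# Toy input: two cells plus one weaker response next to the first centroid.
heatmap = np.zeros((8, 8))
heatmap[2, 3] = 0.9
heatmap[2, 4] = 0.4  # below the threshold and not a local maximum
heatmap[6, 5] = 0.7
axes = np.zeros((8, 8, 2))
axes[2, 3] = (4.0, 2.5)
axes[6, 5] = (3.0, 3.0)

ellipses = decode_ellipses(heatmap, axes)
for row, col, axis_y, axis_x, score in ellipses:
    print(f"centroid=({row}, {col}) axes=({axis_y}, {axis_x}) score={score}")
```

In this sketch each detection is a centroid plus two axis lengths, which is one simple way to describe an axis-aligned ellipse. A real model may also predict an orientation or use a different peak-selection rule.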
