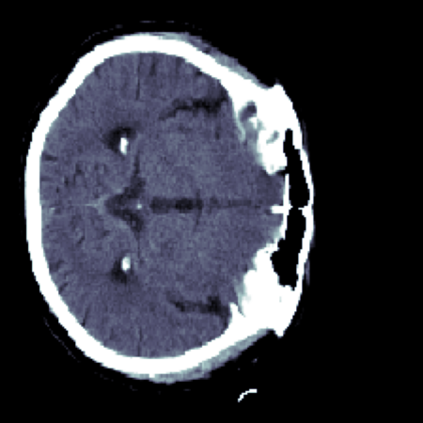

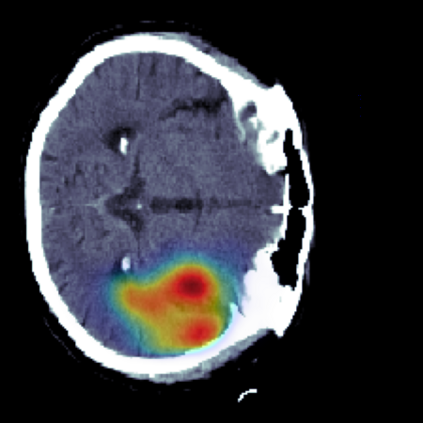

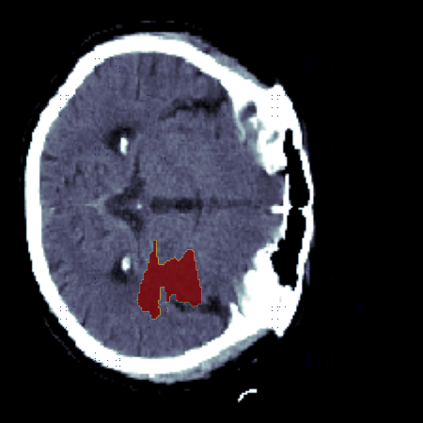

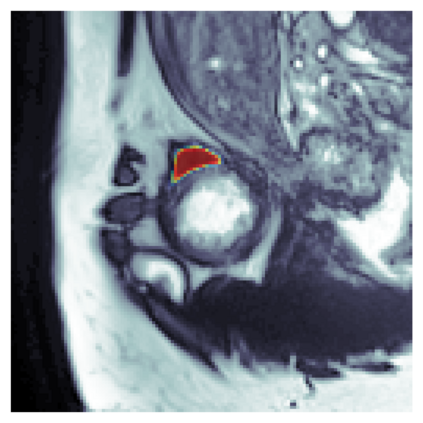

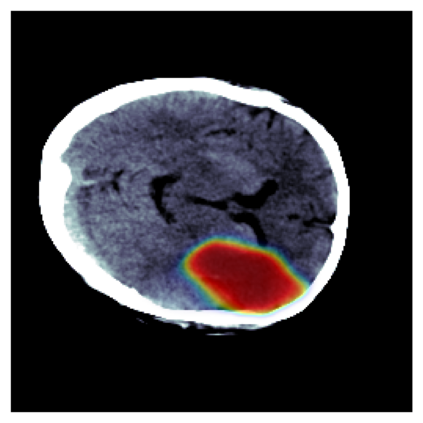

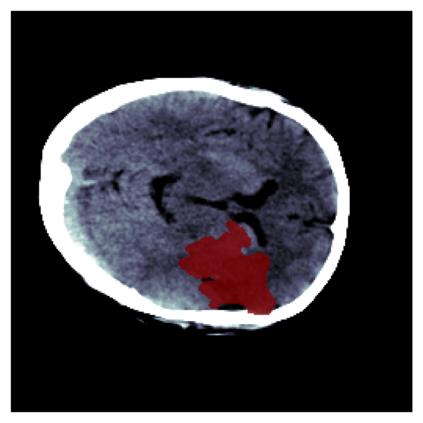

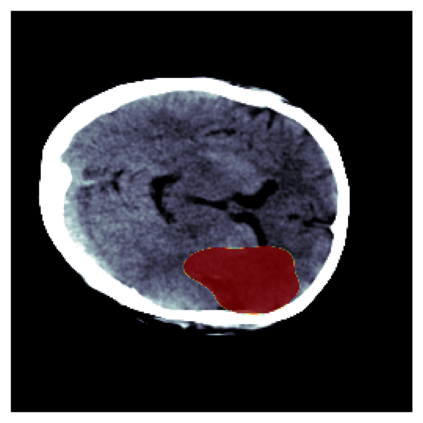

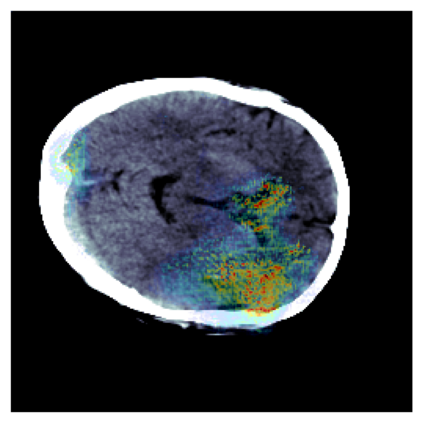

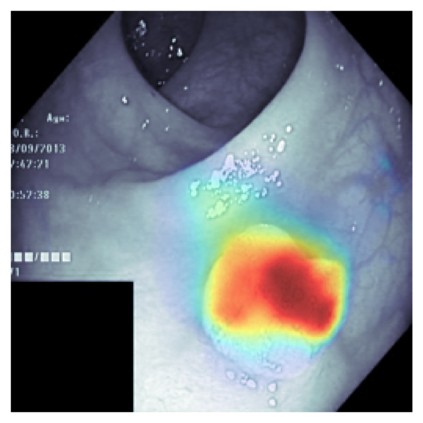

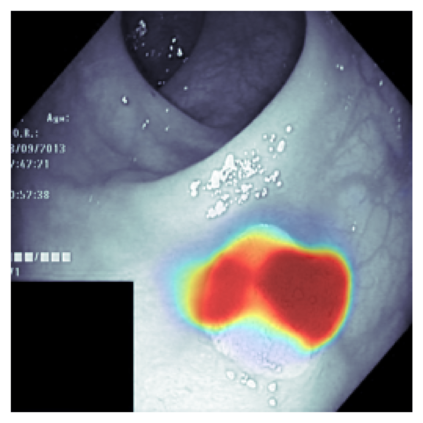

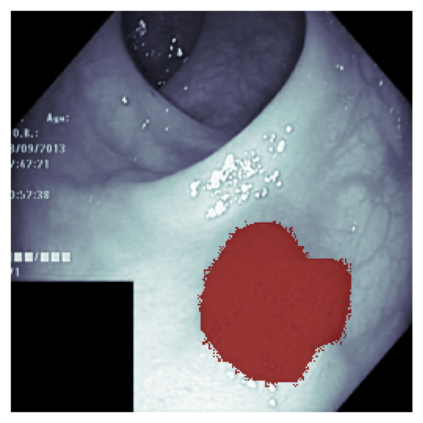

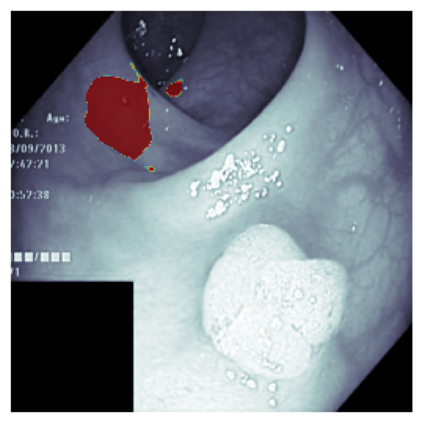

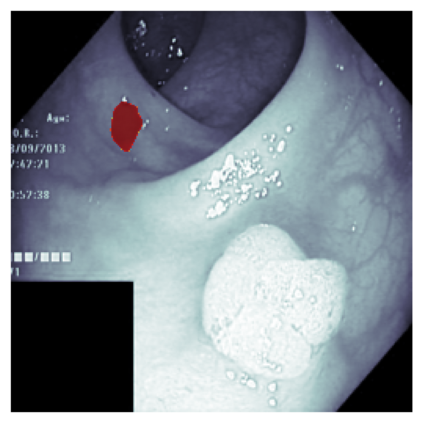

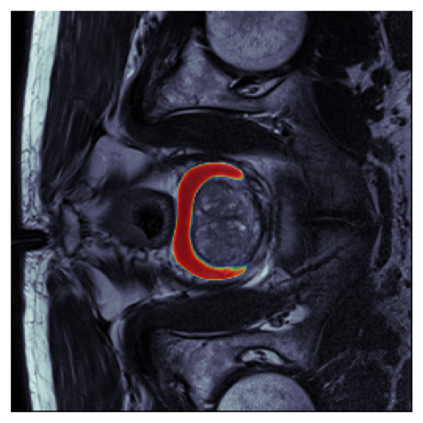

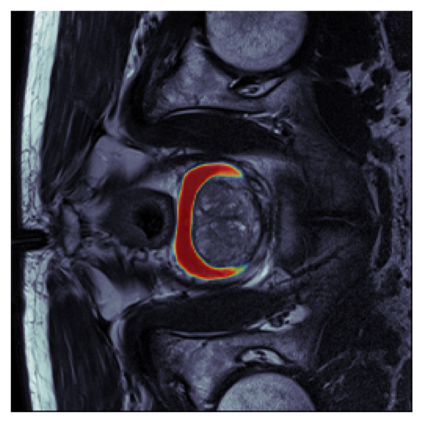

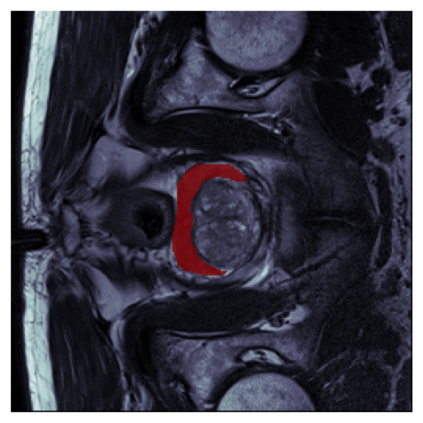

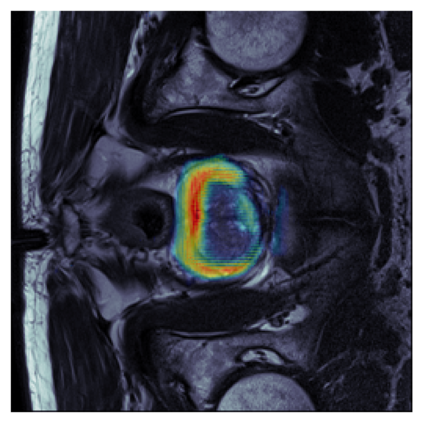

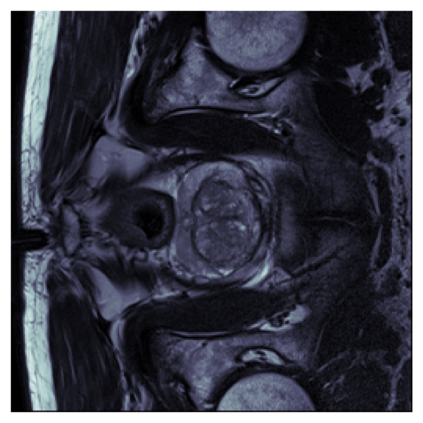

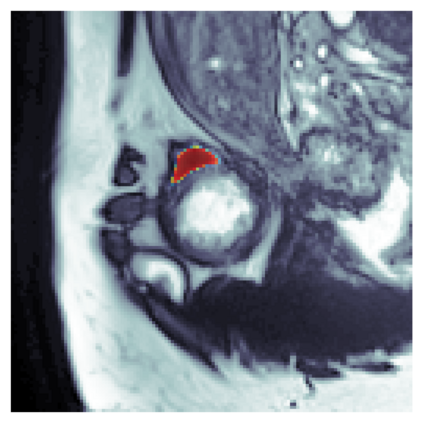

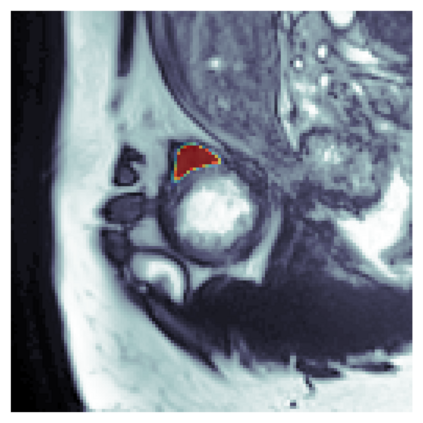

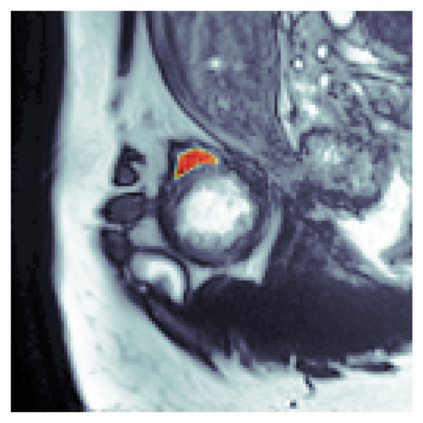

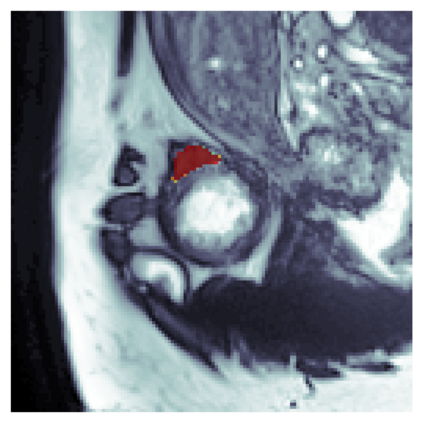

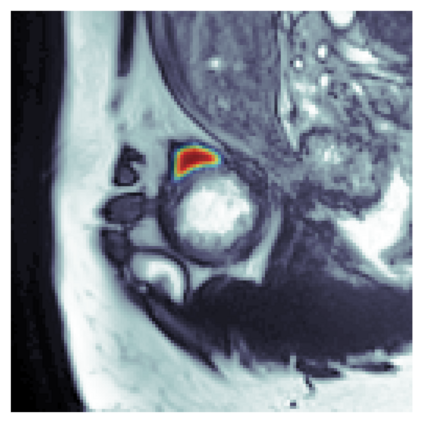

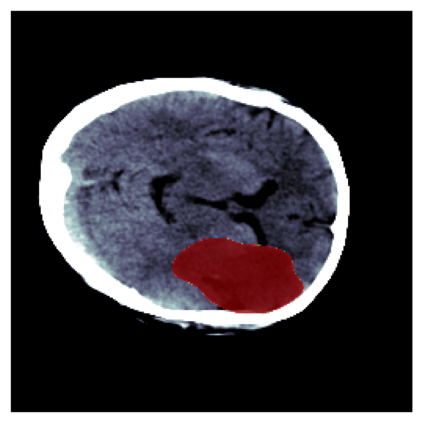

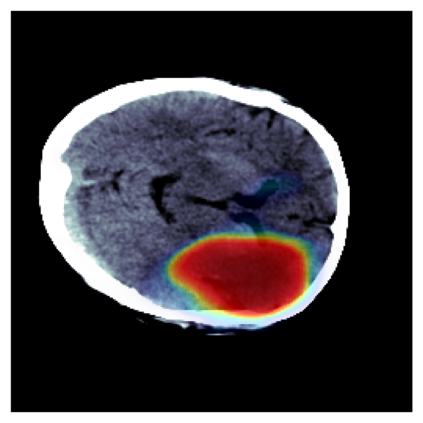

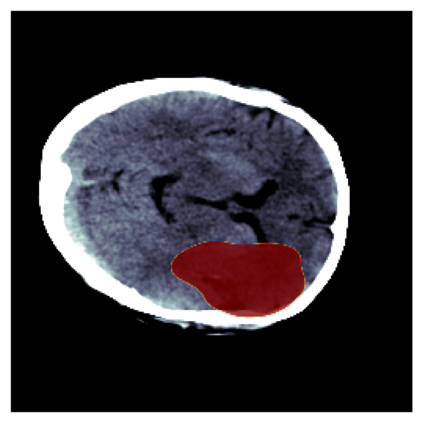

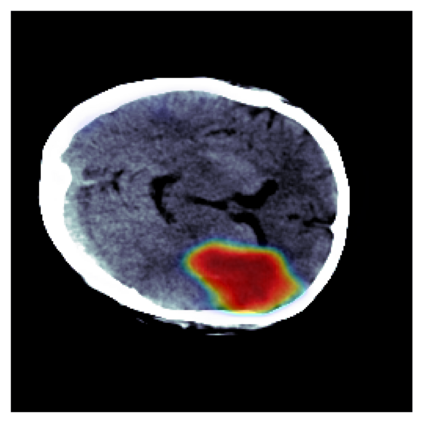

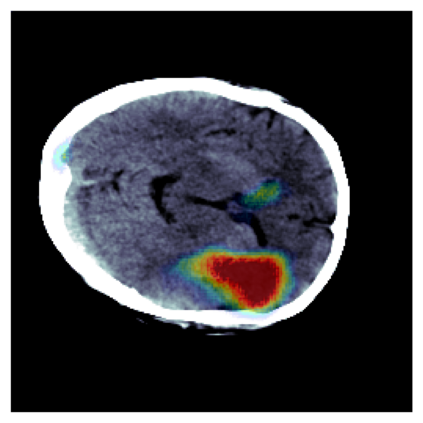

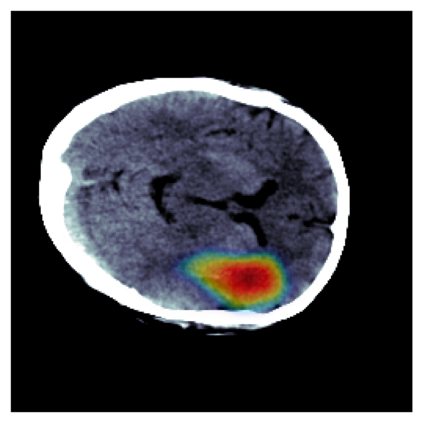

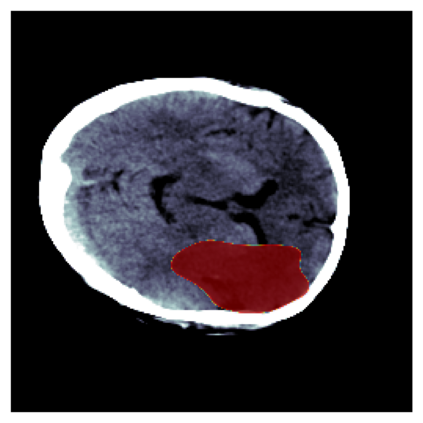

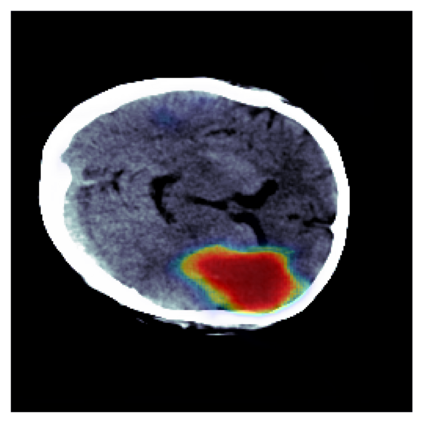

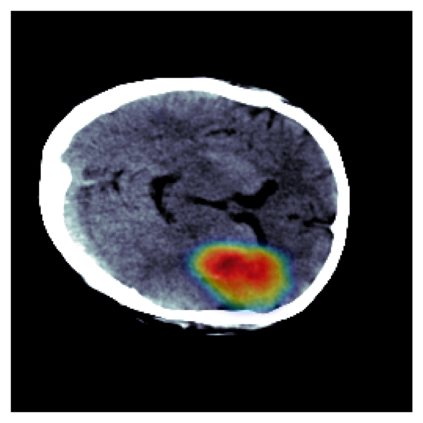

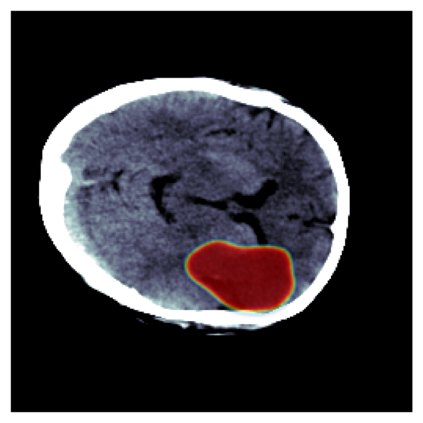

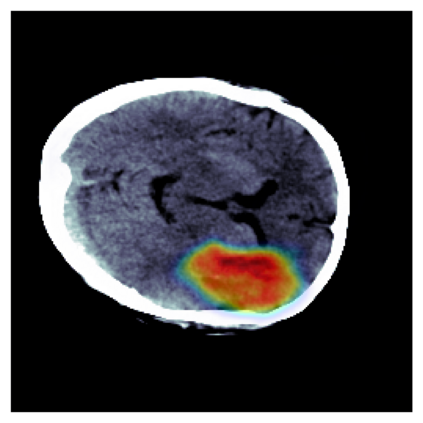

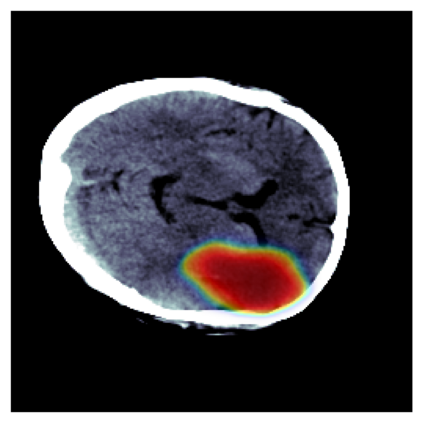

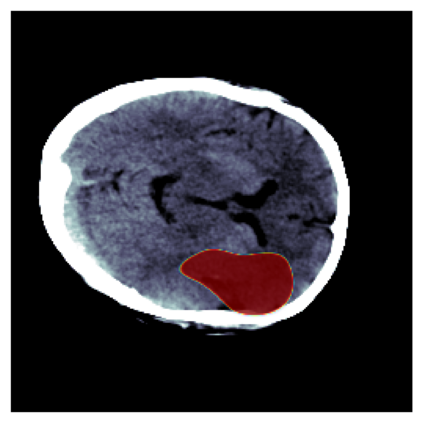

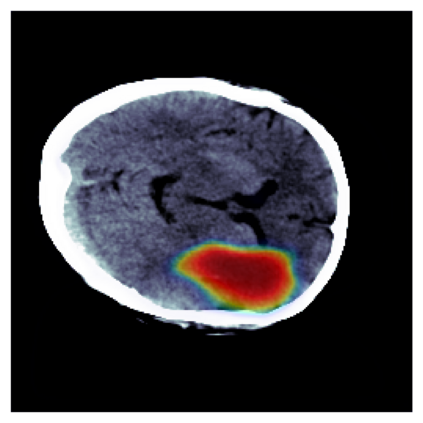

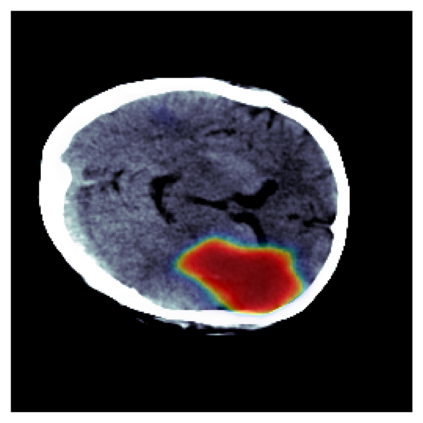

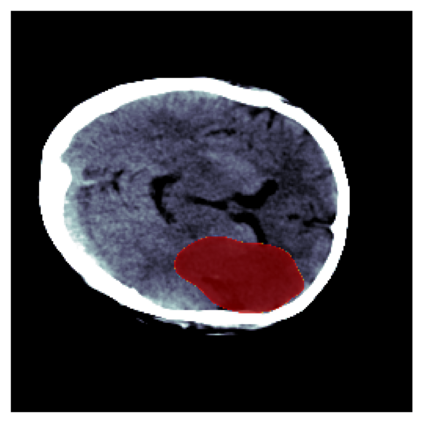

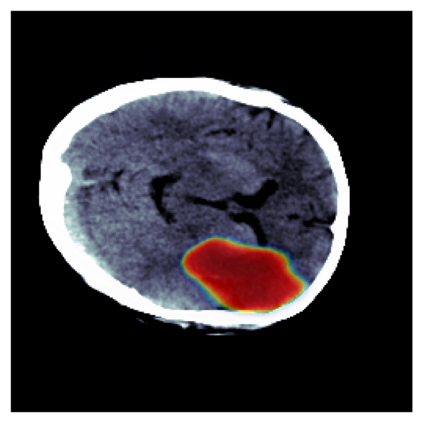

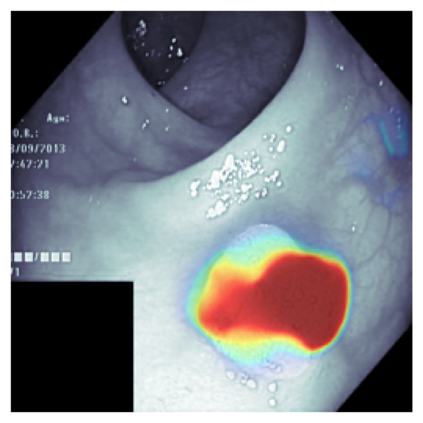

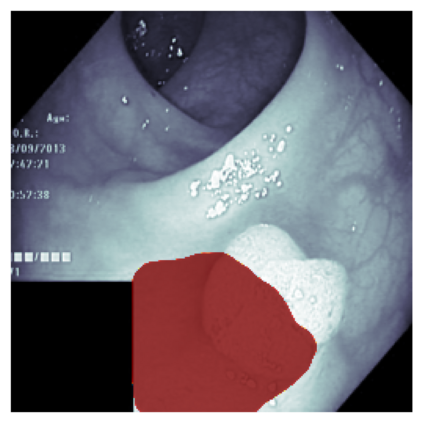

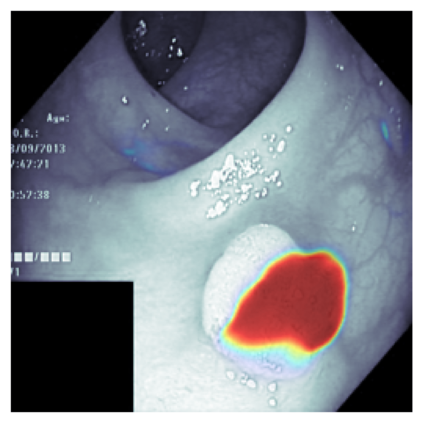

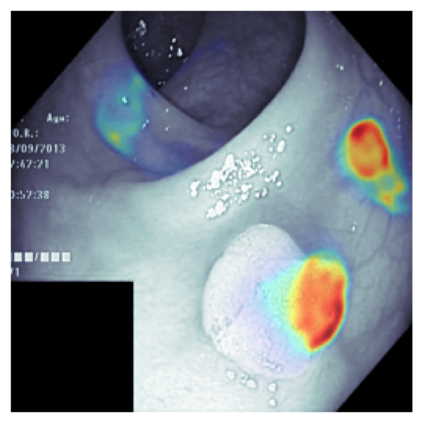

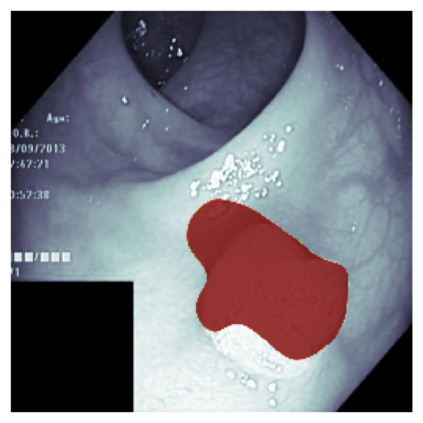

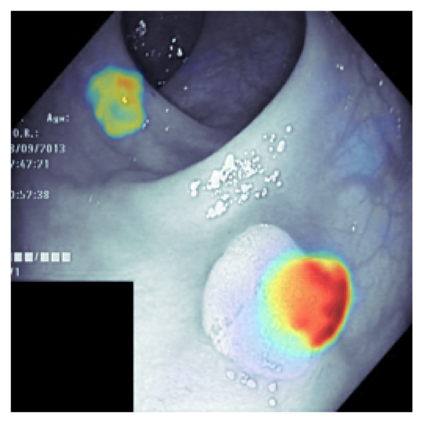

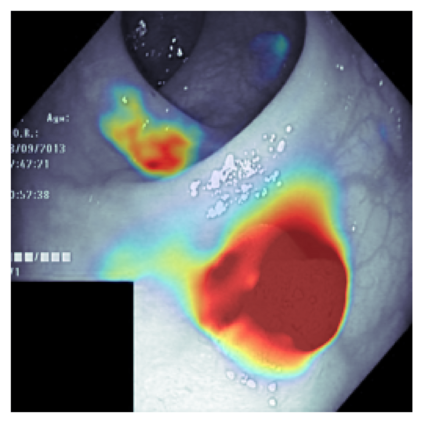

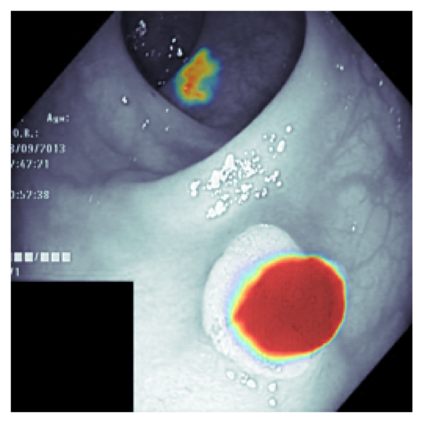

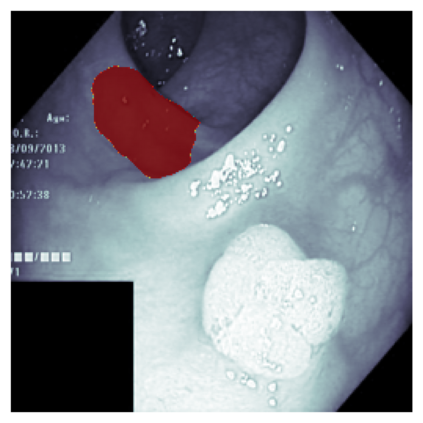

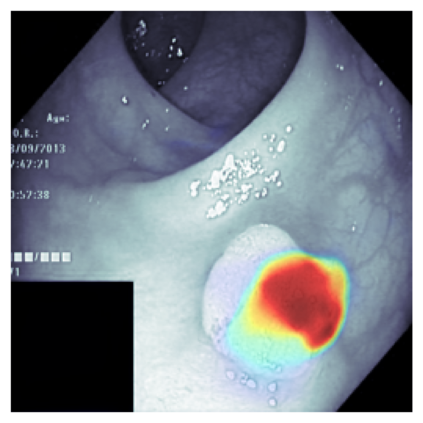

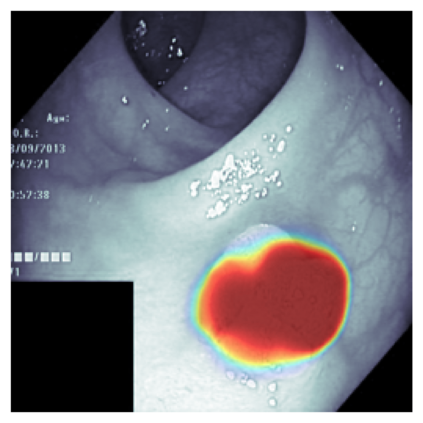

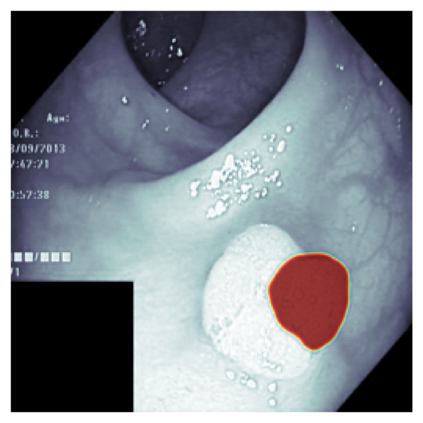

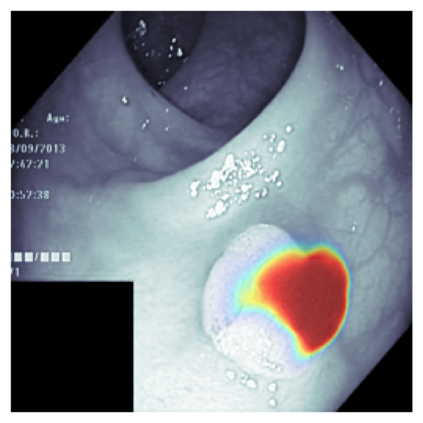

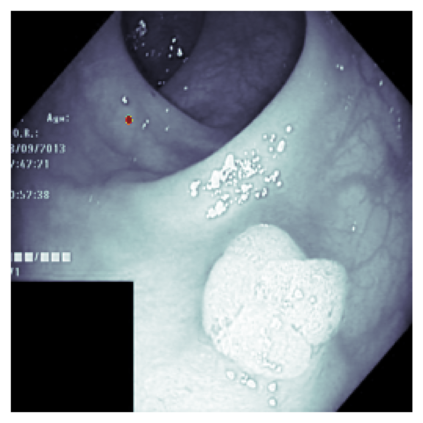

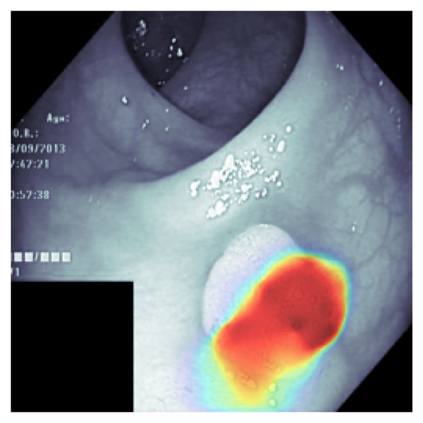

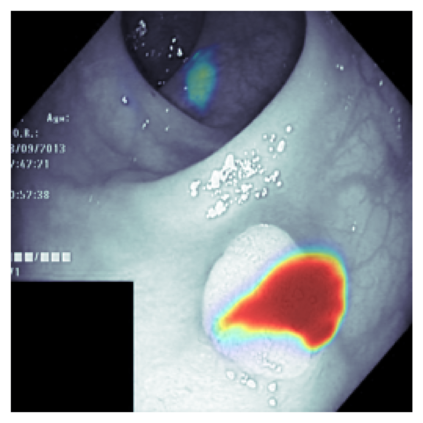

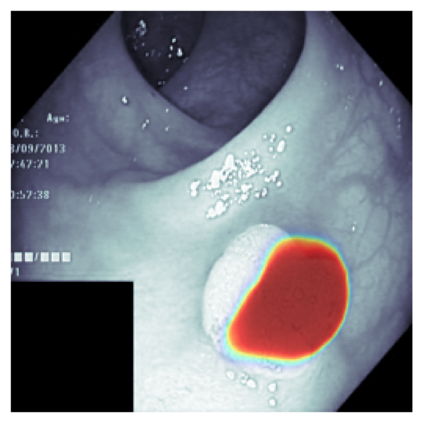

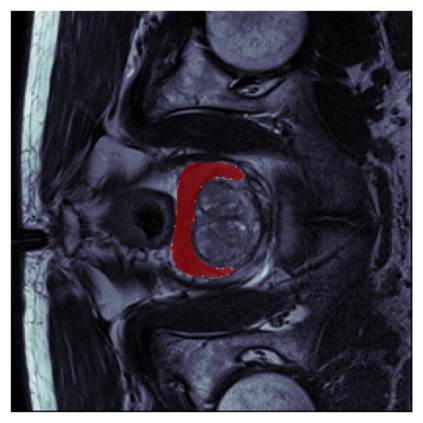

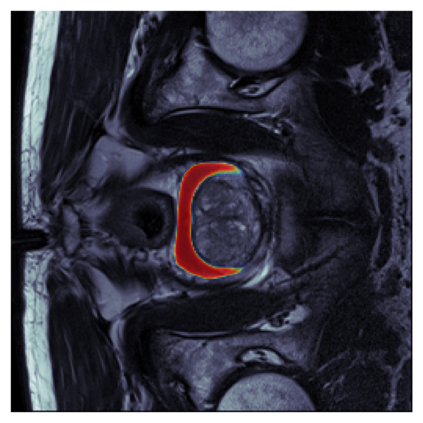

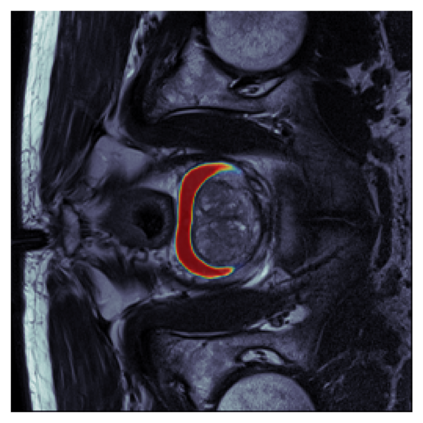

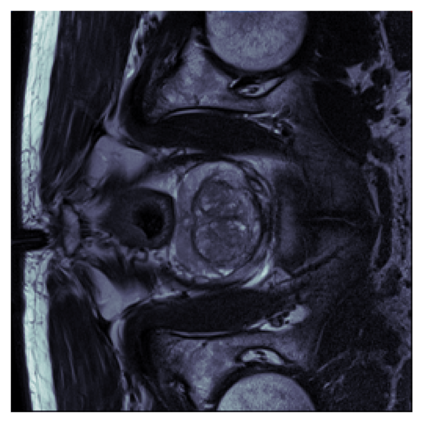

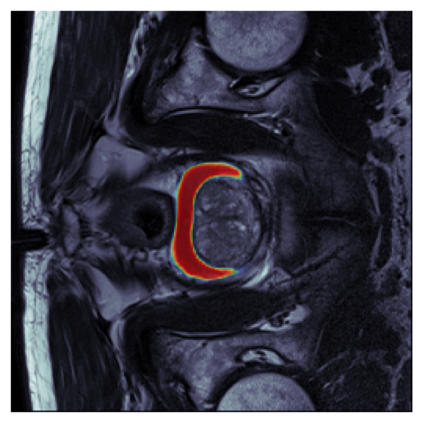

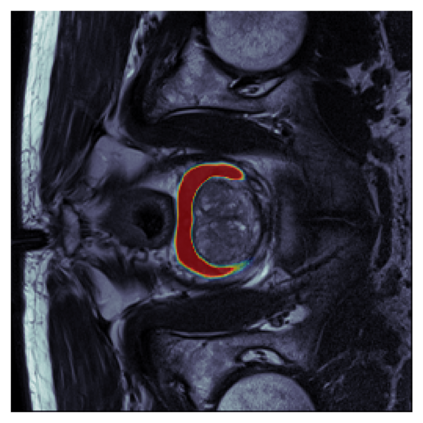

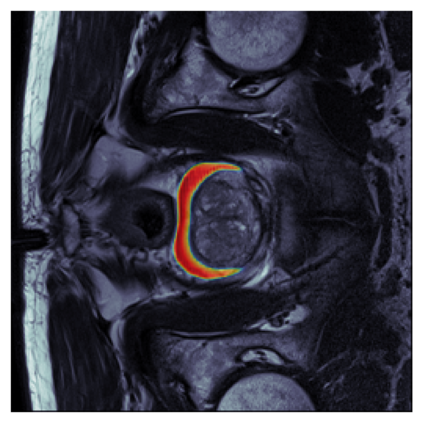

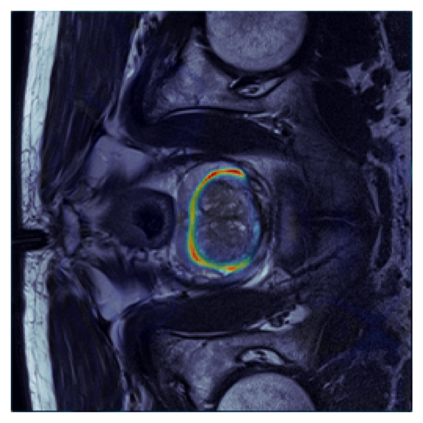

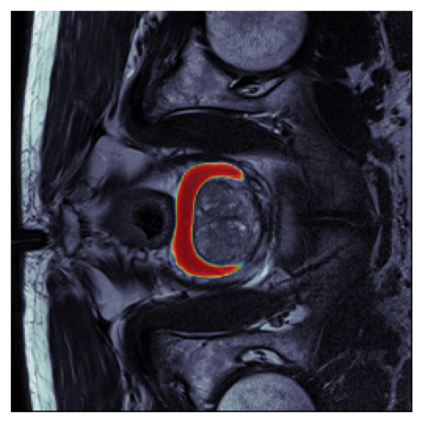

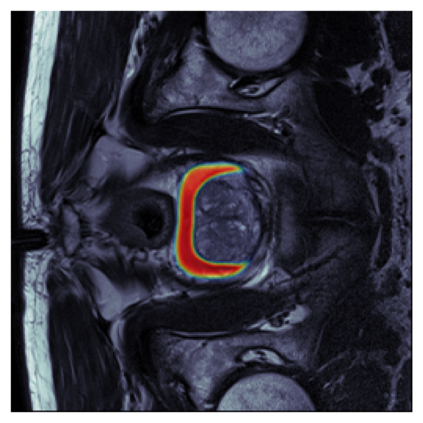

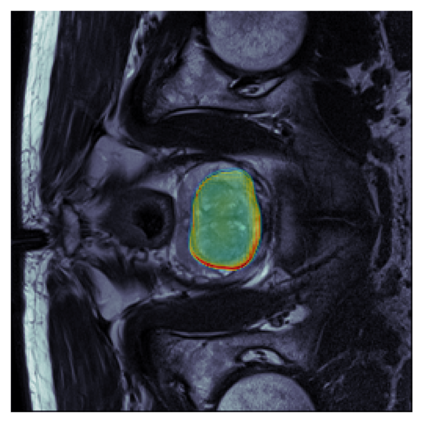

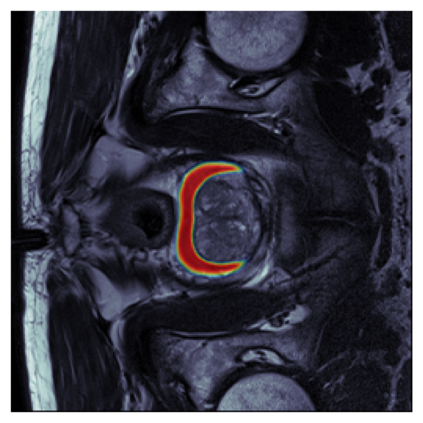

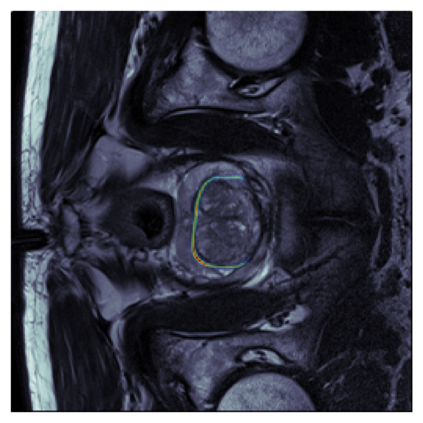

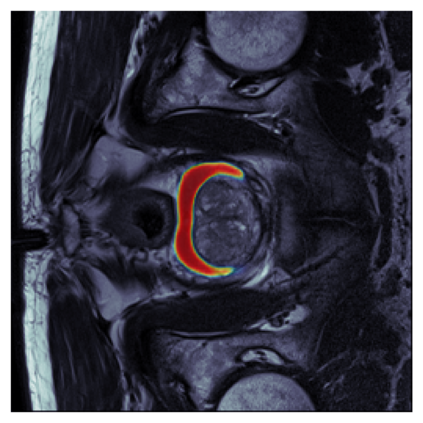

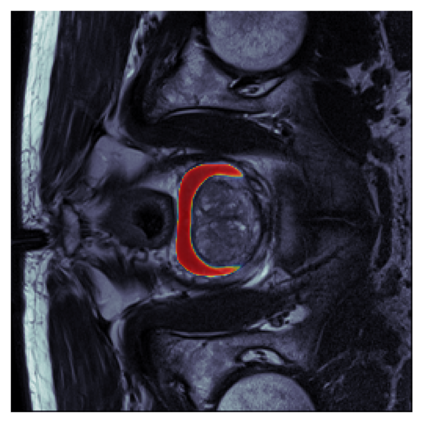

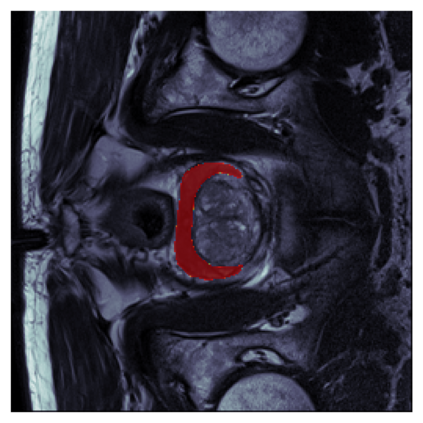

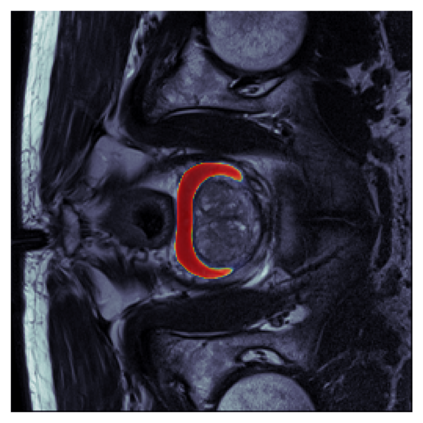

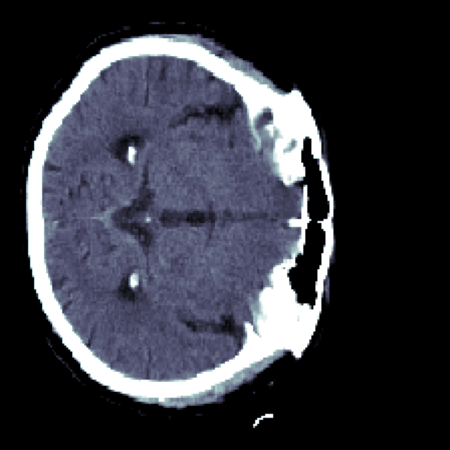

The sigmoid is the standard output activation function for binary classification and segmentation with neural networks. Still, a variety of other output activation functions exist, and some of them may lead to improved results in medical image segmentation. In this work, we consider how the asymptotic behavior of different output activation and loss functions affects the prediction probabilities and the corresponding segmentation errors. For cross entropy, we show that a faster rate of change of the activation function correlates with better predictions, while a slower rate of change can improve the calibration of probabilities. For Dice loss, we find that the arctangent activation function is superior to the sigmoid function. Furthermore, we provide a test space for arbitrary output activation functions in the area of medical image segmentation. We tested seven activation functions in combination with three loss functions on four different medical image segmentation tasks to classify which functions are best suited to this application scenario.
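To make the notion of asymptotic behavior concrete, the following is a minimal sketch in plain Python, not the implementation used in this work. It compares the sigmoid with an arctangent rescaled to the interval (0, 1), and defines simple cross-entropy and soft Dice losses on flat lists of probabilities. The rescaling `0.5 + atan(x) / pi`, the function names, and the smoothing constants are assumptions chosen for illustration; the abstract does not specify them.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def arctan_activation(x: float) -> float:
    """Arctangent rescaled to (0, 1).

    Assumption: this particular scaling is illustrative and may differ
    from the definition used in the paper.
    """
    return 0.5 + math.atan(x) / math.pi


def binary_cross_entropy(probs, targets, eps=1e-12):
    """Mean binary cross entropy; eps (an assumed constant) guards log(0)."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(probs)


def soft_dice_loss(probs, targets, eps=1e-6):
    """Soft Dice loss: 1 - 2|P.T| / (|P| + |T|), with assumed smoothing eps."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denom = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (denom + eps)


if __name__ == "__main__":
    # Gap to the saturation value 1 as the logit grows: the sigmoid closes
    # the gap exponentially, the rescaled arctangent only polynomially.
    for x in (1.0, 5.0, 10.0):
        print(x, 1.0 - sigmoid(x), 1.0 - arctan_activation(x))

    # Hypothetical logits and labels for a handful of pixels.
    logits = [4.0, 2.0, -1.0, -3.0]
    labels = [1, 1, 0, 0]
    for name, act in (("sigmoid", sigmoid), ("arctan", arctan_activation)):
        probs = [act(z) for z in logits]
        print(name, binary_cross_entropy(probs, labels),
              soft_dice_loss(probs, labels))
```

For the same logit, the rescaled arctangent stays further from 0 and 1 than the sigmoid does, which is one way to picture a slower rate of change producing less extreme probabilities. Whether this translates into better calibration or segmentation quality is an empirical question that the sketch does not answer.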
