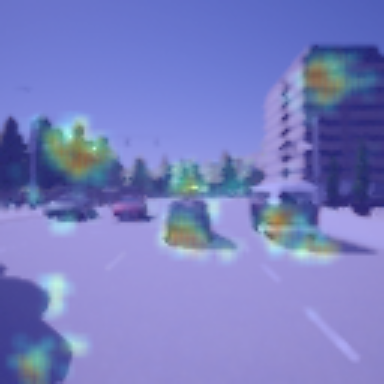

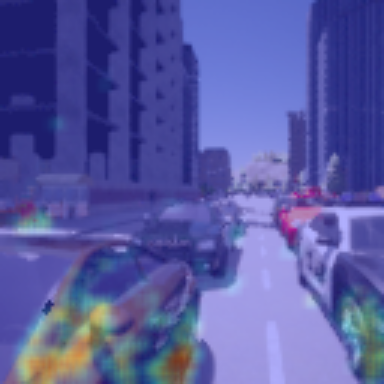

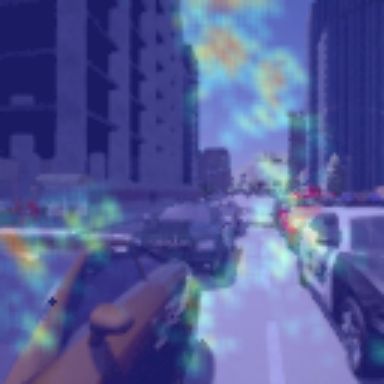

In this work, we evaluate the effectiveness of representation learning approaches for decision making in visually complex environments. Representation learning is essential for effective reinforcement learning (RL) from high-dimensional inputs. Unsupervised representation learning approaches based on reconstruction, prediction or contrastive learning have shown substantial learning efficiency gains. Yet, they have mostly been evaluated in clean laboratory or simulated settings. In contrast, real environments are visually complex and contain substantial amounts of clutter and distractors. Unsupervised representations will learn to model such distractors, potentially impairing the agent's learning efficiency. In contrast, an alternative class of approaches, which we call task-induced representation learning, leverages task information such as rewards or demonstrations from prior tasks to focus on task-relevant parts of the scene and ignore distractors. We investigate the effectiveness of unsupervised and task-induced representation learning approaches on four visually complex environments, from Distracting DMControl to the CARLA driving simulator. For both, RL and imitation learning, we find that representation learning generally improves sample efficiency on unseen tasks even in visually complex scenes and that task-induced representations can double learning efficiency compared to unsupervised alternatives. Code is available at https://clvrai.com/tarp.

翻译:在这项工作中,我们评价了在视觉复杂环境中决策的代表学习方法的有效性; 代表学习对于从高层次投入中有效强化学习(RL)至关重要; 基于重建、预测或对比学习的未经监督的代表学习方法表明,在学习效率方面取得了很大的提高; 然而,这些方法大多是在清洁实验室或模拟环境中评价的; 相反,真实环境在视觉上是复杂的,含有大量的杂乱和分散物; 未经监督的表述将学会模拟这种分散物,有可能损害代理人的学习效率; 相比之下,另一种替代方法,我们称之为任务引起的代表学习,利用先前任务中的奖励或演示等任务信息,侧重于与任务有关的场景部分和忽略分流器; 我们调查了从DMCM调控到CARLA驾驶模拟器等四个视觉复杂环境中的未经监督和任务引起的代表学习方法的有效性; 关于RL和模仿学习,我们发现,代表学习一般会提高即使是在视觉复杂场景中的无形任务中的抽样效率,任务由任务引起的演示能够重复学习效率,而不用监视的替代方法。