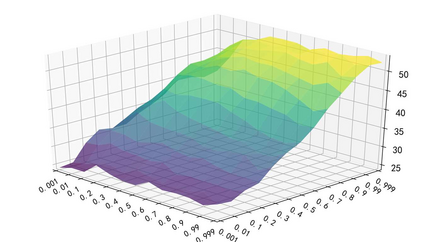

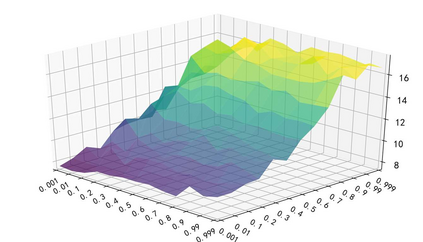

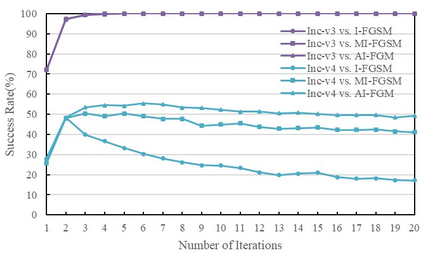

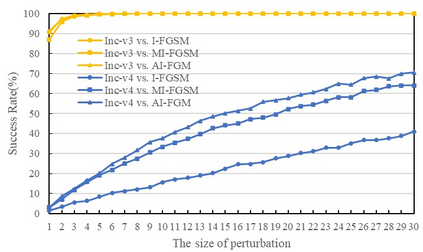

Convolutional neural networks have outperformed humans on image recognition tasks, but they remain vulnerable to adversarial examples. Because these examples are produced by adding imperceptible noise to normal images, their existence poses a potential security threat to deep learning systems. Adversarial examples with strong attack performance can also serve as a tool for evaluating the robustness of a model. However, the success rate of adversarial attacks in black-box settings still leaves room for improvement. This study therefore combines an improved Adam gradient descent algorithm with iterative gradient-based attack methods, and uses the resulting Adam Iterative Fast Gradient Method to improve the transferability of adversarial examples. Extensive experiments on ImageNet show that the proposed method achieves a higher attack success rate than existing iterative methods. Our best black-box attack achieved a success rate of 81.9% on a normally trained network and 38.7% on an adversarially trained network.
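To make the core idea concrete, here is a minimal sketch of an iterative L∞ attack whose per-step update comes from Adam moment estimates instead of the raw gradient sign used by I-FGSM. It is not the authors' method: the abstract does not describe what makes their Adam variant "improved", so this sketch assumes standard Adam (bias-corrected first and second moments). The toy linear "surrogate model", the step size `alpha = eps / steps`, and the names `toy_loss_and_grad` and `adam_iterative_attack` are all hypothetical stand-ins; in practice the gradient would come from backpropagation through a white-box CNN, and the resulting examples would then be transferred to black-box models.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def toy_loss_and_grad(x: Vector, w: Vector, b: float, y: int) -> Tuple[float, Vector]:
    """Hypothetical surrogate: logistic loss of a linear model, plus its gradient w.r.t. x.

    logit = w.x + b, label y in {-1, +1}, loss = log(1 + exp(-y * logit)).
    Stands in for backpropagation through a real CNN.
    """
    logit = sum(wi * xi for wi, xi in zip(w, x)) + b
    margin = y * logit
    loss = math.log1p(math.exp(-margin))
    # d loss / d logit = -y * sigmoid(-margin) = -y / (1 + exp(margin))
    dlogit = -y / (1.0 + math.exp(margin))
    return loss, [dlogit * wi for wi in w]


def adam_iterative_attack(
    x0: Vector,
    grad_fn: Callable[[Vector], Vector],
    eps: float,
    steps: int = 10,
    beta1: float = 0.9,
    beta2: float = 0.999,
    delta: float = 1e-8,
) -> Vector:
    """Iterative L_inf attack using standard Adam moments (an assumption, not the paper's variant)."""
    alpha = eps / steps  # per-iteration step size, as commonly used with I-FGSM
    m = [0.0] * len(x0)  # first moment: running average of gradients
    v = [0.0] * len(x0)  # second moment: running average of squared gradients
    x = list(x0)
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
        # Bias correction for the zero-initialized moments.
        m_hat = [mi / (1 - beta1 ** t) for mi in m]
        v_hat = [vi / (1 - beta2 ** t) for vi in v]
        # Gradient ascent: step in the direction that increases the loss.
        x = [xi + alpha * mh / (math.sqrt(vh) + delta) for xi, mh, vh in zip(x, m_hat, v_hat)]
        # Project into the eps-ball around x0 and the valid pixel range [0, 1].
        x = [min(max(xi, x0i - eps, 0.0), x0i + eps, 1.0) for xi, x0i in zip(x, x0)]
    return x


if __name__ == "__main__":
    w, b, y = [0.5, -1.0, 2.0], 0.1, 1
    x0 = [0.2, 0.6, 0.4]
    grad = lambda x: toy_loss_and_grad(x, w, b, y)[1]
    x_adv = adam_iterative_attack(x0, grad, eps=8 / 255)
    print("clean loss:", toy_loss_and_grad(x0, w, b, y)[0])
    print("adversarial loss:", toy_loss_and_grad(x_adv, w, b, y)[0])
```

Unlike I-FGSM, the step here is not passed through `sign`: applying `sign` to `m_hat / (sqrt(v_hat) + delta)` would discard the Adam scaling entirely, because the denominator is always positive. The projection step keeps every iterate inside the ε-ball, so the final perturbation respects the same budget as the sign-based methods it is compared against.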

翻译:进化神经网络在图像识别任务方面的表现超过了人类,但它们仍然容易受到来自对抗性实例的攻击。由于这些数据是通过在正常图像中添加无法察觉的噪音而生成的,因此它们的存在对深层学习系统构成了潜在的安全威胁。具有强力攻击性能的典型对抗性攻击性例子也可以作为一种工具来评价一个模型的坚固性。然而,在黑箱环境中,对抗性攻击的成功率仍有待进一步提高。因此,本研究将改进的亚当梯度下行算法与迭代梯度攻击法结合起来。由此产生的亚当迭代性快速渐进法随后被用来提高对抗性攻击性例子的可转移性。图象网的广泛实验表明,拟议的方法提供了比现有迭接方法更高的攻击性成功率。我们最好的黑箱攻击在通常受过训练的网络上取得了81.9%的成功率,在经过对抗性攻击的网络上则取得了38.7%的成功率。