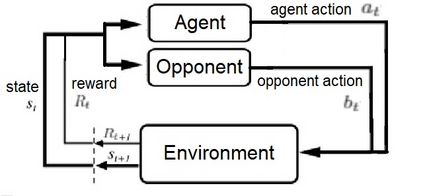

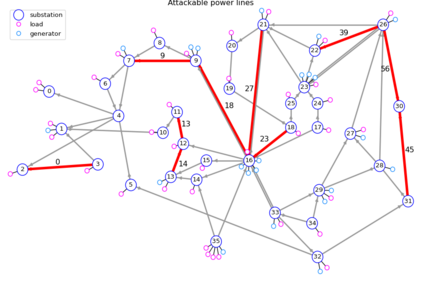

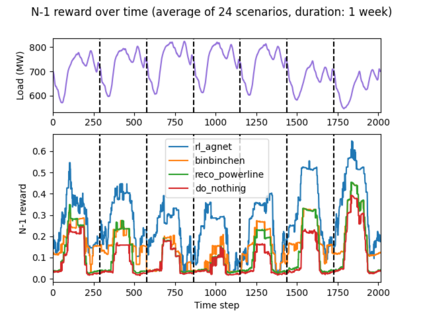

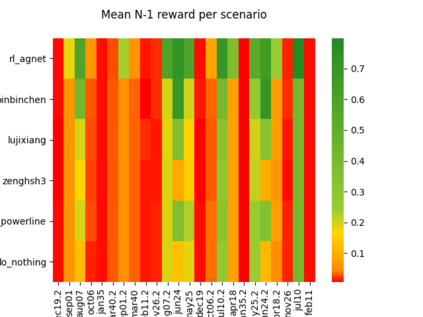

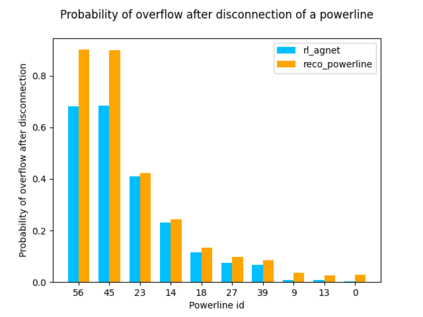

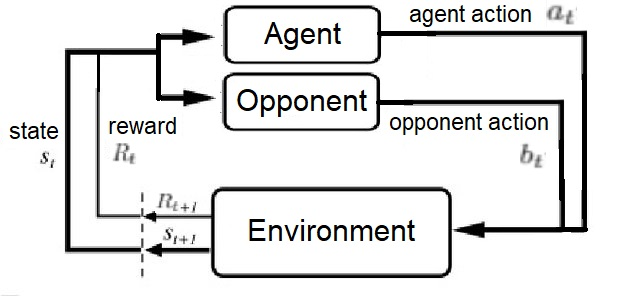

We propose a new adversarial training approach for injecting robustness when designing controllers for upcoming cyber-physical power systems. Previous approaches that rely heavily on simulation cannot cope with the rising complexity and are too computationally costly to use online. In comparison, our method proves to be computationally efficient online while displaying useful robustness properties. To do so, we model an adversarial framework, propose an implementation of a fixed opponent policy, and test it on an L2RPN (Learning to Run a Power Network) environment. That environment is a synthetic but realistic model of a cyber-physical system representing one third of the IEEE 118 grid. Using adversarial testing, we analyze the results of trained agents submitted to the robustness track of the L2RPN competition. We then further assess the performance of those agents with regard to the continuous N-1 problem through tailored evaluation metrics. We find that some adversarially trained agents demonstrate interesting preventive behaviors in that regard, which we discuss.
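To make the notion of a fixed opponent policy concrete, the following is a minimal sketch of a non-learning adversary that periodically disconnects one power line. It is an illustration under stated assumptions, not the opponent used in the paper or in the L2RPN competition: the class `FixedOpponent`, the function `run_episode`, the `period` parameter, and the rule of targeting the most heavily loaded attackable line are all hypothetical, and the example deliberately avoids any real power-grid library.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class FixedOpponent:
    """Hypothetical non-learning adversary: it attacks one line every `period` steps."""

    attackable_lines: List[str]  # lines the opponent is allowed to trip (assumed)
    period: int = 5              # number of steps between two attacks (assumed)
    _step: int = field(default=0, init=False)

    def act(self, loading: Dict[str, float]) -> Optional[str]:
        """Return the name of the line to disconnect at this step, or None."""
        self._step += 1
        if self._step % self.period != 0:
            return None
        # Assumed targeting rule: pick the most heavily loaded attackable line.
        return max(self.attackable_lines, key=lambda name: loading[name])


def run_episode(
    opponent: FixedOpponent, loadings: List[Dict[str, float]]
) -> List[Tuple[int, str]]:
    """Replay a sequence of line-loading snapshots and log (step, line) attacks."""
    attacks = []
    for t, loading in enumerate(loadings, start=1):
        target = opponent.act(loading)
        if target is not None:
            attacks.append((t, target))
    return attacks
```

Because such a policy is fixed rather than learned, its cost at each step is a single lookup over the attackable lines, which is the kind of cheap adversary an agent could plausibly be trained and tested against.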
