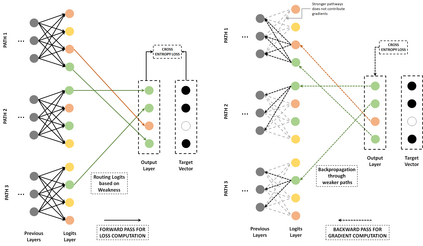

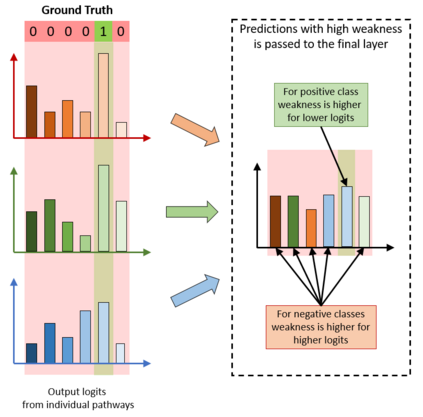

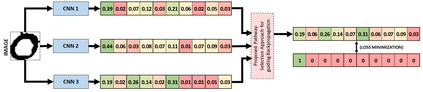

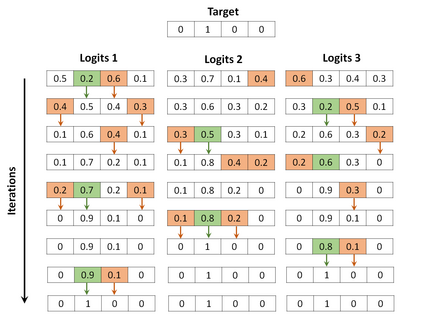

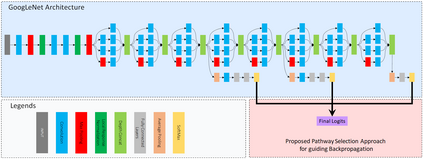

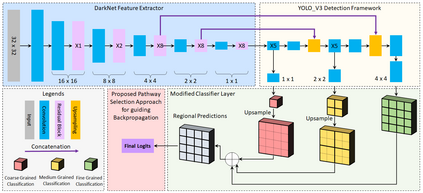

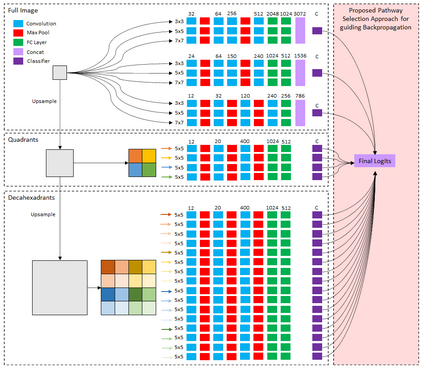

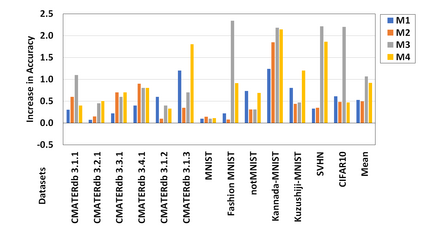

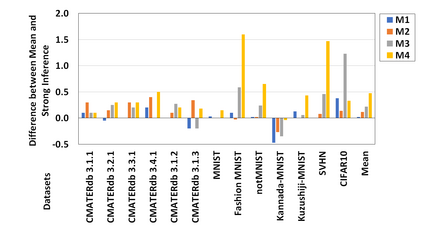

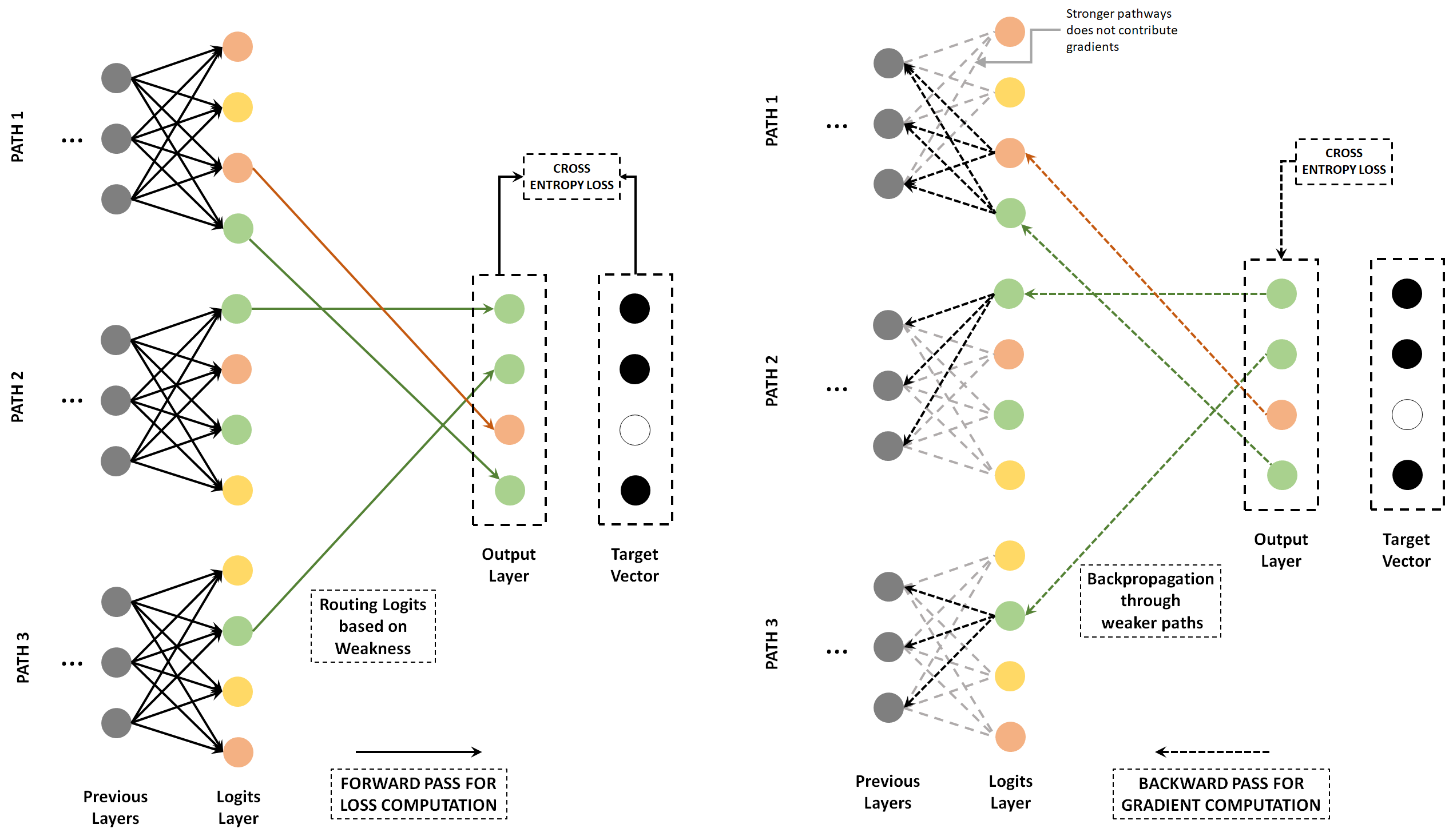

Convolutional neural networks often generate multiple logits and merge them with simple techniques such as addition or averaging before computing the loss. This, however, distributes gradients equally among all paths. The proposed approach instead guides the backpropagation gradients along the weakest concept representations. A weakness score defines the class-specific performance of each individual pathway and is then used to create a logit that guides gradients along the weakest pathways. The proposed approach has been shown to perform better than traditional column merging techniques and can be used in several application scenarios. Not only can the proposed model serve as an efficient technique for training multiple instances of a model in parallel, but CNNs with multiple output branches have also been shown to perform better with the proposed upgrade. Various experiments establish, both empirically and statistically, the flexibility of the learning technique, which is simple yet effective in various multi-objective scenarios.
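The abstract does not give the formula for the weakness score or for the merged logit, so the following is only a minimal NumPy sketch of the general idea under stated assumptions. Here the weakness of a pathway for a class is assumed to be one minus the mean softmax probability that the pathway assigns to the correct class, and the merged logit is assumed to be a per-class weighted sum of pathway logits with weights proportional to weakness. The function names `weakness_scores`, `pathway_weights`, and `merge_logits` are hypothetical and not taken from the paper.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def weakness_scores(path_logits, labels):
    """Assumed definition (not from the paper): 1 - mean true-class probability.

    path_logits: array of shape (P, N, C) for P pathways, N samples, C classes.
    labels:      integer array of shape (N,).
    Returns an array of shape (P, C); higher means the pathway is weaker
    on that class. Classes absent from the batch keep a score of 1 for
    every pathway, which later yields uniform weights.
    """
    num_paths, num_samples, num_classes = path_logits.shape
    probs = softmax(path_logits, axis=-1)
    # Probability each pathway assigns to the correct class: shape (P, N).
    true_prob = probs[:, np.arange(num_samples), labels]
    scores = np.ones((num_paths, num_classes))
    for c in range(num_classes):
        mask = labels == c
        if mask.any():
            scores[:, c] = 1.0 - true_prob[:, mask].mean(axis=1)
    return scores


def pathway_weights(scores):
    """Normalise weakness across pathways so weights sum to 1 per class."""
    total = np.maximum(scores.sum(axis=0, keepdims=True), 1e-12)
    return scores / total


def merge_logits(path_logits, weights):
    """Weighted sum over pathways; weights has shape (P, C)."""
    return (weights[:, None, :] * path_logits).sum(axis=0)


# Toy batch: pathway 0 is confident and correct, pathway 1 is uninformative.
labels = np.array([0, 0, 1, 1])
strong = np.array([[4.0, 0.0], [4.0, 0.0], [0.0, 4.0], [0.0, 4.0]])
weak = np.zeros((4, 2))
path_logits = np.stack([strong, weak])  # shape (2, 4, 2)

scores = weakness_scores(path_logits, labels)
weights = pathway_weights(scores)
merged = merge_logits(path_logits, weights)
```

In this sketch the gradient of a loss on `merged` with respect to a pathway's logit for class `c` is scaled by that pathway's weight for `c`, so the weaker pathway receives the larger share of the gradient. Plain averaging corresponds to setting every weight to `1 / P`.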
