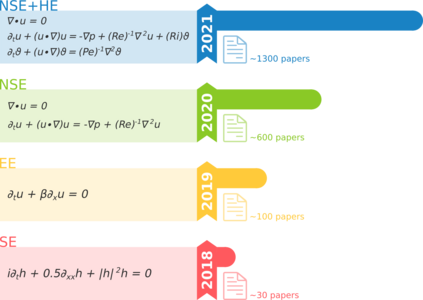

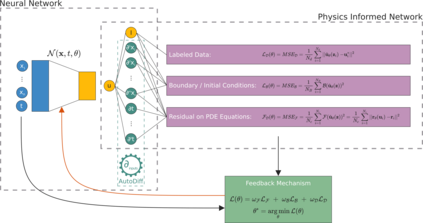

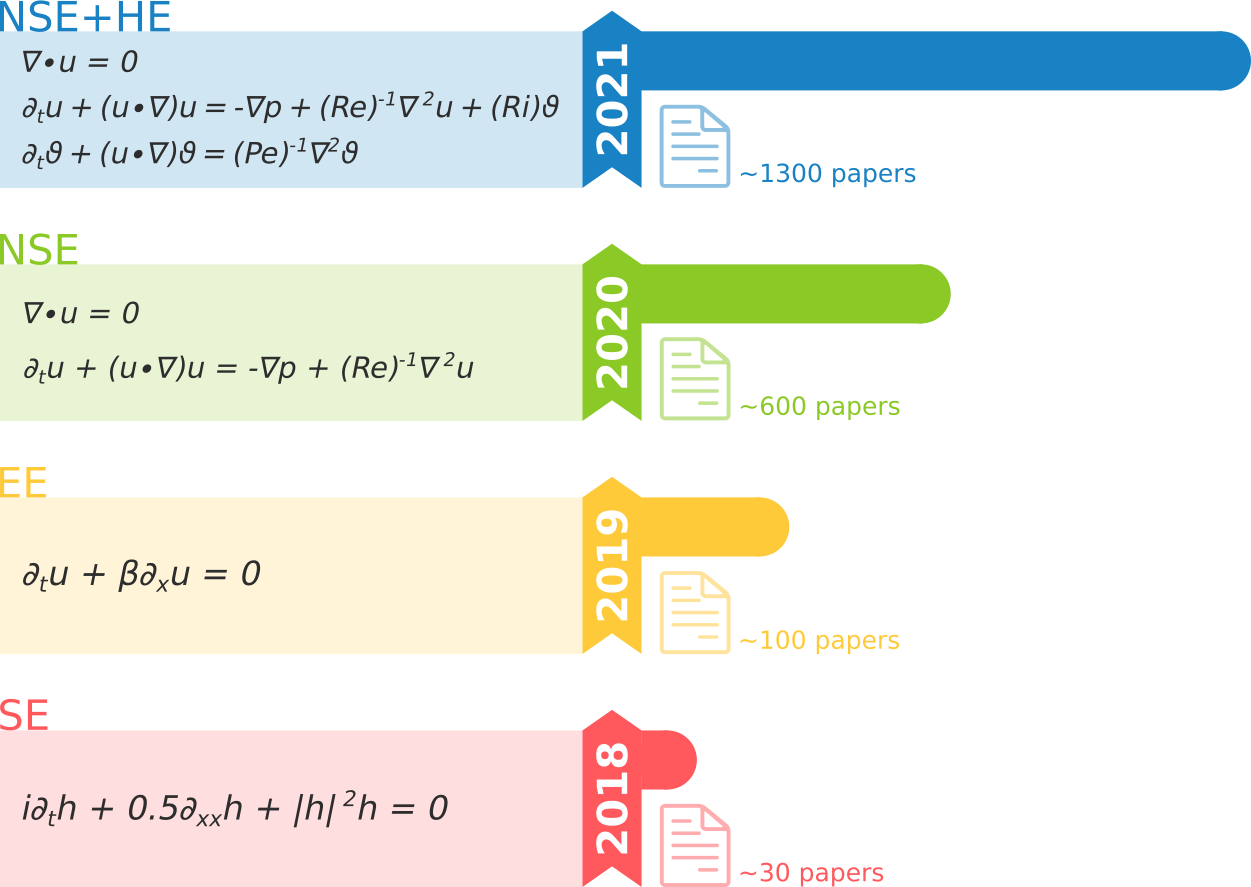

Physics-Informed Neural Networks (PINNs) are neural networks (NNs) that encode model equations, such as Partial Differential Equations (PDEs), as a component of the neural network itself. PINNs are now used to solve PDEs, fractional equations, and integro-differential equations. The methodology has emerged as a multi-task learning framework in which an NN must fit observed data while reducing a PDE residual. This article provides a comprehensive review of the literature on PINNs. While the primary goal of the study is to characterize these networks and their advantages and disadvantages, the review also covers publications on a wider range of topics, including physics-constrained neural networks (PCNNs), where the initial or boundary conditions are embedded directly in the NN structure rather than in the loss function. The study indicates that most research has focused on customizing the PINN through different activation functions, gradient optimization techniques, neural network structures, and loss function structures. PINNs have been applied to a wide range of problems and have been shown to be more feasible in some contexts than classical numerical techniques such as the Finite Element Method (FEM). Even so, advancements are still possible, most notably on theoretical issues that remain unresolved.
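To make the distinction between a loss-based PINN and a structure-based PCNN concrete, the following is a minimal sketch and not code from the reviewed literature. It assumes a toy problem chosen purely for illustration, the ODE u'(x) + u(x) = 0 with u(0) = 1, as a stand-in for a PDE. The helper names (`mlp`, `soft_u`, `hard_u`, `loss_terms`, `total_loss`), the network size, the synthetic "observations", and the loss weights are all hypothetical. The input derivative is written in closed form for a one-hidden-layer tanh network, where a practical implementation would normally rely on automatic differentiation, and no training loop is shown.

```python
import math
import random


def mlp(params, x):
    """One-hidden-layer tanh network N(x); returns (N, dN/dx) in closed form."""
    w, b, v, c = params
    value, slope = c, 0.0
    for wj, bj, vj in zip(w, b, v):
        t = math.tanh(wj * x + bj)
        value += vj * t
        slope += vj * wj * (1.0 - t * t)  # d/dx tanh(z) = (1 - tanh(z)^2) * dz/dx
    return value, slope


def soft_u(params, x):
    """PINN-style ansatz: u = N(x); the initial condition is only penalized."""
    return mlp(params, x)


def hard_u(params, x):
    """PCNN-style ansatz: u = 1 + x * N(x), so u(0) = 1 for any parameters."""
    n, dn = mlp(params, x)
    return 1.0 + x * n, n + x * dn  # product rule for the derivative


def loss_terms(u_fn, params, data, collocation):
    """Separate loss terms for the assumed problem u' + u = 0, u(0) = 1."""
    data_term = sum((u_fn(params, x)[0] - y) ** 2 for x, y in data) / len(data)
    residuals = []
    for x in collocation:
        u, du = u_fn(params, x)
        residuals.append(du + u)  # equation residual at a collocation point
    residual_term = sum(r * r for r in residuals) / len(residuals)
    initial_term = (u_fn(params, 0.0)[0] - 1.0) ** 2
    return {"data": data_term, "residual": residual_term, "initial": initial_term}


def total_loss(terms, w_residual=1.0, w_initial=1.0):
    """Multi-task objective: data misfit plus weighted physics penalties."""
    return terms["data"] + w_residual * terms["residual"] + w_initial * terms["initial"]


# Untrained, randomly initialized parameters (hypothetical sizes and ranges).
rng = random.Random(0)
hidden = 8
params = (
    [rng.uniform(-1, 1) for _ in range(hidden)],  # input weights
    [rng.uniform(-1, 1) for _ in range(hidden)],  # hidden biases
    [rng.uniform(-1, 1) for _ in range(hidden)],  # output weights
    rng.uniform(-1, 1),                           # output bias
)
data = [(x, math.exp(-x)) for x in (0.25, 0.5, 1.0)]  # synthetic observations
collocation = [i / 10 for i in range(11)]             # points where the residual is evaluated

soft = loss_terms(soft_u, params, data, collocation)
hard = loss_terms(hard_u, params, data, collocation)
print("soft constraint:", soft, "total:", total_loss(soft))
print("hard constraint:", hard, "total:", total_loss(hard))
```

In this sketch the `initial` term of the hard-constrained variant is zero by construction, so only the data and residual terms would remain to be minimized, whereas the soft-constrained variant has to balance all three terms through the weights.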
