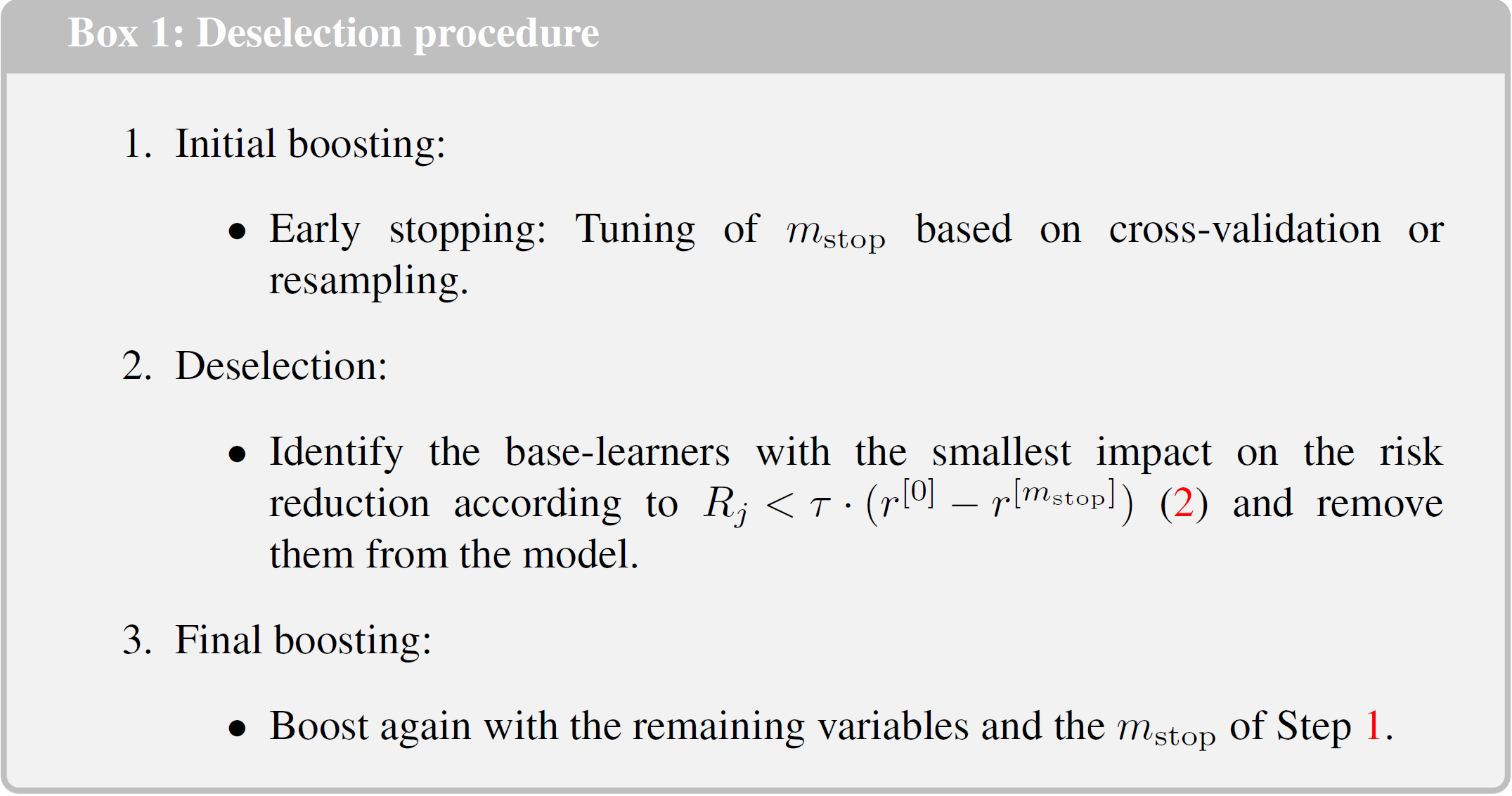

We present a new procedure for enhanced variable selection for component-wise gradient boosting. Statistical boosting is a computational approach that emerged from machine learning, which allows to fit regression models in the presence of high-dimensional data. Furthermore, the algorithm can lead to data-driven variable selection. In practice, however, the final models typically tend to include too many variables in some situations. This occurs particularly for low-dimensional data (p<n), where we observe a slow overfitting behavior of boosting. As a result, more variables get included into the final model without altering the prediction accuracy. Many of these false positives are incorporated with a small coefficient and therefore have a small impact, but lead to a larger model. We try to overcome this issue by giving the algorithm the chance to deselect base-learners with minor importance. We analyze the impact of the new approach on variable selection and prediction performance in comparison to alternative methods including boosting with earlier stopping as well as twin boosting. We illustrate our approach with data of an ongoing cohort study for chronic kidney disease patients, where the most influential predictors for the health-related quality of life measure are selected in a distributional regression approach based on beta regression.

翻译:我们为组件梯度推升提供了一种强化变量选择的新程序。 统计推升是一种计算方法,它产生于机器学习,它使得回归模型与高维数据相容。 此外,算法可以导致数据驱动变量选择。 然而,在实践中,最终模型通常在某些情况下包含过多变量。 特别是在低维数据(p<n),我们观测到一种缓慢的超适应推升行为。结果,更多的变量在不改变预测准确性的情况下被纳入最终模型。许多这些假正数与一个小系数相融合,因此具有小影响,但导致一个更大的模型。我们试图克服这一问题,给算法提供机会去掉基本利器,但重要性不大。我们分析了新方法对变量选择和预测性能的影响,与替代方法相比,包括用较早的停止和双振来加速。 我们用当前对慢性肾病病人组群研究的数据来说明我们的方法, 与健康有关生命质量的最具影响力的预测器是在一个基于贝形回归的分布式回归法中选择的。