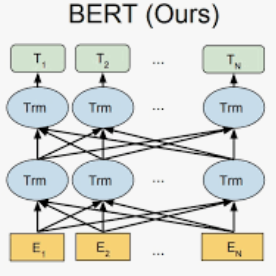

Masked language models (MLMs) such as BERT and RoBERTa have revolutionized the field of Natural Language Understanding in the past few years. However, existing pre-trained MLMs often output an anisotropic distribution of token representations that occupies a narrow subset of the entire representation space. Such token representations are not ideal, especially for tasks that require the representations of distinct tokens to be semantically distinguishable. In this work, we propose TaCL (Token-aware Contrastive Learning), a novel continual pre-training approach that encourages BERT to learn an isotropic and discriminative distribution of token representations. TaCL is fully unsupervised and requires no additional data. We extensively test our approach on a wide range of English and Chinese benchmarks. The results show that TaCL brings consistent and notable improvements over the original BERT model. Furthermore, we conduct a detailed analysis to reveal the merits and inner workings of our approach.
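To make the notion of anisotropy concrete, the following is a minimal sketch of one common diagnostic: the average cosine similarity between pairs of representation vectors. It is not taken from the TaCL paper. The function names (`cosine`, `mean_pairwise_cosine`) and the toy data are assumptions for illustration, and random vectors stand in for real BERT token representations. A score near 0 suggests directions spread evenly through the space; a score near 1 suggests the vectors are squeezed into a narrow cone.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mean_pairwise_cosine(vectors):
    """Average cosine similarity over all distinct pairs of vectors."""
    total, count = 0.0, 0
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            total += cosine(vectors[i], vectors[j])
            count += 1
    return total / count


# Toy data only (an assumption): these are NOT real model outputs.
rng = random.Random(0)
dim, n = 16, 50

# Roughly isotropic cloud: Gaussian noise centred on the origin.
isotropic = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n)]

# Anisotropic cloud: the same noise plus a large shared offset,
# so every vector points into the same narrow cone.
anisotropic = [[x + 5.0 for x in vec] for vec in isotropic]

iso_score = mean_pairwise_cosine(isotropic)
aniso_score = mean_pairwise_cosine(anisotropic)
print(f"isotropic cloud:   {iso_score:.3f}")
print(f"anisotropic cloud: {aniso_score:.3f}")
```

In practice the same measurement could be applied to token representations extracted from a pre-trained model before and after continual pre-training. The quadratic pair loop is fine for a toy example but would need sampling or vectorization at realistic scale.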
