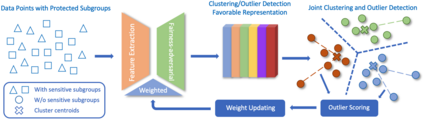

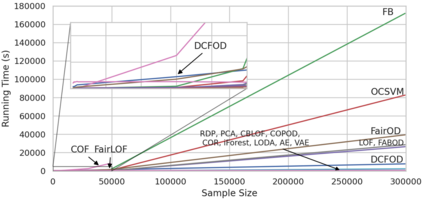

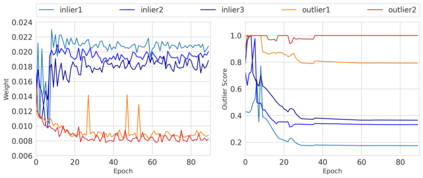

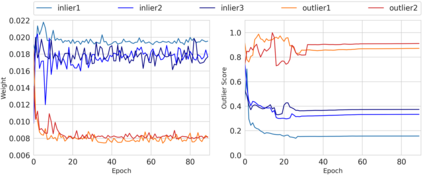

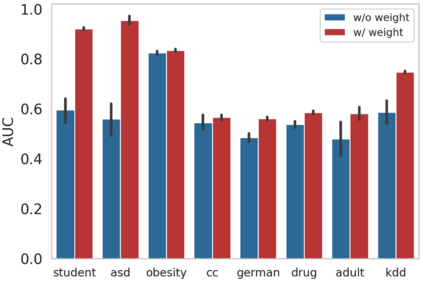

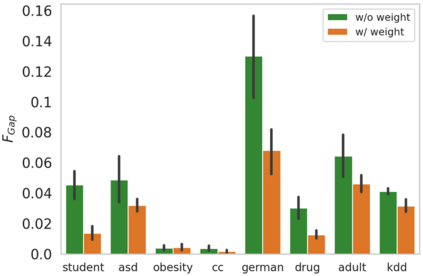

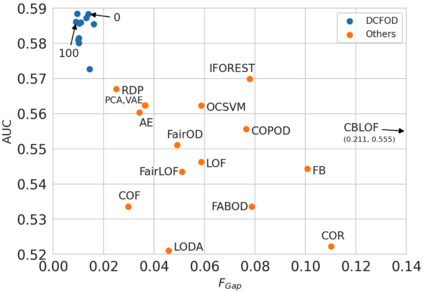

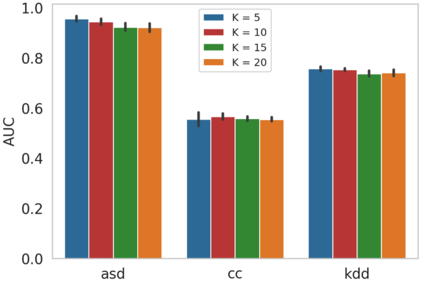

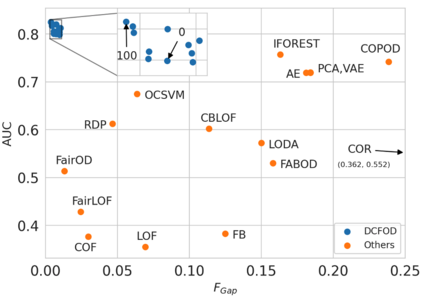

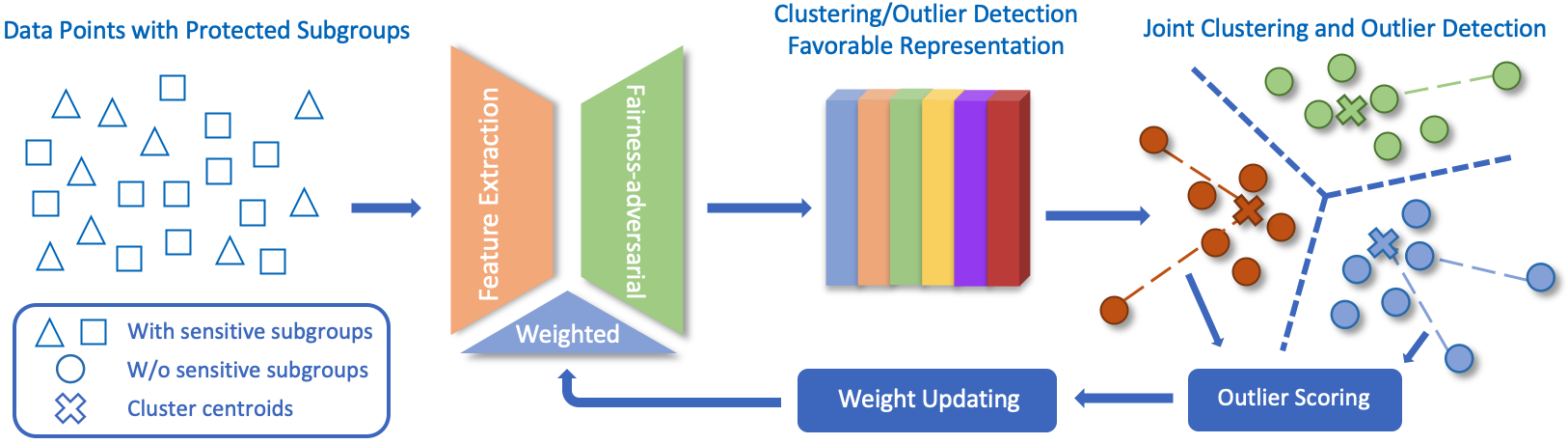

In this paper, we focus on fairness issues in unsupervised outlier detection. Traditional algorithms, which are not designed with algorithmic fairness in mind, can implicitly encode and propagate statistical bias in the data and raise societal concerns. To correct such unfairness and deliver a fair set of potential outlier candidates, we propose Deep Clustering based Fair Outlier Detection (DCFOD), which learns a good representation for utility maximization while enforcing the learned representation to be subgroup-invariant with respect to the sensitive attribute. Considering the coupled and reciprocal nature of clustering and outlier detection, we leverage deep clustering to discover the intrinsic cluster structure and out-of-structure instances. Meanwhile, adversarial training erases the sensitive pattern from instances for fairness adaptation. Technically, we propose an instance-level weighted representation learning strategy to enhance the joint deep clustering and outlier detection, in which a dynamic weight module re-emphasizes the contributions of likely inliers while mitigating the negative impact of outliers. In experiments on eight datasets against 17 outlier detection algorithms, DCFOD consistently achieves superior performance on both outlier detection validity and two types of fairness notions in outlier detection.
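The instance-level weighting idea can be illustrated with a toy sketch. This is not the DCFOD formulation, which the abstract does not spell out: the exponential weighting rule, the function names, and the use of fixed 2-D points in place of a learned deep representation are all assumptions made for illustration. The sketch scores each instance by its distance to the nearest cluster centroid, turns the scores into weights that favor likely inliers, and then updates the centroids with those weights so that a far-away point barely moves them.

```python
import math

def nearest_centroid(point, centroids):
    """Return (distance, index) of the centroid closest to `point`."""
    dists = [math.dist(point, c) for c in centroids]
    idx = min(range(len(dists)), key=dists.__getitem__)
    return dists[idx], idx

def instance_weights(scores):
    """Hypothetical rule (an assumption, not from the paper):
    weight proportional to exp(-score), normalized to sum to 1."""
    raw = [math.exp(-s) for s in scores]
    total = sum(raw)
    return [r / total for r in raw]

def weighted_step(points, centroids):
    """One weighted clustering step: score, weight, then update centroids."""
    scored = [nearest_centroid(p, centroids) for p in points]
    scores = [d for d, _ in scored]          # larger score = more outlier-like
    assign = [i for _, i in scored]
    weights = instance_weights(scores)

    dim = len(points[0])
    sums = [[0.0] * dim for _ in centroids]
    mass = [0.0] * len(centroids)
    for p, a, w in zip(points, assign, weights):
        mass[a] += w
        for d in range(dim):
            sums[a][d] += w * p[d]

    # Keep the old centroid if a cluster received no weight.
    new_centroids = [
        [s / mass[j] for s in sums[j]] if mass[j] > 0 else list(centroids[j])
        for j in range(len(centroids))
    ]
    return scores, weights, new_centroids

# Two tight groups plus one far-away point (index 5).
points = [(0.0, 0.0), (0.2, 0.0), (0.0, 0.2), (5.0, 5.0), (5.2, 5.0), (20.0, 20.0)]
centroids = [(0.0, 0.0), (5.0, 5.0)]
scores, weights, new_centroids = weighted_step(points, centroids)
```

In this toy setup the far-away point is assigned to the second cluster but receives a near-zero weight, so the updated centroid stays close to the two nearby points instead of being dragged toward (20, 20) as an unweighted mean would be. The fairness component described above (adversarial removal of the sensitive attribute) is not covered by this sketch.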
