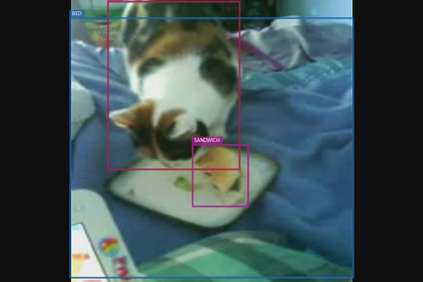

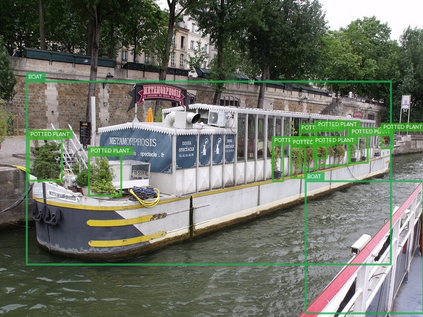

We propose a simple approach that combines the strengths of probabilistic graphical models and deep learning architectures for the multi-label classification task, focusing specifically on image and video data. First, we show that previous approaches combining Markov random fields with neural networks can be modestly improved by using more powerful methods such as iterative join graph propagation, integer linear programming, and $\ell_1$-regularization-based structure learning. We then propose a new modeling framework called deep dependency networks, which attaches a dependency network to the output layer of a neural network. A dependency network is easy to train and learns more accurate dependencies, but it is limited to Gibbs sampling for inference. We show that, despite its simplicity, jointly learning this new architecture yields significant performance improvements over the baseline neural network. In particular, our experimental evaluation on three video activity classification datasets, namely Charades, Textually Annotated Cooking Scenes (TACoS), and Wetlab, and on three multi-label image classification datasets, namely MS-COCO, PASCAL VOC, and NUS-WIDE, shows that deep dependency networks are almost always superior to pure neural architectures that do not use dependency networks.
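To make the inference side of this architecture more concrete, here is a minimal Python sketch of Gibbs sampling over a dependency-network output layer. It assumes, purely for illustration, that each label's conditional is a logistic function of the neural network's score for that label plus a weighted sum of the other labels. The class `DependencyNetworkHead`, its parameters `a`, `V`, `b`, and the method `gibbs_marginals` are hypothetical names, not the authors' implementation, and the sketch covers inference only, not joint training with the network.

```python
import math
import random
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class DependencyNetworkHead:
    """Hypothetical dependency-network output layer over n binary labels.

    Assumed form of each label's conditional:
        P(y_i = 1 | s, y_{-i}) = sigmoid(a[i] * s[i] + sum_{j != i} V[i][j] * y[j] + b[i])
    where s holds the per-label scores produced by a neural network.
    Each conditional is a separate logistic model, which is what makes
    a dependency network easy to train, but inference needs sampling.
    """

    def __init__(self, a: Sequence[float], V: Sequence[Sequence[float]], b: Sequence[float]):
        self.a = list(a)
        self.V = [list(row) for row in V]
        self.b = list(b)
        self.n = len(self.b)

    def conditional(self, i: int, scores: Sequence[float], y: Sequence[int]) -> float:
        """P(y_i = 1) given the network scores and the current other labels."""
        z = self.a[i] * scores[i] + self.b[i]
        for j in range(self.n):
            if j != i:
                z += self.V[i][j] * y[j]
        return sigmoid(z)

    def gibbs_marginals(
        self,
        scores: Sequence[float],
        num_samples: int = 2000,
        burn_in: int = 200,
        seed: int = 0,
    ) -> List[float]:
        """Estimate P(y_i = 1) for every label by Gibbs sampling."""
        rng = random.Random(seed)
        # Initialize the chain from the thresholded network scores.
        y = [1 if s > 0 else 0 for s in scores]
        counts = [0] * self.n
        for sweep in range(burn_in + num_samples):
            # One sweep resamples every label in a fixed order from its
            # conditional, using the most recent values of the other labels.
            for i in range(self.n):
                p = self.conditional(i, scores, y)
                y[i] = 1 if rng.random() < p else 0
            # Only sweeps after burn-in contribute to the marginal estimates.
            if sweep >= burn_in:
                for i in range(self.n):
                    counts[i] += y[i]
        return [c / num_samples for c in counts]


if __name__ == "__main__":
    # Label 1 has a neutral score but depends strongly on label 0.
    head = DependencyNetworkHead(a=[1.0, 1.0], V=[[0.0, 0.0], [4.0, 0.0]], b=[0.0, -2.0])
    print(head.gibbs_marginals([3.0, 0.0]))
```

With these illustrative weights, label 0 is on with probability about 0.95, so the second label's marginal should come out at about 0.84, compared with about 0.12 if `V` were all zeros. This shows how the dependency layer lets a confidently predicted label raise the probability of a related one.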

翻译:我们提出一个简单的方法,将概率图形模型和深学习结构的优势结合起来,解决多标签分类任务,特别侧重于图像和视频数据。首先,我们表明,通过利用更强大的方法,例如迭接组合图形传播、整线性编程和1美元基于正规化的结构学习等,可以适度地改进将Markov随机场与神经网络相结合的先前方法的性能。然后,我们提出一个新的建模框架,称为深依赖性网络,扩大依赖性网络,这种模型易于培训和学习,但仅限于Gibs的推断抽样,限于神经网络的产出层。我们表明,尽管这种新结构简单,但共同学习在基线神经网络的性能方面带来显著的改进。特别是,我们对三个视频活动分类数据集的实验性评估:Charades、用文字附加说明的烹饪环境(TaCos)和Wetlab,以及三个多标签的图像分类数据集:MS-CO、PASAL VOC和NUS-WIDE,它们几乎总是使用更高级的依赖性网络。