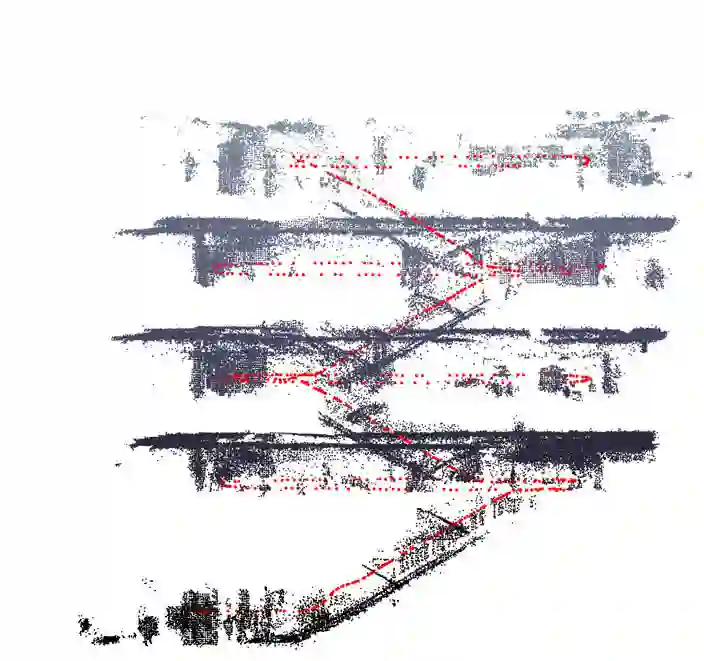

The lack of realistic and open benchmarking datasets for pedestrian visual-inertial odometry has made it hard to pinpoint differences between published methods. Existing datasets either lack full six-degree-of-freedom ground truth or are limited to small spaces covered by optical tracking systems. We take advantage of advances in pure inertial navigation and develop a set of versatile and challenging real-world computer vision benchmark sets for visual-inertial odometry. For this purpose, we have built a test rig equipped with an iPhone, a Google Pixel Android phone, and a Google Tango device. We provide a wide range of raw sensor data that is accessible on almost any modern-day smartphone, together with a high-quality ground-truth track. We also compare the resulting visual-inertial tracks from Google Tango, ARCore, and Apple ARKit with two recent methods published in academic forums. The datasets cover both indoor and outdoor cases, with stairs, escalators, elevators, office environments, a shopping mall, and a metro station.
