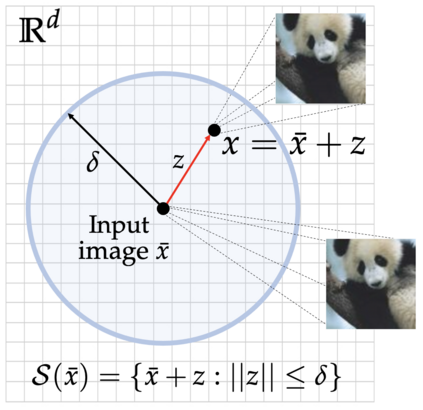

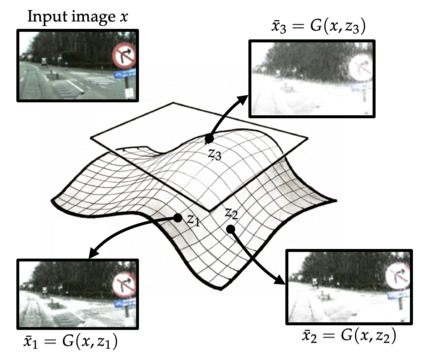

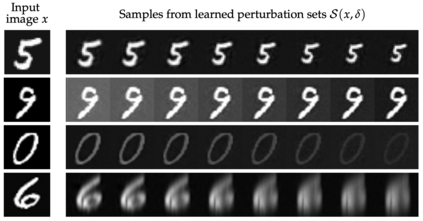

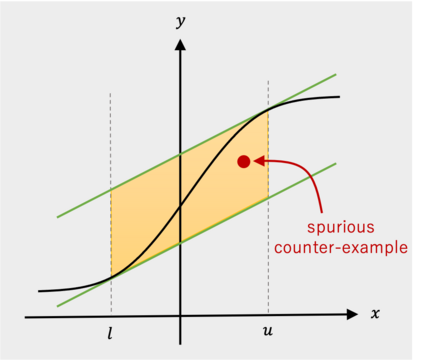

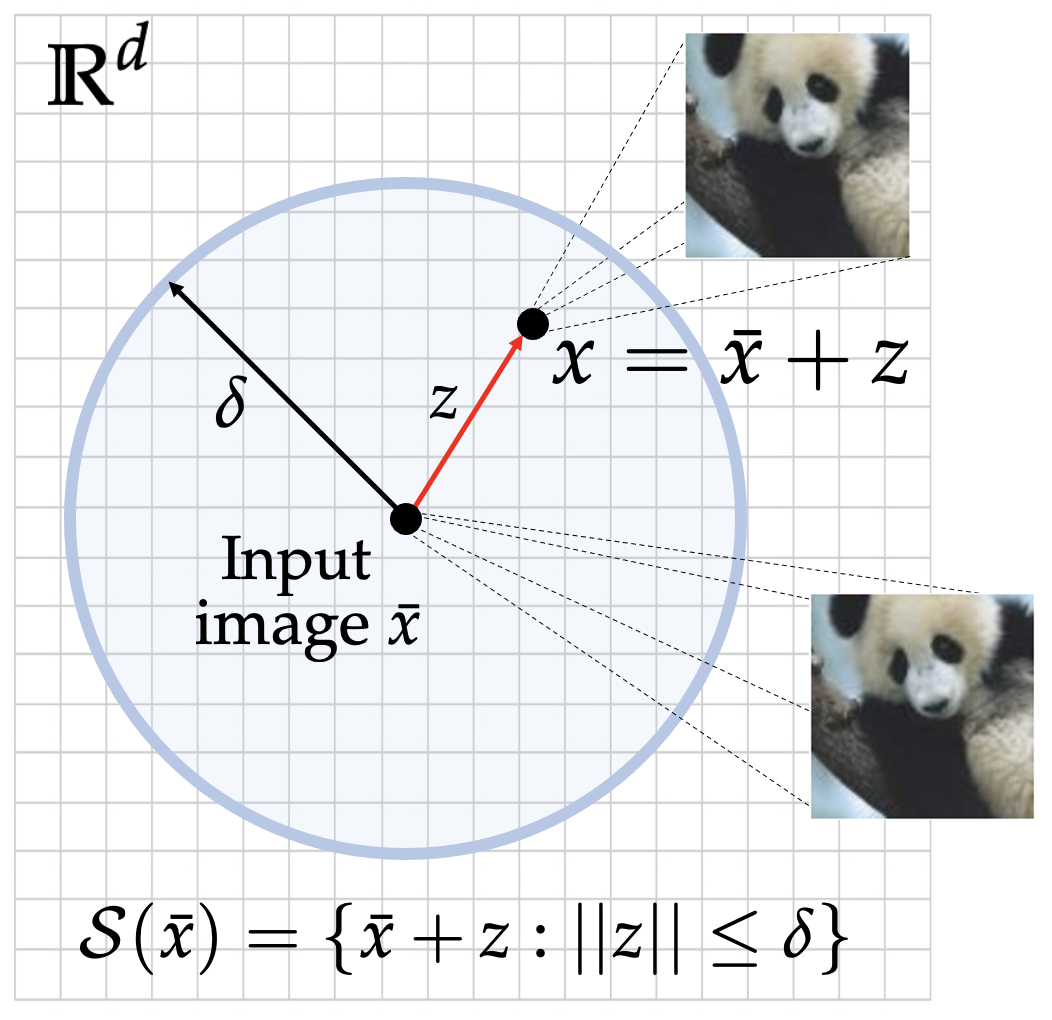

We consider the problem of certifying the robustness of deep neural networks against real-world distribution shifts. To do so, we bridge the gap between hand-crafted specifications and realistic deployment settings by proposing a novel neural-symbolic verification framework, in which we train a generative model to learn perturbations from data and define specifications with respect to the output of the learned model. A unique challenge arising from this setting is that existing verifiers cannot tightly approximate sigmoid activations, which are fundamental to many state-of-the-art generative models. To address this challenge, we propose a general meta-algorithm for handling sigmoid activations which leverages classical notions of counter-example-guided abstraction refinement. The key idea is to "lazily" refine the abstraction of sigmoid functions to exclude spurious counter-examples found in the previous abstraction, thus guaranteeing progress in the verification process while keeping the state-space small. Experiments on the MNIST and CIFAR-10 datasets show that our framework significantly outperforms existing methods on a range of challenging distribution shifts.
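The lazy refinement idea can be illustrated with a toy sketch. It is not the paper's algorithm. It assumes a single sigmoid, a hand-picked property of the form `sigmoid(x) - slope * x <= bound`, and a coarse box abstraction (monotonicity bounds per segment) in place of the tighter piecewise-linear bounds a real verifier would use. The names `verify`, `slope`, `bound`, and `max_refinements` are hypothetical, and floating-point rounding is ignored.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def verify(lo, hi, slope, bound, max_refinements=1000):
    """Try to prove sigmoid(x) - slope * x <= bound for all x in [lo, hi].

    Illustrative assumption: slope >= 0, so the abstract maximum on a
    segment [p, q] is attained at x = p with y = sigmoid(q).
    Returns (status, witness, number_of_segments).
    """
    # Start from the coarsest abstraction: one segment covering the domain.
    segments = [(lo, hi)]
    for _ in range(max_refinements):
        # Abstract check: on [p, q] the sigmoid output is only known to lie
        # in [sigmoid(p), sigmoid(q)], because sigmoid is increasing.
        violating = None
        for i, (p, q) in enumerate(segments):
            if sigmoid(q) - slope * p > bound:
                violating = i
                break
        if violating is None:
            # No abstract counter-example, so the property holds.
            return "verified", None, len(segments)

        p, q = segments[violating]
        # Concretise the abstract counter-example (x = p, y = sigmoid(q)).
        if sigmoid(p) - slope * p > bound:
            return "falsified", p, len(segments)

        # Spurious: refine only this segment. After the split, the left half
        # bounds y by sigmoid(mid) < sigmoid(q), which excludes the point.
        mid = 0.5 * (p + q)
        segments[violating:violating + 1] = [(p, mid), (mid, q)]
    return "unknown", None, len(segments)


if __name__ == "__main__":
    print(verify(0.0, 4.0, 0.25, 0.55))   # property holds on [0, 4]
    print(verify(-2.0, 4.0, 0.25, 0.55))  # violated at x = -2
```

Only segments that produce a spurious counter-example are split, so regions where the coarse bounds already suffice stay coarse. That is the sense in which the refinement is lazy and the abstraction stays small.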
