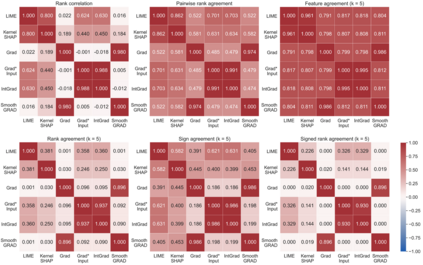

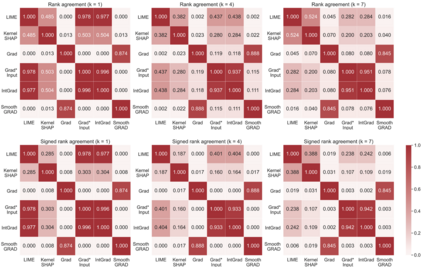

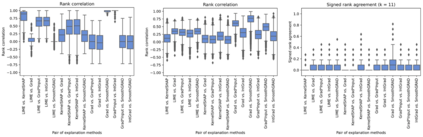

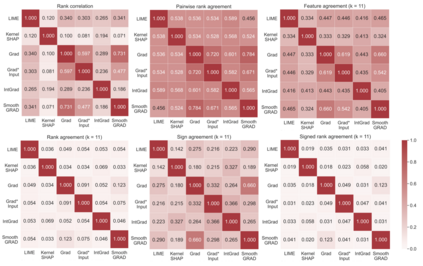

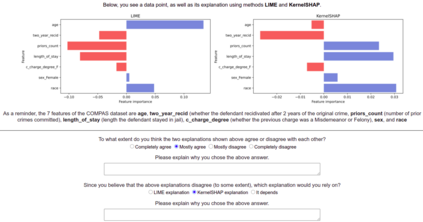

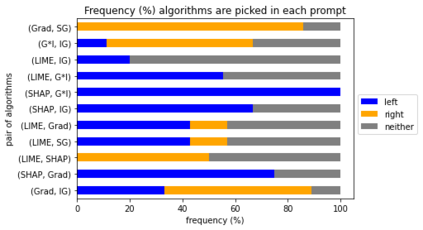

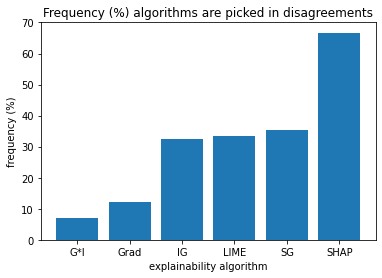

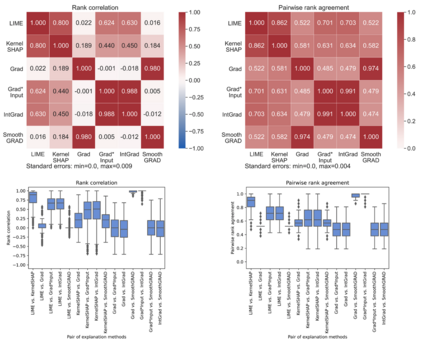

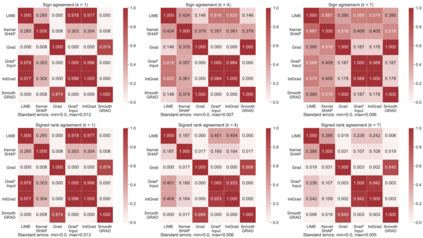

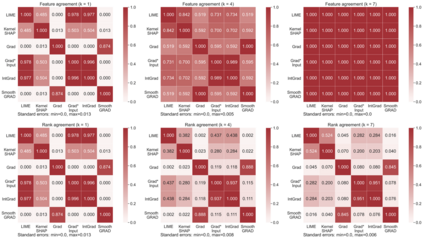

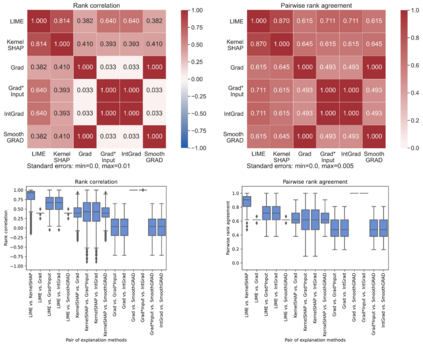

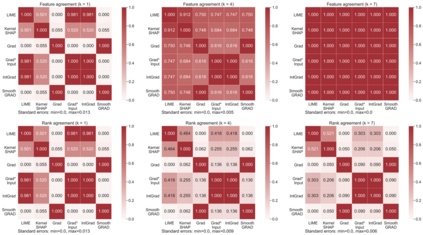

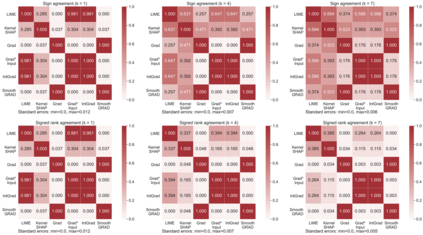

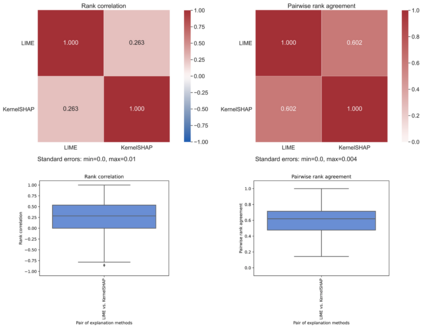

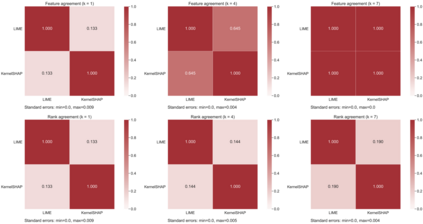

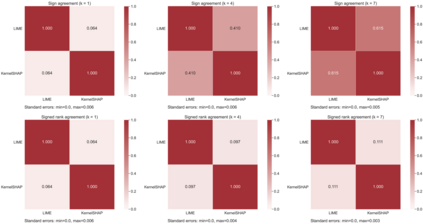

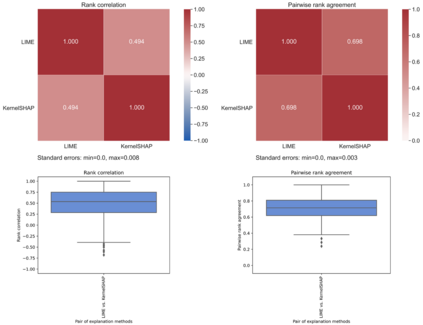

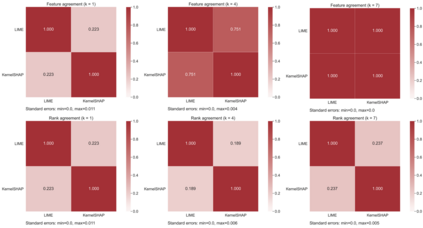

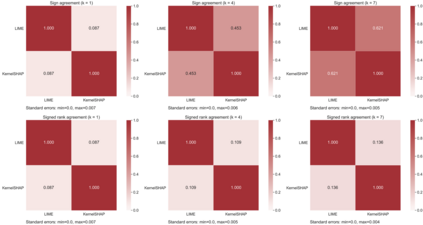

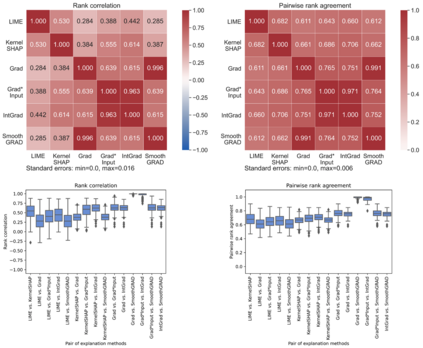

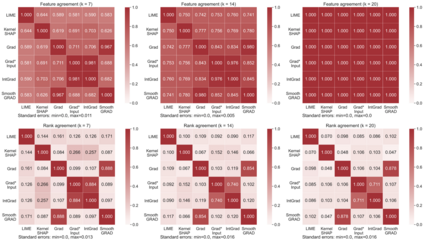

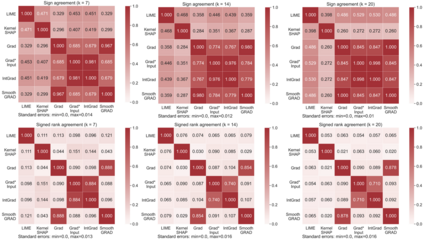

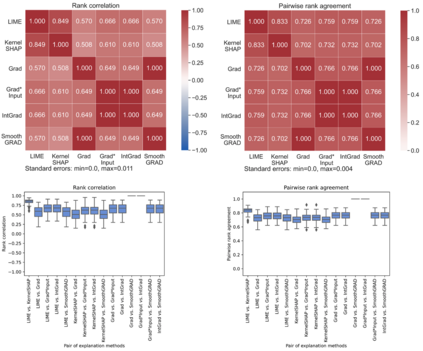

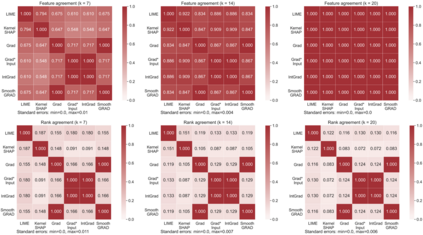

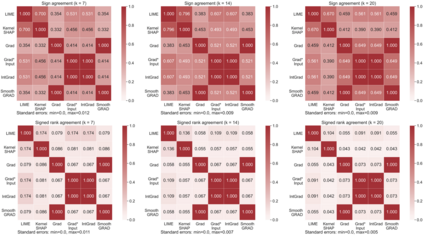

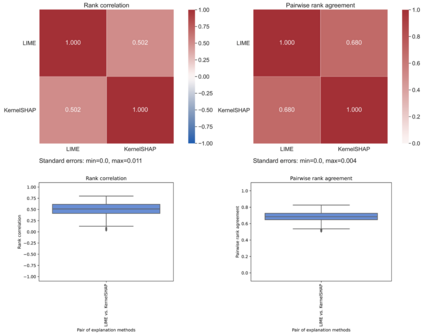

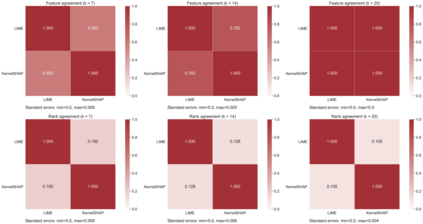

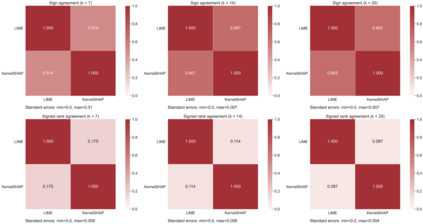

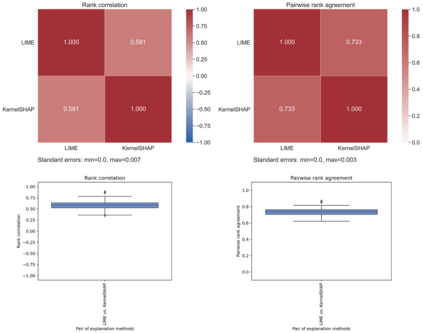

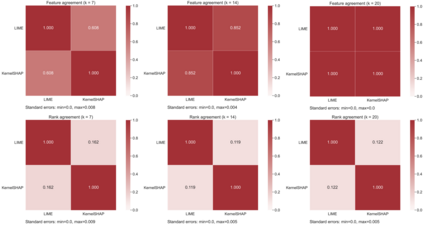

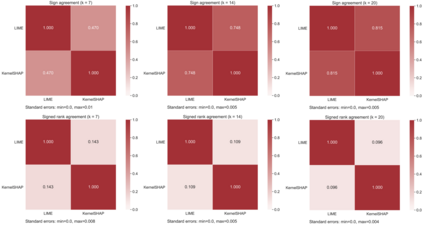

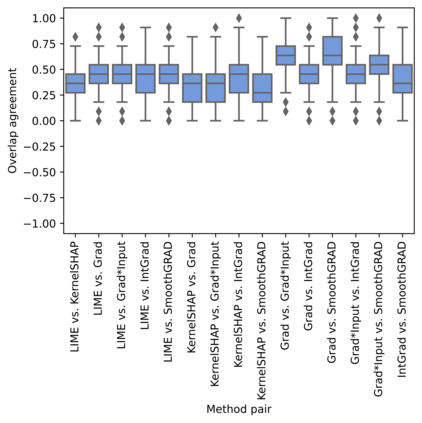

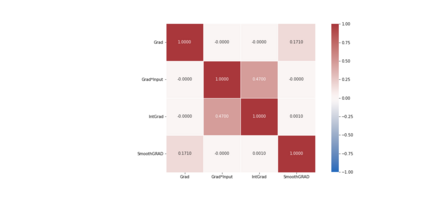

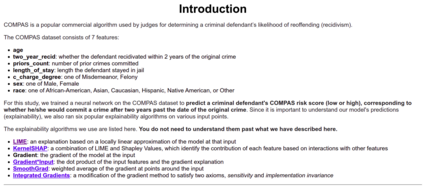

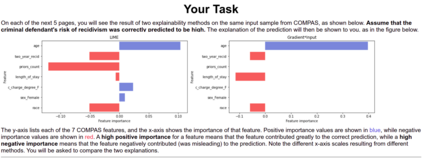

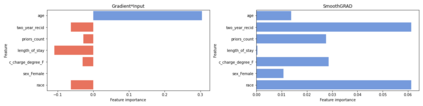

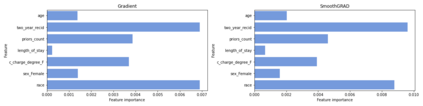

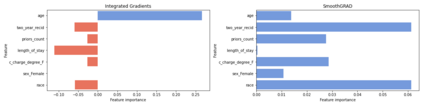

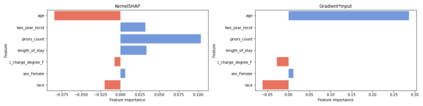

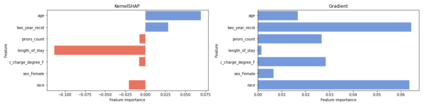

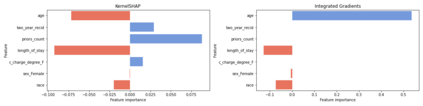

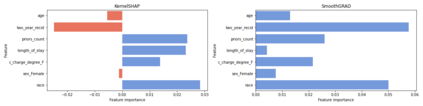

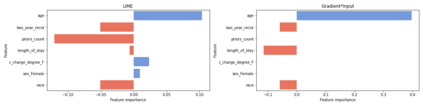

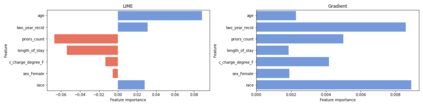

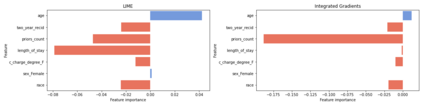

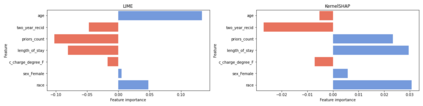

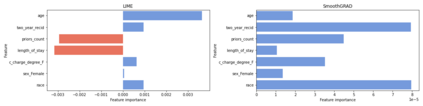

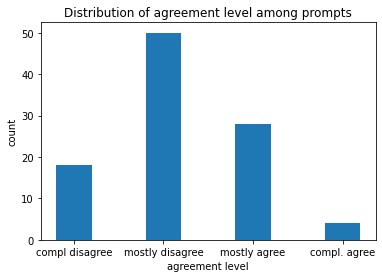

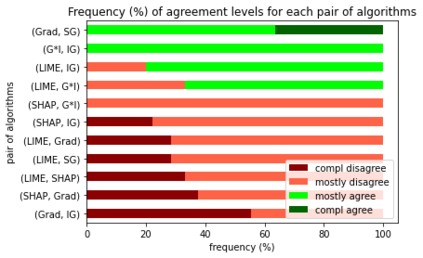

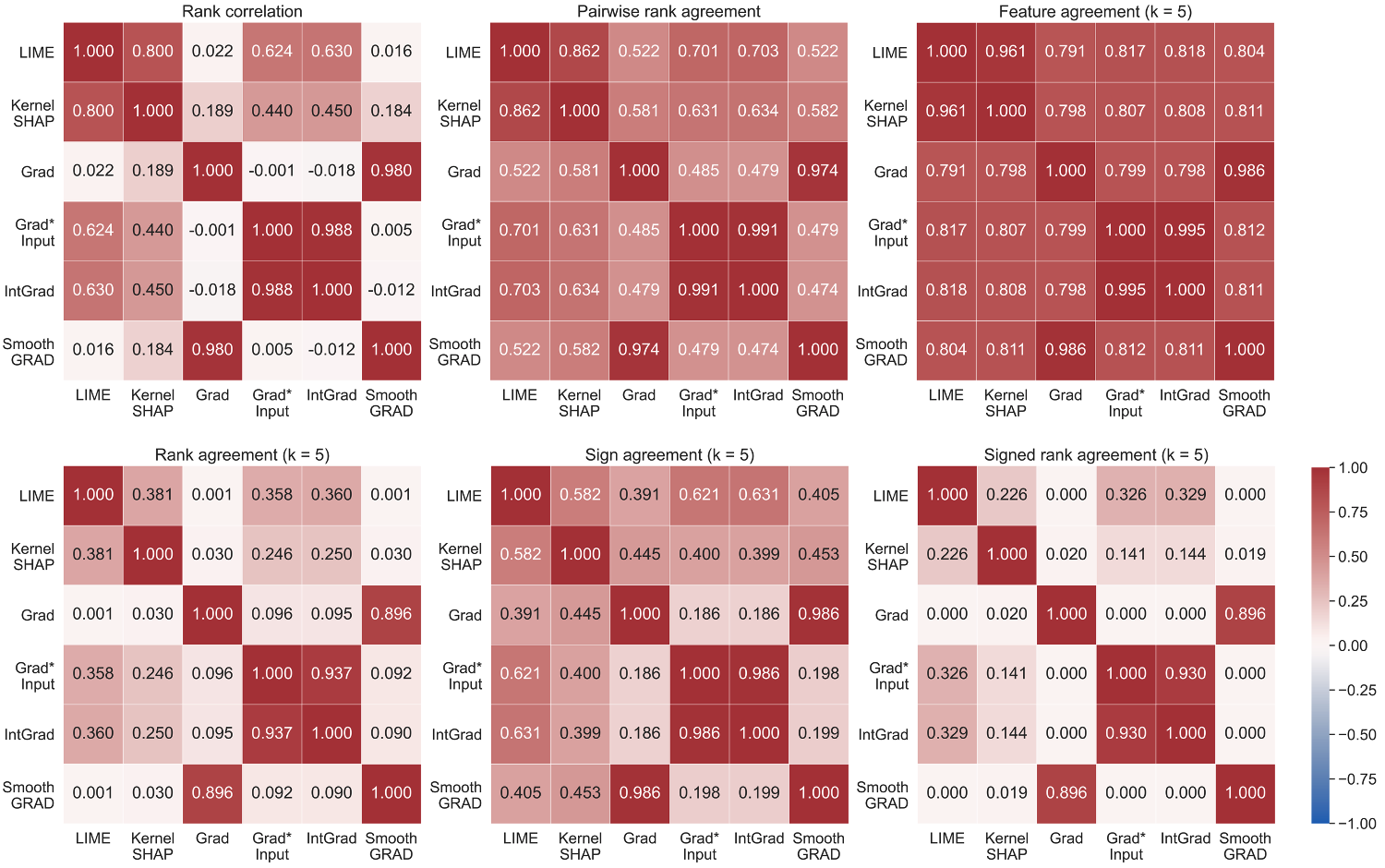

As post hoc explanation methods are increasingly used to explain complex models in high-stakes settings, it becomes critical to understand whether and when the explanations output by these methods disagree with each other, and how such disagreements are resolved in practice. However, there is little to no research that answers these questions. In this work, we introduce and study the disagreement problem in explainable machine learning. More specifically, we formalize the notion of disagreement between explanations, analyze how often such disagreements occur in practice, and examine how practitioners resolve them. To this end, we first conduct interviews with data scientists to understand what constitutes disagreement between explanations generated by different methods for the same model prediction, and introduce a novel quantitative framework to formalize this understanding. We then leverage this framework to carry out a rigorous empirical analysis with four real-world datasets, six state-of-the-art post hoc explanation methods, and eight different predictive models, measuring the extent of disagreement between the explanations generated by popular explanation methods. In addition, we carry out an online user study with data scientists to understand how they resolve these disagreements. Our results indicate that state-of-the-art explanation methods often disagree in the explanations they output. Our findings underscore the importance of developing principled evaluation metrics that enable practitioners to effectively compare explanations.
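To make the idea of quantifying disagreement concrete, here is a minimal sketch of one possible measure: the fraction of top-k features shared by two explanations of the same prediction. The abstract does not spell out the framework's metrics, so the function names, the choice of a top-k overlap, and the attribution values below are all illustrative assumptions rather than the authors' definitions.

```python
def top_k_features(attributions, k):
    """Return the indices of the k features with the largest absolute attribution."""
    # Sort feature indices by descending |attribution|; Python's sort is stable,
    # so ties keep their original index order.
    order = sorted(range(len(attributions)), key=lambda i: -abs(attributions[i]))
    return set(order[:k])


def top_k_agreement(attr_a, attr_b, k):
    """Fraction of top-k features shared by two explanations (1.0 = same top-k set)."""
    if len(attr_a) != len(attr_b):
        raise ValueError("explanations must cover the same features")
    if not 1 <= k <= len(attr_a):
        raise ValueError("k must be between 1 and the number of features")
    shared = top_k_features(attr_a, k) & top_k_features(attr_b, k)
    return len(shared) / k


# Hypothetical attributions from two explanation methods for one prediction
# (made-up numbers, not results from the paper).
expl_a = [0.50, -0.30, 0.05, 0.10]
expl_b = [0.45, 0.02, -0.40, 0.08]

agreement = top_k_agreement(expl_a, expl_b, k=2)
print(f"top-2 agreement: {agreement:.2f}")
```

A score below 1.0 means the two methods highlight different features as most important. A measure like this ignores the sign and relative ordering of attributions, which a fuller framework would presumably also need to compare.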
