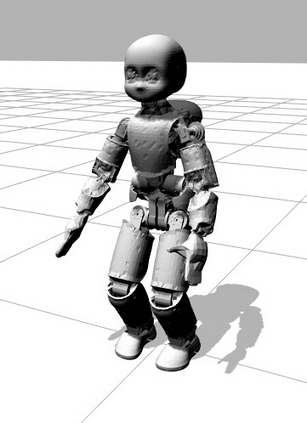

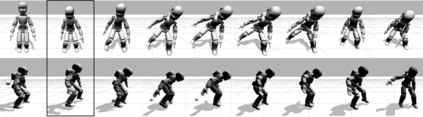

Balancing and push-recovery are essential capabilities enabling humanoid robots to solve complex locomotion tasks. In this context, classical control systems tend to be based on simplified physical models and hard-coded strategies. Although successful in specific scenarios, this approach requires demanding tuning of both the parameters and the switching logic between specifically designed controllers in order to handle more general perturbations. We apply model-free Deep Reinforcement Learning to train a general and robust humanoid push-recovery policy in a simulation environment. Our method targets high-dimensional whole-body humanoid control and is validated on the iCub humanoid. Reward components incorporating expert knowledge on humanoid control enable a single policy to quickly learn several robust behaviors that span the entire body. We validate our method with extensive quantitative analyses in simulation, including out-of-sample tasks that demonstrate policy robustness and generalization, both key requirements for real-world robot deployment.
