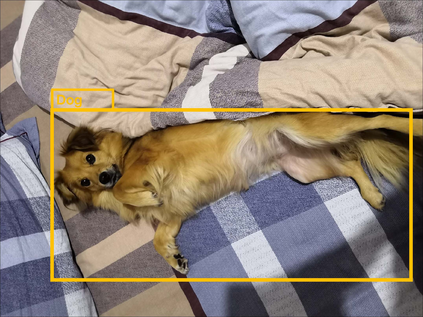

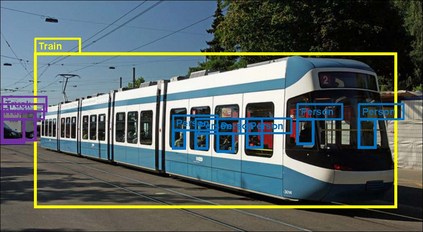

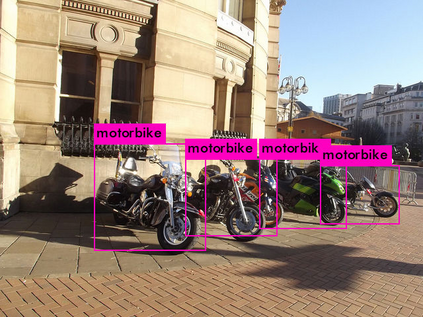

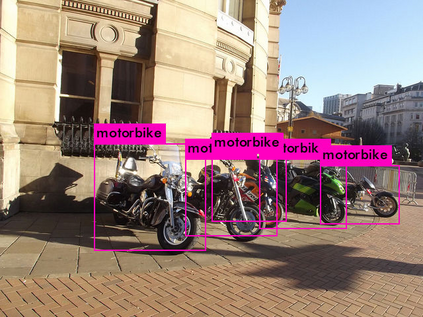

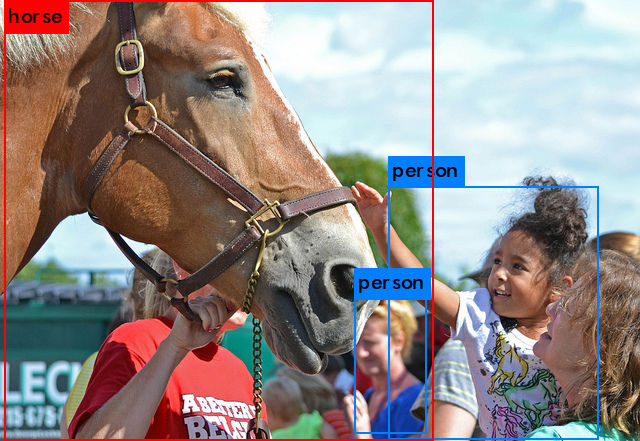

Bounding box regression is a crucial step in object detection. In existing methods, while $\ell_n$-norm loss is widely adopted for bounding box regression, it is not tailored to the evaluation metric, i.e., Intersection over Union (IoU). Recently, IoU loss and generalized IoU (GIoU) loss have been proposed to benefit the IoU metric, but they still suffer from slow convergence and inaccurate regression. In this paper, we propose a Distance-IoU (DIoU) loss by incorporating the normalized distance between the predicted box and the target box, which converges much faster in training than IoU and GIoU losses. Furthermore, this paper summarizes three geometric factors in bounding box regression, i.e., overlap area, central point distance and aspect ratio, based on which a Complete IoU (CIoU) loss is proposed, leading to faster convergence and better performance. By incorporating DIoU and CIoU losses into state-of-the-art object detection algorithms, e.g., YOLO v3, SSD and Faster RCNN, we achieve notable performance gains in terms of not only the IoU metric but also the GIoU metric. Moreover, DIoU can be easily adopted into non-maximum suppression (NMS) to act as the criterion, further boosting performance. The source code and trained models are available at https://github.com/Zzh-tju/DIoU.
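The abstract names the geometric factors but does not spell out the formulas, so the following is a minimal sketch of how the two losses are commonly written, not the authors' released implementation. It assumes axis-aligned boxes given as `(x1, y1, x2, y2)` with positive width and height. DIoU is taken as $1 - \mathrm{IoU} + \rho^2 / c^2$, where $\rho$ is the distance between box centers and $c$ is the diagonal of the smallest enclosing box; CIoU adds an aspect-ratio term $\alpha v$. The function names `iou_and_center_penalty`, `diou_loss` and `ciou_loss` are illustrative choices, not names from the repository.

```python
import math


def iou_and_center_penalty(box, gt):
    """Return (IoU, rho^2 / c^2) for two boxes in (x1, y1, x2, y2) form."""
    # Overlap area: the first geometric factor.
    inter_w = max(0.0, min(box[2], gt[2]) - max(box[0], gt[0]))
    inter_h = max(0.0, min(box[3], gt[3]) - max(box[1], gt[1]))
    inter = inter_w * inter_h
    area_box = (box[2] - box[0]) * (box[3] - box[1])
    area_gt = (gt[2] - gt[0]) * (gt[3] - gt[1])
    iou = inter / (area_box + area_gt - inter)

    # Squared distance between the two box centers: the second factor.
    dx = ((box[0] + box[2]) - (gt[0] + gt[2])) / 2.0
    dy = ((box[1] + box[3]) - (gt[1] + gt[3])) / 2.0
    rho2 = dx * dx + dy * dy

    # Squared diagonal of the smallest box enclosing both boxes,
    # used to normalize the center distance.
    enc_w = max(box[2], gt[2]) - min(box[0], gt[0])
    enc_h = max(box[3], gt[3]) - min(box[1], gt[1])
    c2 = enc_w * enc_w + enc_h * enc_h
    return iou, rho2 / c2


def diou_loss(box, gt):
    """DIoU loss: IoU loss plus the normalized center-distance penalty."""
    iou, penalty = iou_and_center_penalty(box, gt)
    return 1.0 - iou + penalty


def ciou_loss(box, gt):
    """CIoU loss: DIoU loss plus an aspect-ratio consistency term."""
    iou, penalty = iou_and_center_penalty(box, gt)
    w, h = box[2] - box[0], box[3] - box[1]
    w_gt, h_gt = gt[2] - gt[0], gt[3] - gt[1]
    # v measures aspect-ratio mismatch: the third geometric factor.
    v = (4.0 / math.pi ** 2) * (math.atan(w_gt / h_gt) - math.atan(w / h)) ** 2
    # alpha is the trade-off weight; guarded so identical boxes give zero.
    alpha = v / ((1.0 - iou) + v) if v > 0.0 else 0.0
    return 1.0 - iou + penalty + alpha * v
```

Unlike plain IoU loss, the distance penalty still provides a signal when the boxes do not overlap: for two disjoint unit squares the IoU term is constant, while `diou_loss` shrinks as the centers move closer. This sketch works on Python floats for readability; a training setup would need a batched, differentiable version in the framework of choice.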
