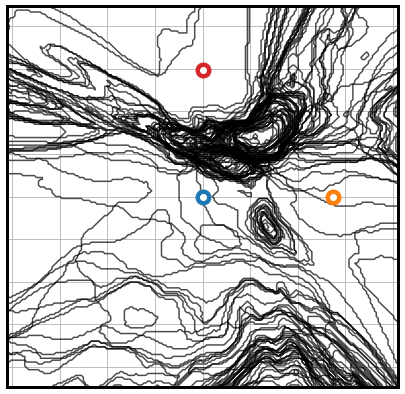

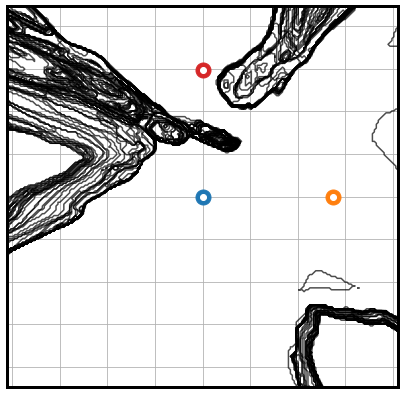

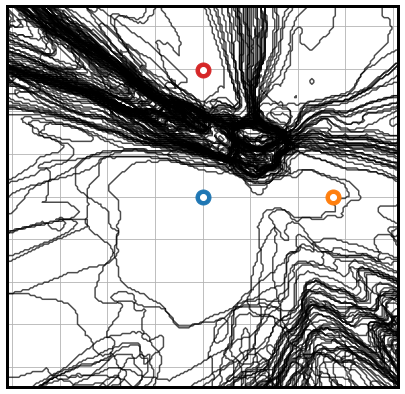

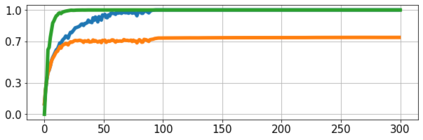

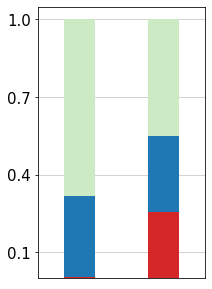

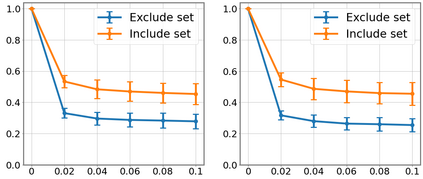

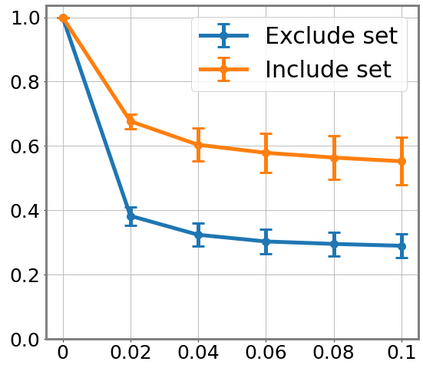

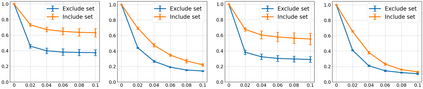

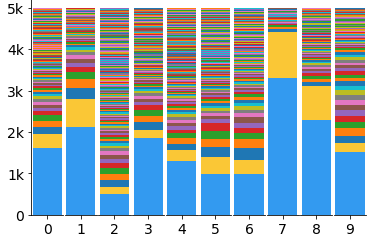

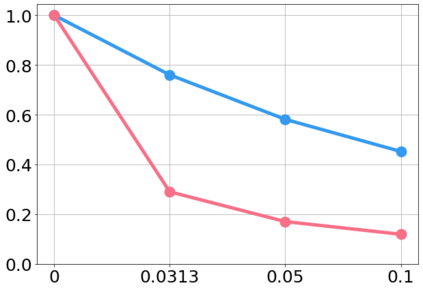

Deep Neural Networks (DNNs) are generally evaluated by their generalization performance, measured on unseen data held out from training. As DNNs have matured, generalization performance has converged toward the state of the art, making it difficult to evaluate DNNs on this metric alone. Robustness against adversarial attacks has therefore been adopted as an additional metric that measures how vulnerable a DNN is. However, few studies have analyzed adversarial robustness in terms of the geometry of DNNs. In this work, we perform an empirical study of the internal properties of DNNs that affect model robustness under adversarial attacks. In particular, we propose a novel concept, the Populated Region Set (PRS), consisting of the regions in which training samples are populated more frequently, to represent the internal properties of DNNs in a practical setting. Through systematic experiments with the proposed concept, we provide empirical evidence that a low PRS ratio is strongly associated with the adversarial robustness of DNNs. We also devise a PRS regularizer that leverages the characteristics of PRS to improve adversarial robustness without adversarial training.
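To make the PRS idea more concrete, the following is a minimal Python sketch under assumptions the abstract does not state: a "region" is identified by the binary ReLU on/off pattern of a single hidden layer, a region counts as populated once at least `min_count` training samples fall into it, and the PRS ratio is the number of populated regions divided by the number of training samples. The helper names (`activation_pattern`, `region_counts`, `populated_region_set`, `prs_ratio`) and the toy weights are hypothetical illustrations, not the authors' implementation.

```python
from collections import Counter
from typing import List, Sequence, Set, Tuple

Pattern = Tuple[int, ...]


def activation_pattern(x: Sequence[float],
                       weights: List[List[float]],
                       biases: List[float]) -> Pattern:
    """Return the on/off pattern of one ReLU layer for input x.

    Assumption: inputs sharing a pattern lie in the same linear region.
    A pre-activation of exactly 0 is treated as "off".
    """
    pattern = []
    for w_row, bias in zip(weights, biases):
        pre_activation = sum(w * xi for w, xi in zip(w_row, x)) + bias
        pattern.append(1 if pre_activation > 0 else 0)
    return tuple(pattern)


def region_counts(samples, weights, biases) -> Counter:
    """Count how many samples fall into each activation region."""
    return Counter(activation_pattern(x, weights, biases) for x in samples)


def populated_region_set(samples, weights, biases,
                         min_count: int = 1) -> Set[Pattern]:
    """Hypothetical PRS: regions containing at least `min_count` samples."""
    counts = region_counts(samples, weights, biases)
    return {region for region, n in counts.items() if n >= min_count}


def prs_ratio(samples, weights, biases, min_count: int = 1) -> float:
    """Hypothetical PRS ratio: |PRS| divided by the number of samples."""
    if not samples:
        raise ValueError("need at least one sample")
    prs = populated_region_set(samples, weights, biases, min_count)
    return len(prs) / len(samples)


if __name__ == "__main__":
    # Toy layer: two hidden units, identity weights, zero biases.
    W = [[1.0, 0.0], [0.0, 1.0]]
    B = [0.0, 0.0]
    X = [(1.0, 1.0), (2.0, 3.0), (-1.0, 1.0), (-1.0, -1.0)]
    print(prs_ratio(X, W, B))               # 0.75: 3 regions / 4 samples
    print(prs_ratio(X, W, B, min_count=2))  # 0.25: only region (1, 1) holds 2 samples
```

In the toy example the four samples land in three regions, so the ratio is 0.75; requiring two samples per region keeps only the region shared by `(1.0, 1.0)` and `(2.0, 3.0)`, giving 0.25. Under these assumptions, a lower ratio means training samples are concentrated in fewer regions.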
