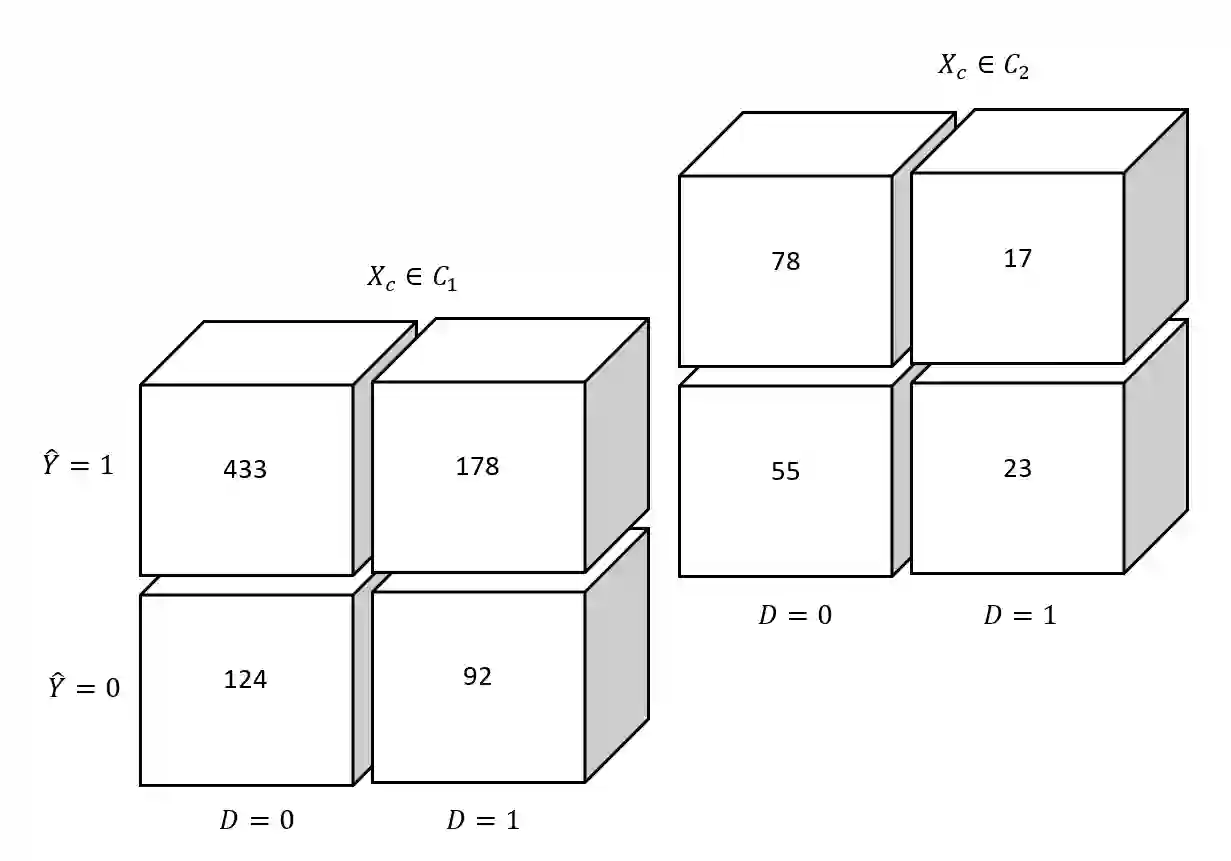

In credit markets, screening algorithms aim to discriminate between good-type and bad-type borrowers. However, when doing so, they also often discriminate between individuals sharing a protected attribute (e.g., gender, age, racial origin) and the rest of the population. In this paper, we show how (1) to test whether there exists a statistically significant difference between protected and unprotected groups, which we call a lack of fairness, and (2) to identify the variables that cause the lack of fairness. We then use these variables to optimize the fairness-performance trade-off. Our framework provides guidance on how algorithmic fairness can be monitored by lenders, controlled by their regulators, and improved for the benefit of protected groups.
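To make steps (1) and (2) concrete, here is a minimal sketch under stated assumptions; it is not necessarily the test or attribution method developed in the paper. It assumes fairness is measured by statistical parity (equal approval rates across groups) and tested with a standard two-proportion z-test. For step (2), it uses a deliberately crude, hypothetical attribution: each feature is replaced by its sample mean for every applicant, and the code records how much the approval-rate gap shrinks. The function names `parity_test` and `gap_contributions`, the scoring rule, the cut-off, and the toy data are all illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple


def parity_test(decisions: Sequence[int], protected: Sequence[bool]) -> Tuple[float, float, float]:
    """Two-proportion z-test of equal approval rates (statistical parity).

    decisions[i] is 1 if applicant i is granted credit, 0 otherwise.
    protected[i] is True if applicant i belongs to the protected group.
    Returns (gap, z, p_value) with gap = approval rate of the unprotected
    group minus approval rate of the protected group.
    """
    n1 = sum(1 for g in protected if g)
    n0 = len(protected) - n1
    if n1 == 0 or n0 == 0:
        raise ValueError("both groups must be non-empty")
    a1 = sum(d for d, g in zip(decisions, protected) if g)
    a0 = sum(d for d, g in zip(decisions, protected) if not g)
    gap = a0 / n0 - a1 / n1
    pooled = (a0 + a1) / (n0 + n1)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n0 + 1 / n1))
    if se == 0.0:
        # All applicants approved (or all rejected): the gap is zero.
        return gap, 0.0, 1.0
    z = gap / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return gap, z, p_value


def gap_contributions(
    score: Callable[[List[float]], float],
    X: List[List[float]],
    protected: Sequence[bool],
    cutoff: float,
) -> List[float]:
    """Hypothetical attribution: for each feature j, how much the approval-rate
    gap shrinks when feature j is replaced by its sample mean for everyone."""

    def gap_for(rows: List[List[float]]) -> float:
        # Approve when the score reaches the cut-off, then measure the gap.
        decisions = [1 if score(r) >= cutoff else 0 for r in rows]
        return parity_test(decisions, protected)[0]

    base = gap_for(X)
    contributions = []
    for j in range(len(X[0])):
        mean_j = sum(r[j] for r in X) / len(X)
        neutralized = [r[:j] + [mean_j] + r[j + 1:] for r in X]
        contributions.append(base - gap_for(neutralized))
    return contributions


if __name__ == "__main__":
    # Toy data (hypothetical): feature 0 is an income score, feature 1 is noise.
    X = [[0.2, 1.0], [0.3, 0.0], [0.6, 1.0], [0.4, 0.0],   # protected
         [0.7, 1.0], [0.8, 0.0], [0.6, 1.0], [0.3, 0.0]]   # unprotected
    protected = [True] * 4 + [False] * 4

    def score(r: List[float]) -> float:
        return r[0]  # hypothetical scoring rule: only income matters

    decisions = [1 if score(r) >= 0.5 else 0 for r in X]
    print(parity_test(decisions, protected))            # gap 0.5, z ≈ 1.414, p ≈ 0.157
    print(gap_contributions(score, X, protected, 0.5))  # [0.5, 0.0]
```

In this toy example the protected group's approval rate is 25% against 75% for the unprotected group, yet with only eight applicants the 50-point gap is not significant at the 5% level. This illustrates why a formal test, rather than a raw difference in rates, is needed. The mean-replacement attribution correctly singles out income as the driver of the gap, but it is blunt: replacing a feature by its mean can push many applicants across the cut-off at once, so a more careful attribution method would be preferable in practice.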
