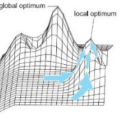

Machine Learning algorithms have been extensively researched throughout the last decade, leading to unprecedented advances in a broad range of applications, such as image classification and reconstruction, object recognition, and text categorization. Nonetheless, most Machine Learning algorithms are trained via derivative-based optimizers, such as Stochastic Gradient Descent, which can become trapped in local optima and thus fail to reach their best achievable performance. Meta-heuristics, a bio-inspired alternative to traditional optimization techniques, have received significant attention due to their simplicity and their ability to escape local optima. In this work, we propose using meta-heuristic techniques to fine-tune pre-trained weights, exploring additional regions of the search space and improving their effectiveness. The experimental evaluation comprises two classification tasks (image and text) and is conducted on four datasets from the literature. Experimental results show that nature-inspired algorithms can explore the neighborhood of pre-trained weights, achieving better results than their pre-trained counterparts. Additionally, a thorough analysis of distinct architectures, such as Multi-Layer Perceptrons and Recurrent Neural Networks, attempts to visualize and provide more precise insights into the most critical weights to be fine-tuned in the learning process.
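
To make the idea of searching the neighborhood of pre-trained weights more concrete, the following is a minimal sketch under stated assumptions, not the authors' implementation. It uses Particle Swarm Optimization only as a familiar example of a meta-heuristic; the abstract does not name the specific algorithms used. The function `pso_fine_tune`, its parameters (`radius`, `inertia`, `c1`, `c2`), and the quadratic `toy_loss` are all hypothetical and stand in for a real network and its validation loss.

```python
import random


def pso_fine_tune(pretrained, loss_fn, n_particles=10, n_iters=50,
                  radius=0.1, inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Search a box of half-width `radius` around `pretrained` with a basic PSO.

    Hypothetical helper for illustration; all hyperparameters are assumptions.
    """
    rng = random.Random(seed)
    dim = len(pretrained)
    lower = [w - radius for w in pretrained]
    upper = [w + radius for w in pretrained]

    # The first particle is the pre-trained solution itself, so the returned
    # loss can never be worse than the starting point.
    positions = [list(pretrained)]
    for _ in range(n_particles - 1):
        positions.append([rng.uniform(lower[d], upper[d]) for d in range(dim)])
    velocities = [[0.0] * dim for _ in range(n_particles)]

    # Personal bests and the global best.
    best_pos = [list(p) for p in positions]
    best_loss = [loss_fn(p) for p in positions]
    g = min(range(n_particles), key=lambda i: best_loss[i])
    g_pos, g_loss = list(best_pos[g]), best_loss[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                velocities[i][d] = (
                    inertia * velocities[i][d]
                    + c1 * r1 * (best_pos[i][d] - positions[i][d])
                    + c2 * r2 * (g_pos[d] - positions[i][d])
                )
                # Clamp each coordinate so the search stays near the
                # pre-trained weights.
                new_value = positions[i][d] + velocities[i][d]
                positions[i][d] = min(upper[d], max(lower[d], new_value))
            loss = loss_fn(positions[i])
            if loss < best_loss[i]:
                best_loss[i] = loss
                best_pos[i] = list(positions[i])
                if loss < g_loss:
                    g_loss = loss
                    g_pos = list(positions[i])
    return g_pos, g_loss


def toy_loss(w):
    # Stand-in for a validation loss; its minimum is at (1.0, -2.0).
    return (w[0] - 1.0) ** 2 + (w[1] + 2.0) ** 2


# "Pre-trained" weights that a gradient-based optimizer left slightly off.
pretrained = [0.95, -1.90]
tuned, tuned_loss = pso_fine_tune(pretrained, toy_loss)
print(toy_loss(pretrained), tuned_loss)
```

In a real setting, `loss_fn` would evaluate the network on held-out data with the candidate weights loaded, which makes every evaluation far more expensive than in this toy case. Bounding the search to a small box is one simple way to express "neighborhood"; other choices, such as perturbing only selected layers, are equally plausible readings of the abstract.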
