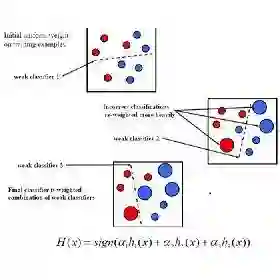

We study boosting algorithms from a new perspective. We show that the Lagrange dual problems of AdaBoost, LogitBoost and soft-margin LPBoost with generalized hinge loss are all entropy maximization problems. By looking at the dual problems of these boosting algorithms, we show that the success of boosting algorithms can be understood in terms of maintaining a better margin distribution by maximizing margins and at the same time controlling the margin variance. We also theoretically prove that, approximately, AdaBoost maximizes the average margin, instead of the minimum margin. The duality formulation also enables us to develop column-generation-based optimization algorithms, which are totally corrective. We show that they produce classification results almost identical to those of standard stage-wise additive boosting algorithms, but with much faster convergence rates. Therefore, fewer weak classifiers are needed to build the ensemble using our proposed optimization technique.
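
The margin quantities the abstract refers to (minimum margin, average margin, and margin variance) can be made concrete with a small sketch. This is an illustration only, not the paper's algorithm: the helper names `margins` and `margin_summary`, the toy data, and the convention of normalizing the margin by the sum of the (assumed non-negative) weights are all assumptions introduced here.

```python
from statistics import mean, pvariance


def margins(H, y, w):
    """Normalized margins y_i * sum_j w_j * h_j(x_i) / sum_j w_j.

    H[i][j] is the output of weak classifier j on example i (in {-1, +1}),
    y[i] is the label in {-1, +1}, and w[j] >= 0 is the ensemble weight.
    """
    total = sum(w)
    return [
        yi * sum(wj * hij for wj, hij in zip(w, row)) / total
        for row, yi in zip(H, y)
    ]


def margin_summary(H, y, w):
    """Summarize the margin distribution of a weighted ensemble."""
    m = margins(H, y, w)
    return {"min": min(m), "mean": mean(m), "variance": pvariance(m)}


# Hypothetical toy data: 4 examples, 3 weak classifiers.
H = [
    [1, 1, -1],
    [1, -1, 1],
    [-1, -1, -1],
    [-1, 1, -1],
]
y = [1, 1, -1, -1]
w = [0.5, 0.25, 0.25]

summary = margin_summary(H, y, w)
print(summary)
```

Comparing these three numbers for two ensembles trained on the same data is one simple way to see whether a method favors a large minimum margin or a large average margin with small variance.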
