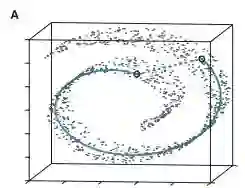

A fundamental task in robotics is to navigate between two locations. In particular, real-world navigation can require long-horizon planning using high-dimensional RGB images, which poses a substantial challenge for end-to-end learning-based approaches. Current semi-parametric methods instead achieve long-horizon navigation by combining learned modules with a topological memory of the environment, often represented as a graph over previously collected images. However, using these graphs in practice typically involves tuning a number of pruning heuristics to avoid spurious edges, limit runtime memory usage and allow reasonably fast graph queries. In this work, we present One-4-All (O4A), a method leveraging self-supervised and manifold learning to obtain a graph-free, end-to-end navigation pipeline in which the goal is specified as an image. Navigation is achieved by greedily minimizing a potential function defined continuously over the O4A latent space. Our system is trained offline on non-expert exploration sequences of RGB data and controls, and does not require any depth or pose measurements. We show that O4A can reach long-range goals in 8 simulated Gibson indoor environments, and further demonstrate successful real-world navigation using a Jackal UGV platform.

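The abstract describes navigation as greedily minimizing a potential function over a latent space. A minimal sketch of that control loop follows. It is a toy illustration, not the O4A implementation: the 2-D "latent" state, the `ACTIONS` table, the hand-written `predict_next` forward model, and the Euclidean `potential` are all assumptions standing in for the learned components, which the abstract does not specify.

```python
import math

# Hypothetical discrete action set: each action shifts a 2-D toy latent state.
ACTIONS = {
    "forward": (0.0, 1.0),
    "back": (0.0, -1.0),
    "left": (-1.0, 0.0),
    "right": (1.0, 0.0),
}


def potential(state, goal):
    """Toy potential (assumption): Euclidean distance to the goal latent."""
    return math.dist(state, goal)


def predict_next(state, action):
    """Toy forward model (assumption): add the action's displacement."""
    dx, dy = ACTIONS[action]
    return (state[0] + dx, state[1] + dy)


def greedy_step(state, goal):
    """Pick the action whose predicted next state has the lowest potential."""
    return min(ACTIONS, key=lambda a: potential(predict_next(state, a), goal))


def navigate(start, goal, max_steps=50, tol=1e-9):
    """Apply greedy steps until the potential reaches ~0 or steps run out."""
    state = start
    path = [state]
    for _ in range(max_steps):
        if potential(state, goal) <= tol:
            break
        state = predict_next(state, greedy_step(state, goal))
        path.append(state)
    return path


if __name__ == "__main__":
    for waypoint in navigate((0.0, 0.0), (2.0, 3.0)):
        print(waypoint)
```

In this toy setting the potential has a single minimum at the goal, so greedy descent always arrives. With a general potential, a greedy policy can stall in a local minimum, so the shape of the potential matters as much as the controller itself.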