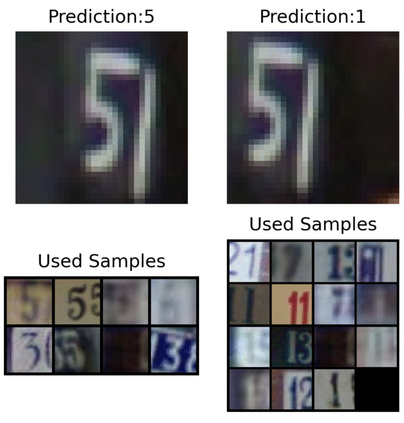

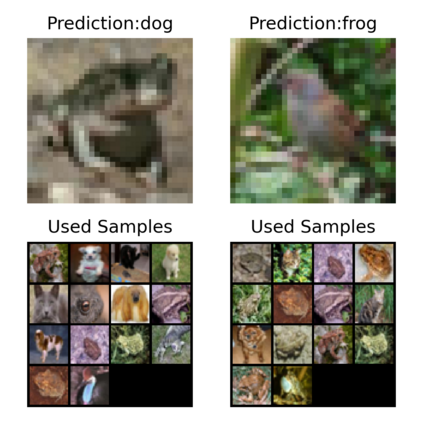

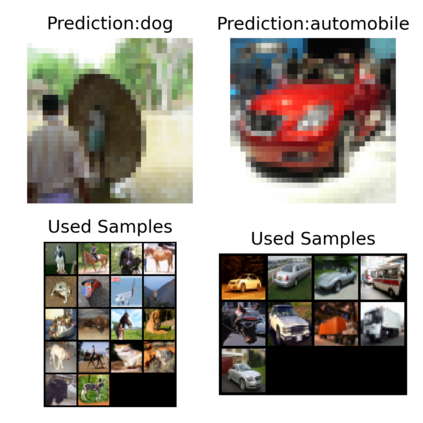

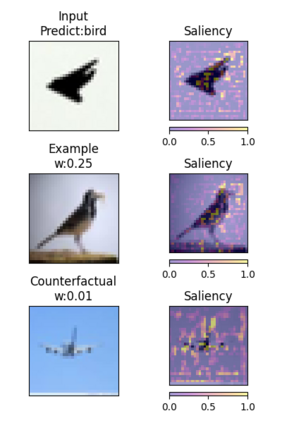

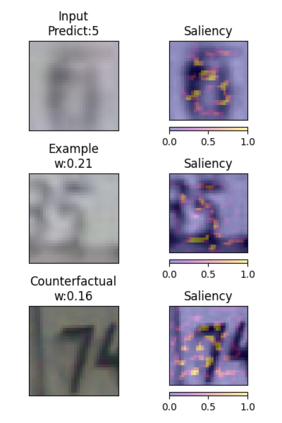

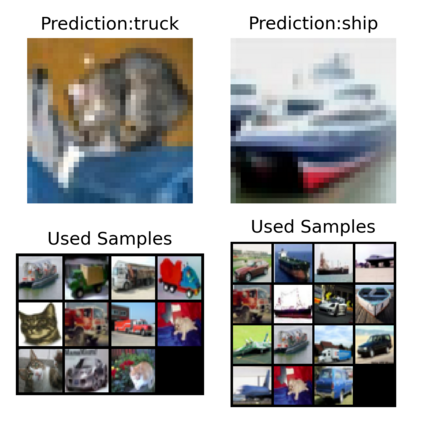

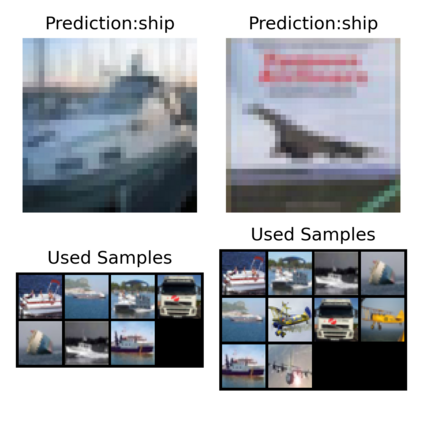

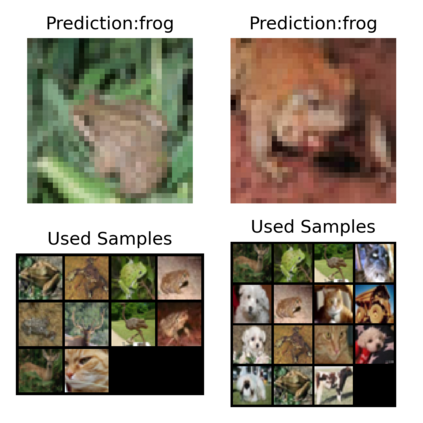

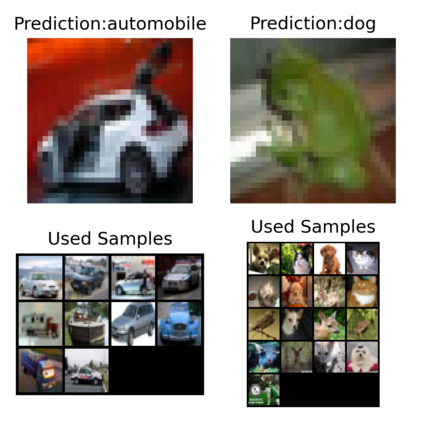

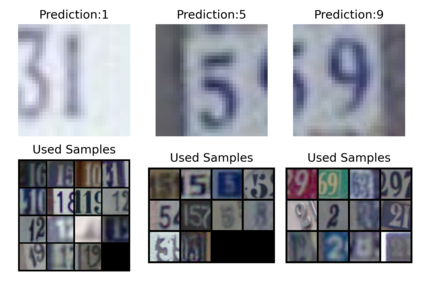

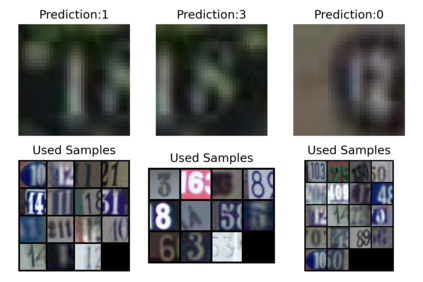

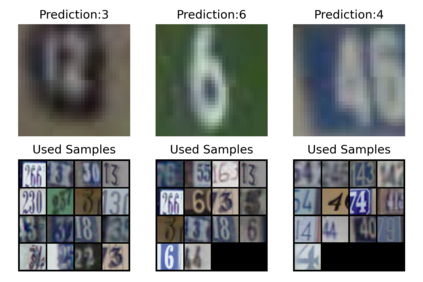

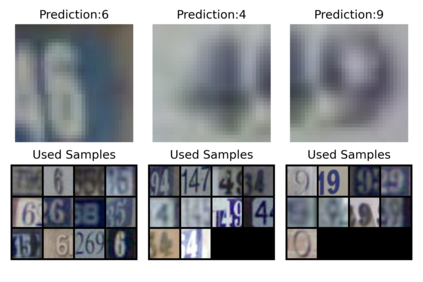

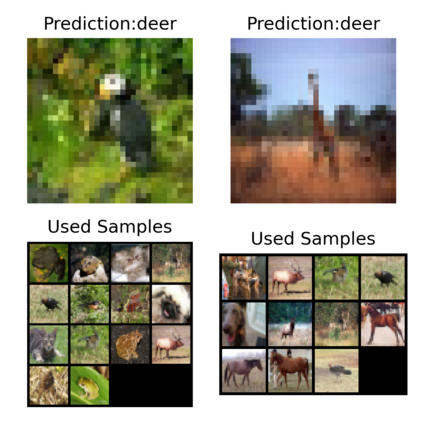

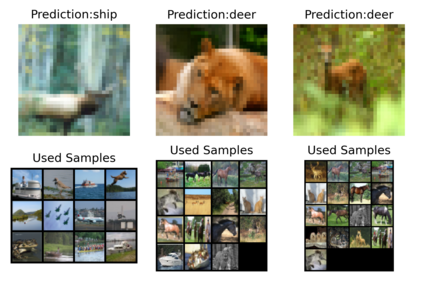

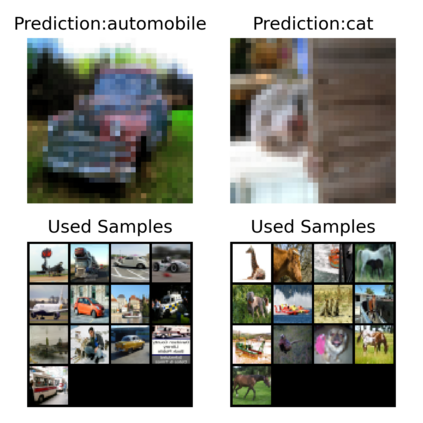

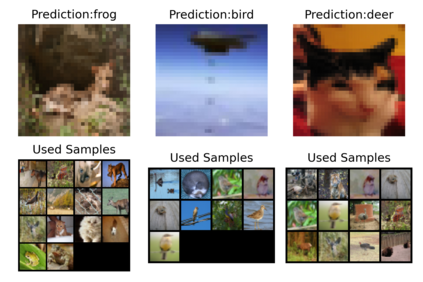

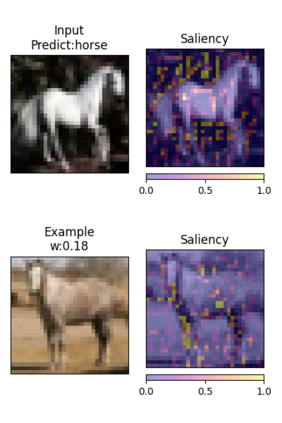

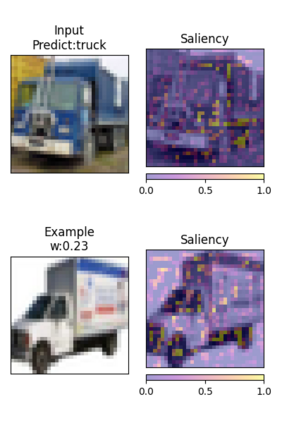

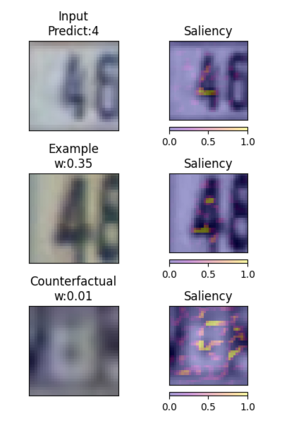

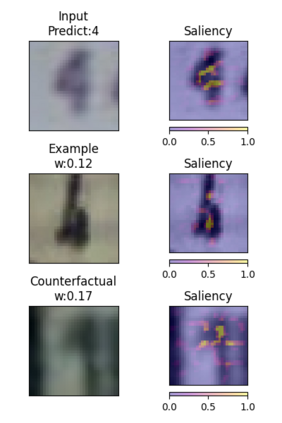

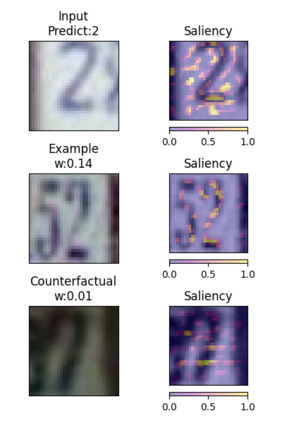

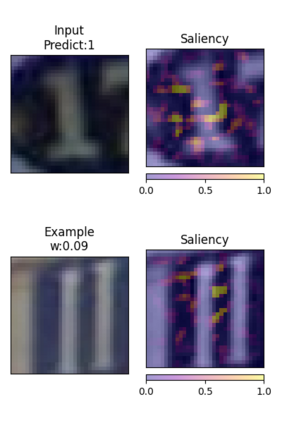

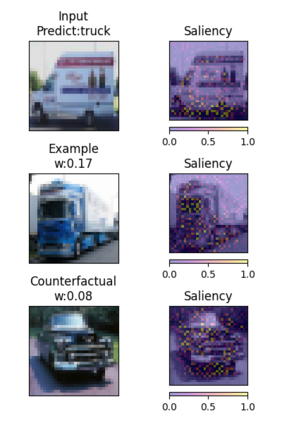

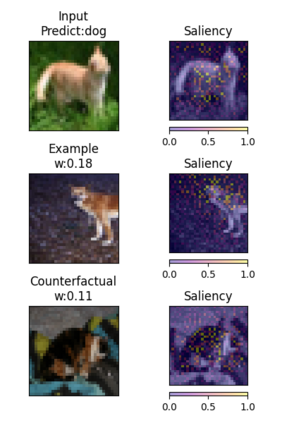

Due to their black-box and data-hungry nature, deep learning techniques have not yet been widely adopted for real-world applications in critical domains such as healthcare and justice. This paper presents Memory Wrap, a plug-and-play extension to any image classification model. Memory Wrap improves both data efficiency and model interpretability by adopting a content-attention mechanism between the input and a set of memories of past training samples. We show that Memory Wrap outperforms standard classifiers when it learns from a limited set of data and reaches comparable performance when it learns from the full dataset. We discuss how its structure and content-attention mechanism make its predictions more interpretable than those of standard classifiers. To this end, we show both a method to build explanations by example and counterfactuals based on the memory content, and how to exploit them to gain insights into the model's decision process. We test our approach on image classification tasks using several architectures on three different datasets, namely CIFAR10, SVHN, and CINIC10.
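
The content-attention step and the two kinds of explanation can be illustrated with a small sketch. It is not the authors' implementation, and several parts are assumptions: the input and the memory samples are taken to be already encoded into vectors of equal length by the wrapped model's backbone, memories are scored by cosine similarity, and the scores are normalized with sparsemax so that most memories get exactly zero weight. The helper names (`memory_wrap_step`, `explanation_by_example`, `counterfactual`) are hypothetical, as is the rule that a counterfactual is the highest-weighted memory whose label differs from the prediction.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vector = List[float]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two encodings; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def sparsemax(scores: Sequence[float]) -> Vector:
    """Euclidean projection of scores onto the probability simplex.

    Unlike softmax, low-scoring entries receive exactly 0 weight.
    """
    z_sorted = sorted(scores, reverse=True)
    running_sum = 0.0
    support_size, support_sum = 0, 0.0
    for k, z_k in enumerate(z_sorted, start=1):
        running_sum += z_k
        # Entry k stays in the support while 1 + k * z_(k) > sum of the top k.
        if 1.0 + k * z_k > running_sum:
            support_size, support_sum = k, running_sum
    tau = (support_sum - 1.0) / support_size  # threshold subtracted from every score
    return [max(z - tau, 0.0) for z in scores]


def memory_wrap_step(
    input_enc: Sequence[float], memory_encs: Sequence[Sequence[float]]
) -> Tuple[Vector, Vector]:
    """Content attention from one input encoding over the memory encodings.

    Returns (weights, features): features is the input encoding concatenated
    with the attention-weighted memory vector. A final classifier (not shown,
    assumed) would map features to class scores.
    """
    weights = sparsemax([cosine_similarity(input_enc, m) for m in memory_encs])
    memory_vector = [
        sum(w * m[d] for w, m in zip(weights, memory_encs))
        for d in range(len(input_enc))
    ]
    return weights, list(input_enc) + memory_vector


def explanation_by_example(weights: Sequence[float]) -> int:
    """Index of the memory sample with the largest attention weight."""
    return max(range(len(weights)), key=lambda i: weights[i])


def counterfactual(
    weights: Sequence[float], memory_labels: Sequence[str], predicted: str
) -> Optional[int]:
    """Highest-weighted memory from a class other than `predicted`, if any."""
    others = [i for i, label in enumerate(memory_labels) if label != predicted]
    if not others:
        return None
    return max(others, key=lambda i: weights[i])


if __name__ == "__main__":
    # Toy 2-D encodings standing in for backbone outputs (made-up values).
    memories = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
    labels = ["cat", "cat", "dog"]
    weights, features = memory_wrap_step([1.0, 0.05], memories)
    print(explanation_by_example(weights))         # 0
    print(counterfactual(weights, labels, "cat"))  # 2
```

In this toy run the two "cat" memories share almost all of the weight and the "dog" memory gets exactly zero, so the explanation points at a single supporting example. Sparsemax is used in the sketch because it keeps the set of contributing memories small enough to inspect, whereas a softmax would spread some weight over every memory; whether the original model makes the same choice should be confirmed against the paper.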

Related content

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate content inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem