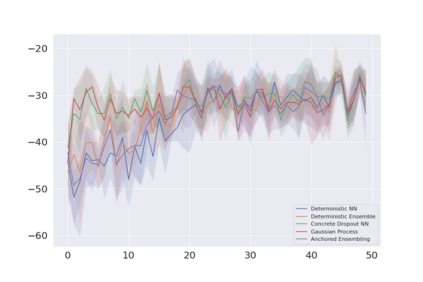

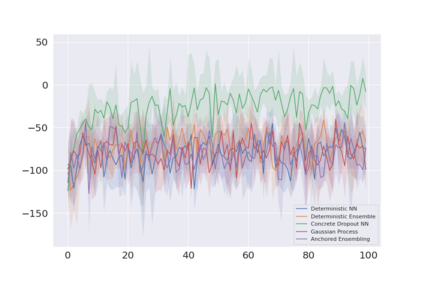

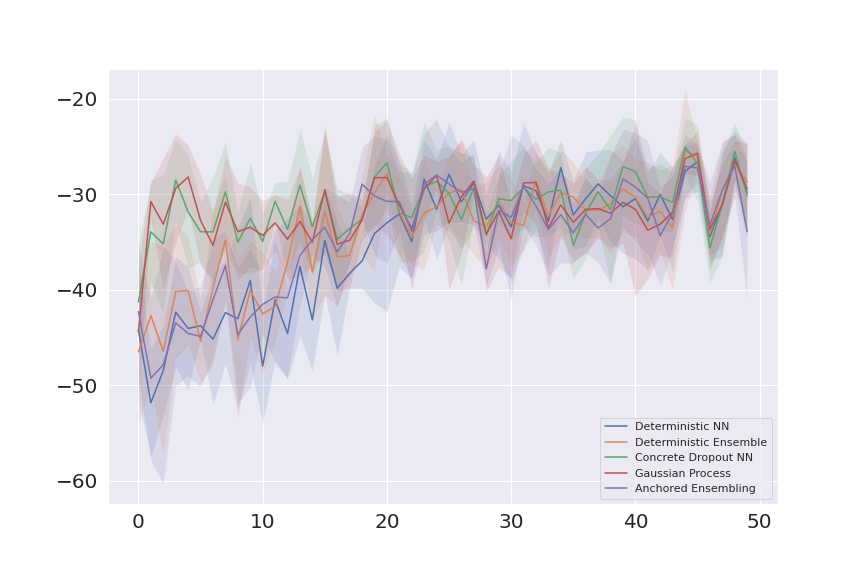

The need for algorithms able to solve Reinforcement Learning (RL) problems with few trials has motivated the advent of model-based RL methods. The reported performance of model-based algorithms has increased dramatically in recent years. However, it is not clear how much of this progress is due to improved algorithms and how much is due to improved models. While different modeling options are available when applying a model-based approach, the distinguishing traits and particular strengths of the different models are not clear. The main contribution of this work lies precisely in assessing the influence of the model on the performance of RL algorithms. A set of commonly adopted models is established for the purpose of model comparison. These include Neural Networks (NNs), ensembles of NNs, two different approximations of Bayesian NNs (BNNs), namely the Concrete Dropout NN and Anchored Ensembling, and Gaussian Processes (GPs). The models are compared on a suite of continuous control benchmarking tasks. Our results reveal that significant differences in model performance do exist. The Concrete Dropout NN shows consistently superior performance. We summarize these differences for the benefit of the modeler and suggest that the model choice be tailored to the standards required by each specific application.
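To make the idea of an ensemble dynamics model concrete, the following is a minimal sketch and not the setup used in the paper. It assumes a hypothetical scalar system `s_next = 0.9*s + 0.5*u + noise` and swaps in least-squares linear models as stand-ins for the NN ensemble members; all function names are illustrative. Each member is fit on a bootstrap resample of the transitions, and the spread of the member predictions serves as a rough proxy for model uncertainty.

```python
import random
import statistics

def fit_linear(data):
    """Least-squares fit of s_next = a*s + b*u from (s, u, s_next) triples."""
    sss = sum(s * s for s, u, _ in data)
    suu = sum(u * u for s, u, _ in data)
    ssu = sum(s * u for s, u, _ in data)
    ssy = sum(s * y for s, _, y in data)
    suy = sum(u * y for _, u, y in data)
    det = sss * suu - ssu * ssu  # determinant of the 2x2 normal equations
    a = (ssy * suu - ssu * suy) / det
    b = (sss * suy - ssu * ssy) / det
    return a, b

def fit_ensemble(data, n_members=5, seed=0):
    """Fit each member on a bootstrap resample (sampling with replacement)."""
    rng = random.Random(seed)
    members = []
    for _ in range(n_members):
        resample = [rng.choice(data) for _ in data]
        members.append(fit_linear(resample))
    return members

def predict(members, s, u):
    """Return the ensemble mean and the member disagreement (std) at (s, u)."""
    preds = [a * s + b * u for a, b in members]
    return statistics.mean(preds), statistics.pstdev(preds)

def make_data(n=200, seed=1):
    """Hypothetical toy transitions; the dynamics here are an assumption."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        s, u = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append((s, u, 0.9 * s + 0.5 * u + rng.gauss(0, 0.05)))
    return data

data = make_data()
ensemble = fit_ensemble(data)
mean_in, std_in = predict(ensemble, 0.5, 0.0)    # inside the training range
mean_out, std_out = predict(ensemble, 50.0, 0.0)  # far outside it
```

In this toy setting the member disagreement grows as the query moves away from the training data, which is the qualitative behavior that makes ensembles attractive for model-based RL. The models compared in the abstract (Concrete Dropout, Anchored Ensembling, GPs) obtain their uncertainty estimates by different mechanisms that this sketch does not cover.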
