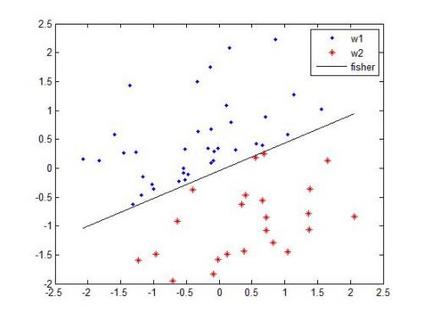

Unlike its intercept, the weight vector of a linear classifier cannot be tuned by a simple grid search. This paper therefore proposes tuning the weight vector of a generic binary linear classifier by parameterizing a decomposition of the discriminant with a scalar that controls the trade-off between conflicting informative and noisy terms. Varying this parameter modifies the original weight vector in a meaningful way. Applying the method to a number of linear classifiers under a variety of data dimensionality and sample size settings reveals that the loss in classification performance caused by non-optimal native hyperparameters can be compensated for by weight vector tuning. This yields computational savings, because the proposed method only tunes a scalar, whereas tuning the native hyperparameter may require regenerating the weight vector repeatedly, with the attendant burden of optimization, dimensionality reduction, etc., depending on the classifier. Weight vector tuning is also found to significantly improve the performance of Linear Discriminant Analysis (LDA) under high estimation noise. Proceeding from this second finding, the paper conducts an asymptotic study of the misclassification probability of the parameterized LDA classifier in the growth regime where the data dimensionality and sample size are comparable. Using random matrix theory, the misclassification probability is shown to converge to a quantity that is a function of the true statistics of the data. Additionally, an estimator of the misclassification probability is derived. Finally, computationally efficient tuning of the parameter using this estimator is demonstrated on real data.
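To make the computational argument concrete, the following is a minimal sketch of tuning a single scalar on top of a weight vector that is fitted only once. The abstract does not state the actual decomposition of the discriminant, so the one-parameter family used here (a blend of the normalized LDA direction and the normalized mean-difference direction, controlled by `alpha`) is a hypothetical stand-in and not the paper's method. The function names, the ridge term, and the synthetic Gaussian data are likewise assumptions made for illustration.

```python
import numpy as np


def fit_lda(X0, X1, ridge=1e-3):
    """Fit plug-in LDA once: returns the weight vector and the two class means."""
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    R0, R1 = X0 - mu0, X1 - mu1
    # Pooled sample covariance; the small ridge (an assumption) keeps it invertible.
    S = (R0.T @ R0 + R1.T @ R1) / (len(X0) + len(X1) - 2)
    S += ridge * np.eye(S.shape[0])
    w = np.linalg.solve(S, mu1 - mu0)
    return w, mu0, mu1


def tuned_classifier(w, mu0, mu1, alpha):
    """Hypothetical scalar family: alpha=1 keeps the LDA direction,
    alpha=0 falls back to the mean-difference direction."""
    d = mu1 - mu0
    w_alpha = alpha * w / np.linalg.norm(w) + (1 - alpha) * d / np.linalg.norm(d)
    b_alpha = -0.5 * w_alpha @ (mu0 + mu1)  # threshold at the midpoint of the means
    return w_alpha, b_alpha


def error_rate(w, b, X0, X1):
    """Fraction of misclassified points; class 1 is predicted when w.x + b > 0."""
    wrong0 = np.sum(X0 @ w + b > 0)
    wrong1 = np.sum(X1 @ w + b <= 0)
    return (wrong0 + wrong1) / (len(X0) + len(X1))


def tune_alpha(w, mu0, mu1, X0_val, X1_val, grid):
    """Grid search over the scalar only; the weight vector is never refitted."""
    errors = [
        error_rate(*tuned_classifier(w, mu0, mu1, a), X0_val, X1_val) for a in grid
    ]
    best = int(np.argmin(errors))
    return grid[best], errors[best]


# Synthetic setting with dimensionality comparable to the sample size.
rng = np.random.default_rng(0)
p, n_train, n_val = 50, 30, 200
shift = np.full(p, 0.3)
X0_tr = rng.standard_normal((n_train, p))
X1_tr = rng.standard_normal((n_train, p)) + shift
X0_val = rng.standard_normal((n_val, p))
X1_val = rng.standard_normal((n_val, p)) + shift

w, mu0, mu1 = fit_lda(X0_tr, X1_tr)
grid = np.linspace(0.0, 1.0, 21)
best_alpha, best_err = tune_alpha(w, mu0, mu1, X0_val, X1_val, grid)
print("best alpha:", best_alpha, "validation error:", best_err)
```

Each grid point costs only a vector blend and a pass over the validation set, while the covariance solve happens once. This is the kind of saving the abstract attributes to scalar tuning, in contrast to refitting the classifier for every candidate value of a native hyperparameter.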
