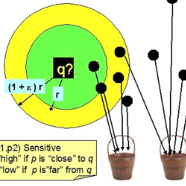

The effects of compressing generative adversarial networks (GANs) after training, i.e. without any fine-tuning, remain unstudied, even though the topic grows in importance as the computation and memory requirements of GANs increase. Existing work discusses the difficulty of compressing GANs during training, which requires novel methods designed with the instability of GAN training in mind. We show that existing compression methods (namely clipping and quantization) can be applied directly to compress GANs post-training, without any additional changes. High compression levels may distort the generated set, likely increasing the number of outliers, which may in turn negatively affect assessments made with existing k-nearest neighbor (KNN) based metrics. We propose two new precision and recall metrics based on locality-sensitive hashing (LSH). On top of increasing outlier robustness, they decrease the complexity of assessing an evaluation sample against $n$ reference samples from $O(n)$ to $O(\log(n))$ when combining LSH with KNN, and to $O(1)$ when applying LSH alone. We show that low-bit compression of several pre-trained GANs on multiple datasets induces a trade-off between precision and recall, retaining sample quality while sacrificing sample diversity.
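To make the $O(1)$ claim concrete, the following is a minimal sketch of how an LSH-only membership test could stand in for a nearest-neighbor search when estimating precision and recall. It is not the paper's definition of its metrics: the random-hyperplane hash, the function names (`make_hyperplanes`, `lsh_key`, `lsh_precision_recall`), the `n_bits` parameter, and the rule "a sample counts as covered if its bucket is occupied by the other set" are all assumptions made for illustration.

```python
import random


def make_hyperplanes(dim, n_bits, seed=0):
    """Draw n_bits random Gaussian hyperplanes (assumed hash family)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def lsh_key(vec, planes):
    """Hash a feature vector to a bit tuple: one sign bit per hyperplane."""
    return tuple(
        1 if sum(p * x for p, x in zip(plane, vec)) >= 0 else 0
        for plane in planes
    )


def coverage(eval_samples, ref_buckets, planes):
    """Fraction of eval_samples whose bucket is occupied by the reference set.

    Each lookup is one hash plus one set membership test, so its cost does
    not grow with the number of reference samples.
    """
    if not eval_samples:
        return 0.0
    hits = sum(lsh_key(s, planes) in ref_buckets for s in eval_samples)
    return hits / len(eval_samples)


def lsh_precision_recall(real, fake, n_bits=8, seed=0):
    """Illustrative LSH-only precision and recall (hypothetical definition).

    precision: share of generated samples landing in a bucket holding real data
    recall:    share of real samples landing in a bucket holding generated data
    """
    planes = make_hyperplanes(len(real[0]), n_bits, seed)
    real_buckets = {lsh_key(s, planes) for s in real}
    fake_buckets = {lsh_key(s, planes) for s in fake}
    precision = coverage(fake, real_buckets, planes)
    recall = coverage(real, fake_buckets, planes)
    return precision, recall


# Toy "mode collapse": the generator reproduces only one of two real modes.
real = [[1.0, 2.0], [-1.0, -2.0]]
fake = [[1.0, 2.0]]
precision, recall = lsh_precision_recall(real, fake)
print(precision, recall)
```

In this toy case every generated sample falls in an occupied real bucket while only half of the real samples are matched, which mirrors the quality-versus-diversity trade-off described above. In practice the inputs would be feature embeddings rather than raw two-dimensional points, and the number of hash bits controls how coarse the buckets are.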
