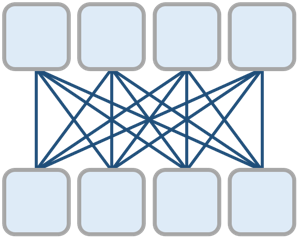

Foundation models have received much attention due to their effectiveness across a broad range of downstream applications. Although architectures have largely converged, most pretrained models are still developed for specific tasks or modalities. In this work, we propose to use language models as a general-purpose interface to various foundation models. A collection of pretrained encoders perceive diverse modalities (such as vision and language), and they dock with a language model that plays the role of a universal task layer. We propose a semi-causal language modeling objective to jointly pretrain the interface and the modular encoders. We subsume the advantages and capabilities of both causal and non-causal modeling, thereby combining the best of both worlds. Specifically, the proposed method not only inherits the capabilities of in-context learning and open-ended generation from causal language modeling, but is also conducive to finetuning because of the bidirectional encoders. More importantly, our approach seamlessly unlocks combinations of these capabilities, e.g., enabling in-context learning or instruction following with finetuned encoders. Experimental results across various language-only and vision-language benchmarks show that our model outperforms or is competitive with specialized models on finetuning, zero-shot generalization, and few-shot learning.
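
To make the semi-causal objective more concrete, the following is a minimal Python sketch built on stated assumptions. It is not the authors' implementation. The function name `semi_causal_masks`, the half-open span convention, and the choice to express the bidirectional encoders as span-local attention inside a single mask are all hypothetical simplifications. Positions inside a span see only their own span, in both directions, standing in for a bidirectional encoder. Every other position sees all earlier positions, as in a causal language model. Only positions outside the spans count as language-modeling targets.

```python
from typing import List, Tuple

# Hypothetical convention: a span is a half-open [start, end) token range.
Span = Tuple[int, int]


def semi_causal_masks(seq_len: int, spans: List[Span]) -> Tuple[List[List[bool]], List[bool]]:
    """Return (attend, loss_mask) for one sequence.

    attend[i][j] is True if position i may attend to position j.
    loss_mask[i] is True if the token at position i is an LM target.
    Illustrative sketch only: span positions act like a bidirectional
    encoder that sees just its own span; the rest is causal.
    """
    # Map each position to the index of the span containing it, or -1.
    span_id = [-1] * seq_len
    for k, (start, end) in enumerate(spans):
        if not (0 <= start < end <= seq_len):
            raise ValueError(f"invalid span {(start, end)}")
        for i in range(start, end):
            if span_id[i] != -1:
                raise ValueError("spans must not overlap")
            span_id[i] = k

    attend = [[False] * seq_len for _ in range(seq_len)]
    for i in range(seq_len):
        for j in range(seq_len):
            if span_id[i] != -1:
                # "Encoder" position: full attention within its own span only.
                attend[i][j] = span_id[j] == span_id[i]
            else:
                # "Interface LM" position: causal attention over everything before it,
                # including span positions (which stand in for encoder outputs).
                attend[i][j] = j <= i

    # Language-modeling targets are only the tokens outside the spans.
    loss_mask = [span_id[i] == -1 for i in range(seq_len)]
    return attend, loss_mask
```

For example, `semi_causal_masks(6, [(1, 3)])` lets positions 1 and 2 attend to each other but not to position 0, while position 3 attends causally to positions 0 through 3. The returned loss mask is `[True, False, False, True, True, True]`. Keeping the span positions blind to the surrounding context mirrors the idea of separate modular encoders. A shared-backbone variant could instead let span positions also see earlier context.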
