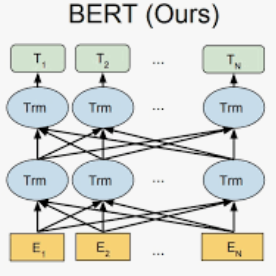

Embeddings, which compress the information in raw text into semantics-preserving low-dimensional vectors, have been widely adopted for their effectiveness. However, recent research has shown that embeddings can leak private information about sensitive attributes of the text and, in some cases, can be inverted to recover the original input text. To address these growing privacy challenges, we propose a privatization mechanism for embeddings based on homomorphic encryption that prevents the leakage of any information during text classification. In particular, our method performs text classification on encrypted embeddings from state-of-the-art models such as BERT, supported by an efficient GPU implementation of the CKKS encryption scheme. We show that our method provides encryption-based protection for BERT embeddings while largely preserving their utility on downstream text classification tasks.
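
The abstract does not specify the protocol, so the following is only a minimal sketch of the general idea. It assumes a client that encrypts its embedding under a secret key and a server that scores the ciphertext with a plaintext linear classifier. It is neither the authors' implementation nor real CKKS: it swaps in a toy, insecure symmetric RLWE scheme that borrows CKKS's fixed-point scale but packs values into polynomial coefficients instead of CKKS slots, and it skips rescaling, relinearization, and rotations. The parameters `N`, `Q`, and `DELTA` and the helpers `keygen`, `encrypt`, `encrypted_score`, and `decrypt_score` are hypothetical names chosen for illustration.

```python
import random

# Toy, INSECURE parameters chosen for illustration only (real CKKS uses N >= 2**13).
N = 16            # ring dimension: plaintexts live in Z_Q[X] / (X^N + 1)
Q = 2 ** 100      # ciphertext modulus
DELTA = 2 ** 30   # CKKS-style fixed-point scale applied to real values


def poly_mul(a, b):
    """Multiply two length-N polynomials modulo X^N + 1 and Q (negacyclic convolution)."""
    out = [0] * N
    for i, ai in enumerate(a):
        if ai == 0:
            continue
        for j, bj in enumerate(b):
            k = i + j
            if k < N:
                out[k] += ai * bj
            else:
                out[k - N] -= ai * bj  # wrap around using X^N = -1
    return [c % Q for c in out]


def centered(c):
    """Map a residue mod Q to the interval (-Q/2, Q/2]."""
    c %= Q
    return c - Q if c > Q // 2 else c


def keygen(rng):
    """Client: sample a ternary secret key with coefficients in {-1, 0, 1}."""
    return [rng.choice((-1, 0, 1)) for _ in range(N)]


def encrypt(s, values, rng):
    """Client: scale values by DELTA into coefficients, then encrypt as (b, a)."""
    assert len(values) <= N
    m = [round(v * DELTA) for v in values] + [0] * (N - len(values))
    a = [rng.randrange(Q) for _ in range(N)]       # uniformly random mask
    e = [rng.randint(-3, 3) for _ in range(N)]     # small noise
    b = [(-x + mi + ei) % Q for x, mi, ei in zip(poly_mul(a, s), m, e)]
    return b, a                                    # b + a*s = m + e (mod Q)


def encrypted_score(ct, weights, bias):
    """Server: turn Enc(x) into an encryption of w.x + bias without decrypting."""
    b, a = ct
    d = len(weights)
    assert 0 < d <= N
    # Reversed weights make coefficient d-1 of m(X) * w(X) equal to sum_i x_i * w_i;
    # the other coefficients carry cross terms and are ignored.
    w_poly = [0] * N
    for i, wi in enumerate(weights):
        w_poly[d - 1 - i] = round(wi * DELTA)
    b2, a2 = poly_mul(b, w_poly), poly_mul(a, w_poly)
    # The product has scale DELTA**2, so the bias is added at that scale.
    b2[d - 1] = (b2[d - 1] + round(bias * DELTA * DELTA)) % Q
    return b2, a2, d - 1


def decrypt_score(s, ct_score):
    """Client: decrypt the coefficient holding the score and undo the scale."""
    b, a, idx = ct_score
    phase = [(bi + x) % Q for bi, x in zip(b, poly_mul(a, s))]
    return centered(phase[idx]) / (DELTA * DELTA)


if __name__ == "__main__":
    rng = random.Random(0)
    s = keygen(rng)                          # the client keeps s private
    x = [0.12, -0.5, 0.33, 0.9]              # stand-in for a (tiny) sentence embedding
    w, bias = [0.8, -0.1, 0.5, 0.3], -0.05   # server's plaintext linear classifier
    ct = encrypt(s, x, rng)                  # only the ciphertext leaves the client
    score = decrypt_score(s, encrypted_score(ct, w, bias))
    print(round(score, 6), "positive" if score > 0 else "negative")
```

Storing the weights in reverse order makes coefficient `d - 1` of the product equal to the dot product, so the sketch avoids the slot rotations a CKKS implementation would typically use to sum across slots. The decrypted score is approximate, with an error of roughly the noise divided by Δ², which mirrors the approximate arithmetic that CKKS is designed around.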

翻译:将原始文本中的信息压缩成保留语义低维矢量的原始文本,这种嵌入式信息因其功效而被广泛采用。然而,最近的研究表明,嵌入式可能会泄漏关于文本敏感属性的私人信息,在某些情况下,可能会被倒置以恢复原始输入文本。为了应对这些日益增长的隐私挑战,我们提议了一个基于同质加密的嵌入式私营机制,以防止在文本分类过程中任何部分信息可能渗漏。特别是,我们的方法对从最先进的模型(如BERT)中嵌入的加密进行文本分类,并辅之以高效的GPU实施 CKKS加密计划。我们表明,我们的方法为BERT嵌入式提供了加密保护,同时基本上保留其在下游文本分类工作中的实用性。