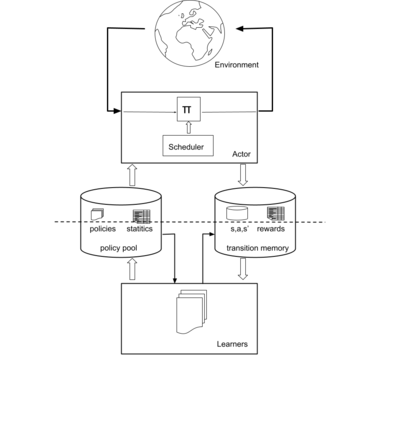

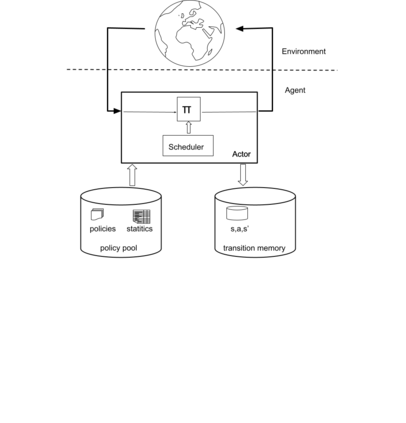

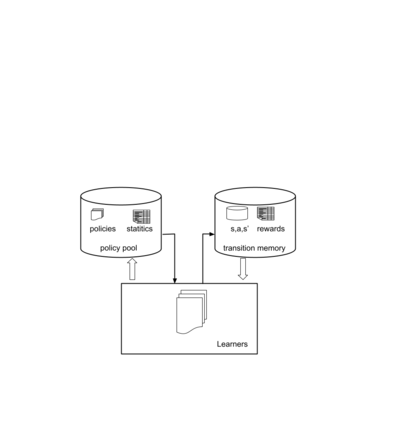

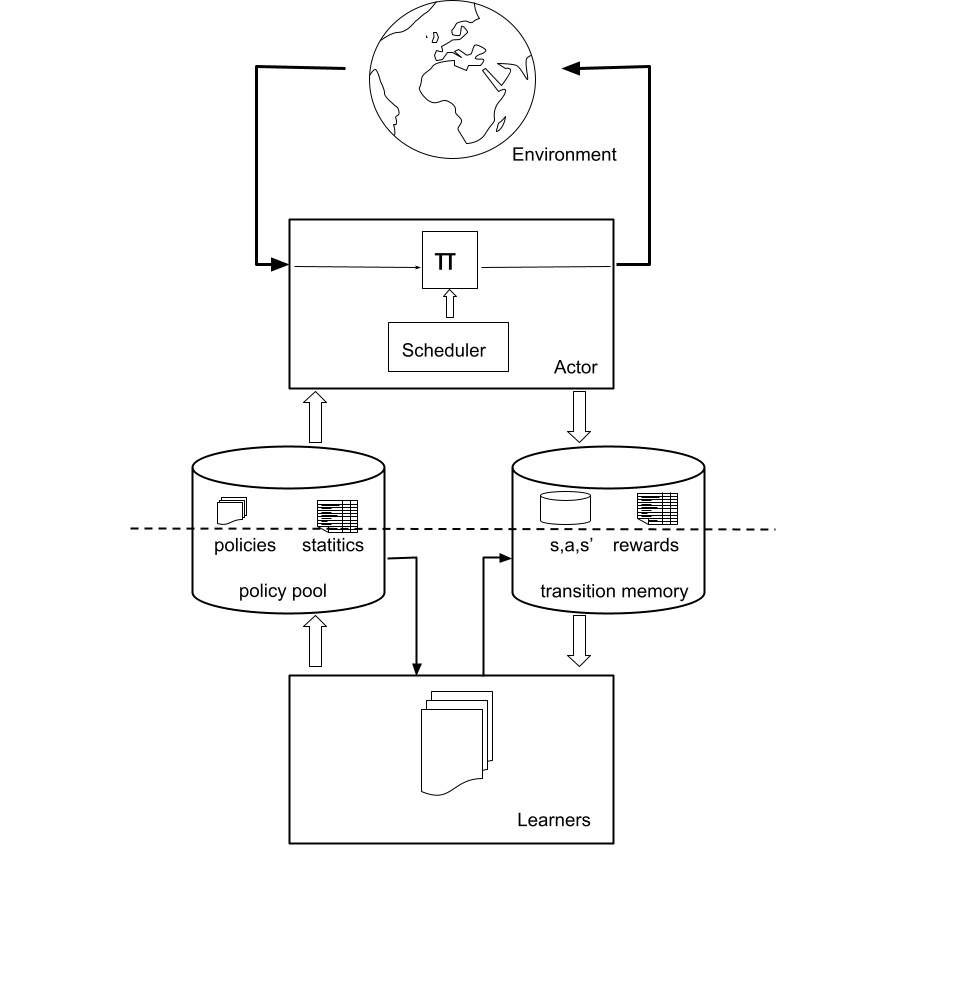

This position paper proposes a fresh look at Reinforcement Learning (RL) from the perspective of data-efficiency. Data-efficient RL has gone through three major stages: pure on-line RL where every data-point is considered only once, RL with a replay buffer where additional learning is done on a portion of the experience, and finally transition memory based RL, where, conceptually, all transitions are stored and re-used in every update step. While inferring knowledge from all explicitly stored experience has lead to a tremendous gain in data-efficiency, the question of how this data is collected has been vastly understudied. We argue that data-efficiency can only be achieved through careful consideration of both aspects. We propose to make this insight explicit via a paradigm that we call 'Collect and Infer', which explicitly models RL as two separate but interconnected processes, concerned with data collection and knowledge inference respectively. We discuss implications of the paradigm, how its ideas are reflected in the literature, and how it can guide future research into data efficient RL.

翻译:本立场文件建议从数据效率的角度重新审视加强学习(RL),数据效率RL经历了三个主要阶段:纯在线RL,其中每个数据点只考虑一次;RL,带有一个回放缓冲,其中根据部分经验进行更多的学习;最后,基于过渡记忆的RL,从概念上讲,所有过渡都储存起来,并在每个更新步骤中重新使用。虽然从所有明确储存的经验中推断出知识已导致数据效率的极大提高,但如何收集这些数据的问题却未得到充分研究。我们说,数据效率只有通过认真审议这两个方面才能实现。我们提议,通过我们称之为“聚合和推论”的模式来明确阐述这一洞见,这个模式明确地将RL作为两个分别与数据收集和知识推论有关的独立但相互关联的过程。我们讨论了范例的影响,如何在文献中反映其想法,以及如何指导今后对数据效率的研究。