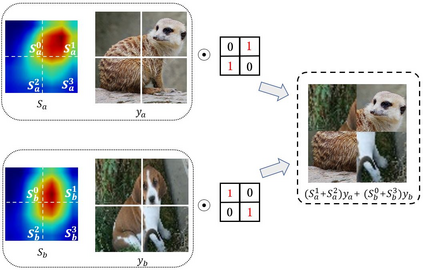

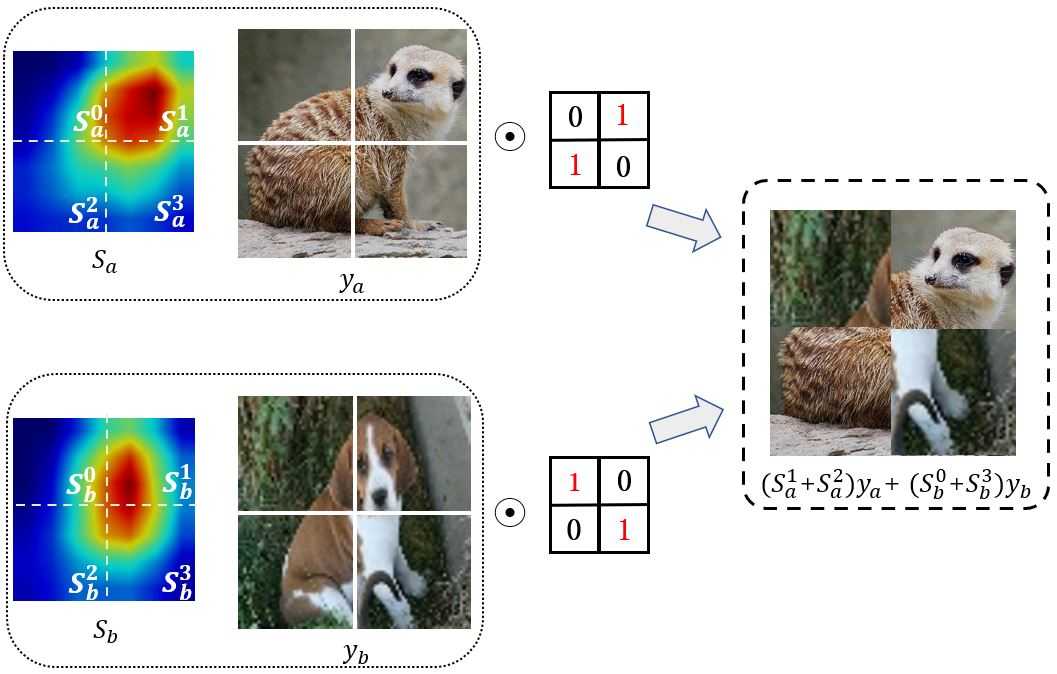

Few-shot learning aims to classify unseen classes given only a limited number of labeled examples. Recent works have demonstrated that models trained with a simple transfer learning strategy can achieve competitive results in few-shot classification. Although they excel at distinguishing training data, these models do not generalize well to unseen data, probably because the learned feature representations are insufficient at evaluation time. To tackle this issue, we propose Semantically Proportional Patchmix (SePPMix), in which patches are cut and pasted among training images and the ground-truth labels are mixed in proportion to the semantic information of the patches. In this way, we improve the generalization ability of the model through a regional dropout effect without introducing severe label noise. To learn more robust representations, we further apply rotation transformations to the mixed images and predict the rotations as a rule-based regularizer. Extensive experiments on prevalent few-shot benchmarks demonstrate the effectiveness of the proposed method.
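The patch mixing and rotation steps described above can be illustrated with a minimal NumPy sketch. The abstract does not say how semantic information is measured, so this sketch assumes a non-negative per-pixel saliency map is already available for each image (for example, from a class activation map). The function names, the `box` parameter, and the normalization of the label weights are all hypothetical choices made for illustration and are not taken from the paper.

```python
import numpy as np


def semantic_patchmix(img_a, img_b, sal_a, sal_b, y_a, y_b, box):
    """Paste a patch of img_b into img_a and mix the labels.

    Assumption (not from the paper): sal_a and sal_b are non-negative
    (H, W) saliency maps standing in for "semantic information".
    box is (top, left, height, width) of the pasted region.
    """
    top, left, h, w = box
    rows = slice(top, top + h)
    cols = slice(left, left + w)

    # Cut the patch from img_b and paste it at the same location in img_a.
    mixed = img_a.copy()
    mixed[rows, cols] = img_b[rows, cols]

    eps = 1e-8
    # Share of image A's semantic mass that survives outside the box.
    kept_a = 1.0 - sal_a[rows, cols].sum() / (sal_a.sum() + eps)
    # Share of image B's semantic mass carried by the pasted patch.
    pasted_b = sal_b[rows, cols].sum() / (sal_b.sum() + eps)

    # Hypothetical normalization so the two label weights sum to 1.
    w_b = pasted_b / (kept_a + pasted_b + eps)
    label = (1.0 - w_b) * y_a + w_b * y_b
    return mixed, label


def rotate_with_label(img, k):
    """Rotate an (H, W, C) image by k * 90 degrees; k is the rotation target."""
    return np.rot90(img, k=k, axes=(0, 1)), k
```

With uniform saliency maps the label weights reduce to area proportions, as in plain cut-and-paste mixing. When the pasted patch covers most of the salient region of the second image, its label receives a correspondingly larger weight, which is the intuition behind mixing labels by semantic content rather than by area.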
