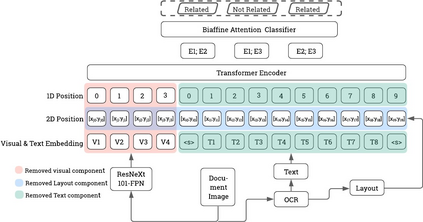

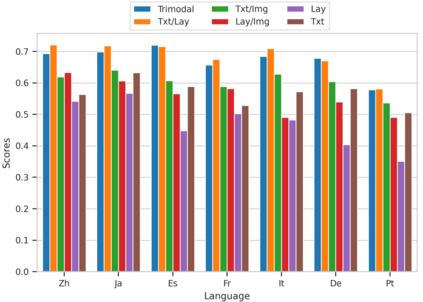

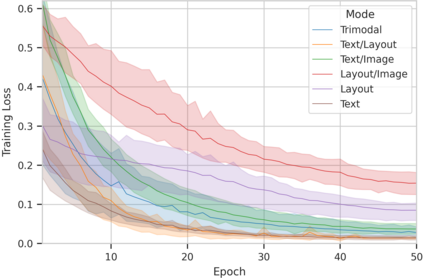

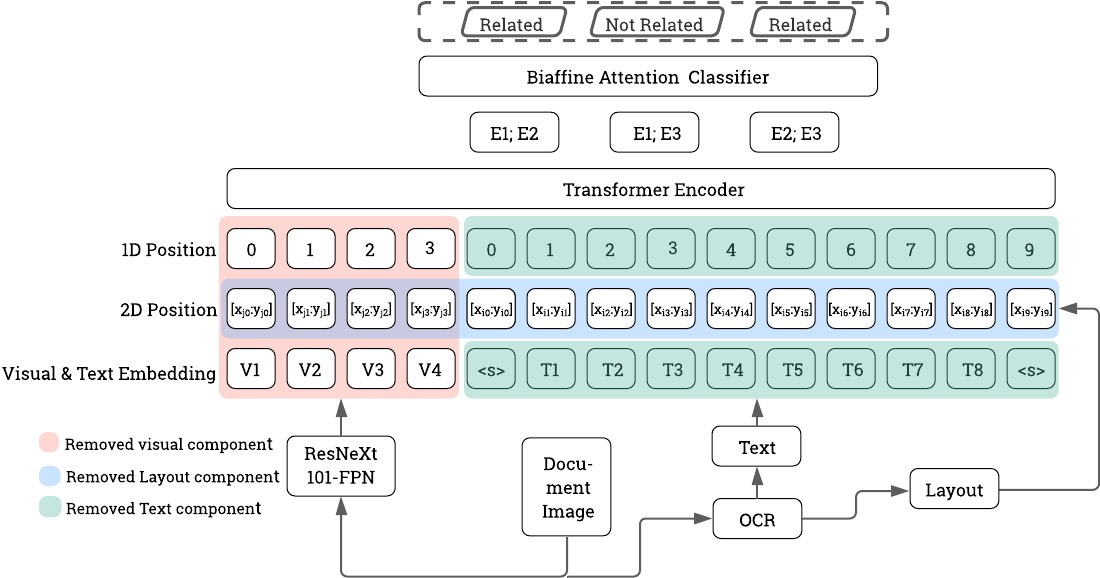

Multimodal integration of text, layout, and visual information has achieved state-of-the-art (SOTA) results in visually rich document understanding (VrDU) tasks, including relation extraction (RE). Despite the importance of these modalities, however, their relative predictive capacity is rarely evaluated. Here, we demonstrate the value of shared representations for RE by conducting experiments in which each data type is excluded in turn during training. In addition, text and layout data are evaluated in isolation. While a bimodal text and layout approach performs best (F1=0.684), we show that text is the most important single predictor of entity relations. Layout geometry is also highly predictive and may even be feasible as a unimodal approach. Although visual information is less effective, we highlight circumstances where it can bolster performance. Overall, our results demonstrate the efficacy of training joint representations for RE.
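
The ablation design described above can be sketched as a simple enumeration of the modality subsets to train on. This is only an illustrative sketch: the function name `ablation_configs`, the modality labels, and the inclusion of a full three-modality baseline are assumptions made here, not details taken from the paper.

```python
# Hypothetical modality labels; the paper names text, layout, and visual inputs.
MODALITIES = ("text", "layout", "visual")


def ablation_configs(modalities=MODALITIES, unimodal=("text", "layout")):
    """Return the modality subsets to train on, each as a tuple of names."""
    # Assumed baseline: all modalities together.
    configs = [tuple(modalities)]
    # Leave-one-out runs: drop each modality in turn.
    for dropped in modalities:
        configs.append(tuple(m for m in modalities if m != dropped))
    # Unimodal runs: the abstract evaluates text and layout in isolation.
    for m in unimodal:
        configs.append((m,))
    return configs


if __name__ == "__main__":
    for cfg in ablation_configs():
        print("+".join(cfg))
```

Each returned tuple would correspond to one training run. The bimodal text and layout configuration reported as best in the abstract is the run in which the visual modality is dropped.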
