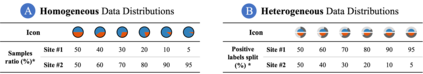

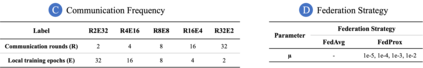

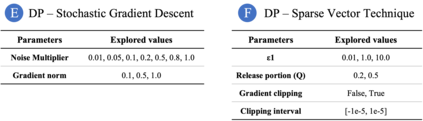

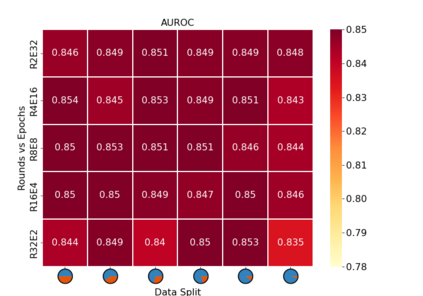

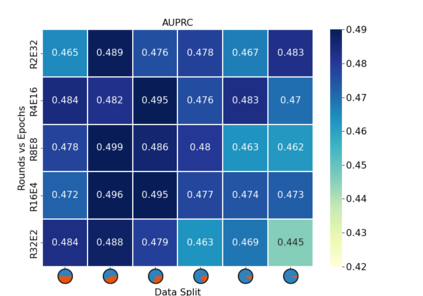

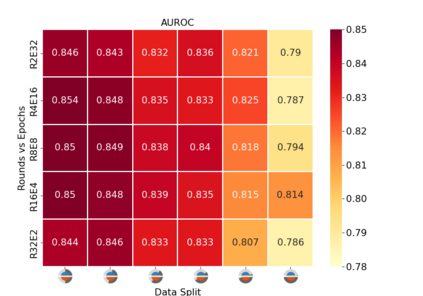

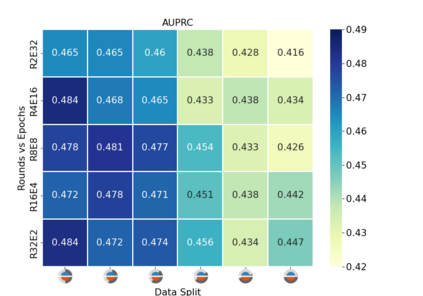

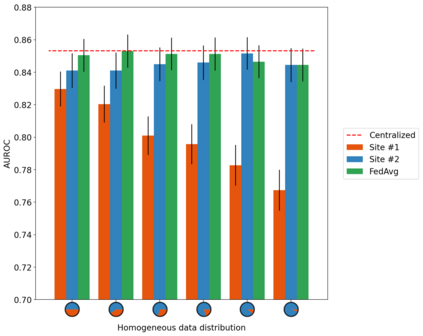

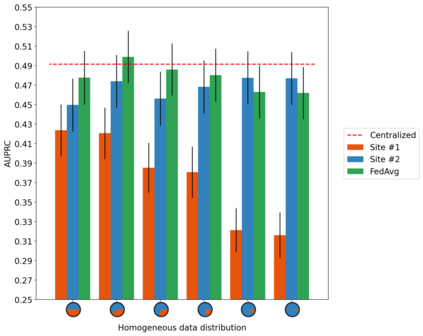

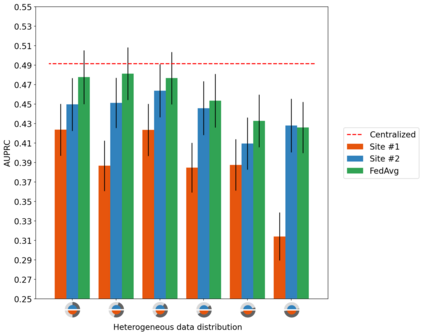

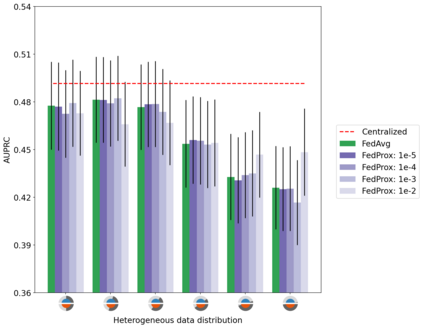

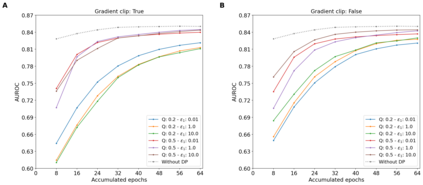

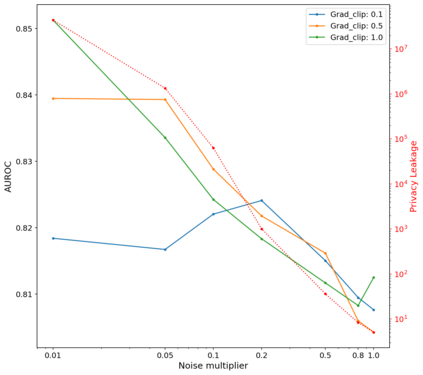

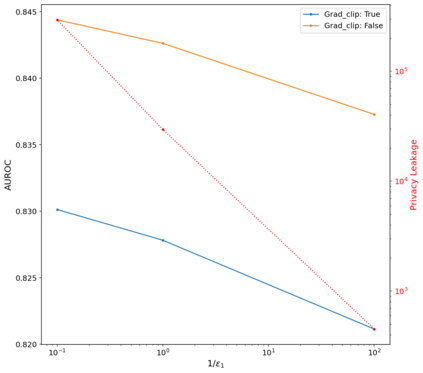

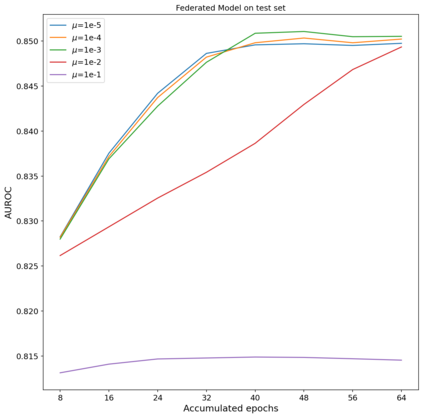

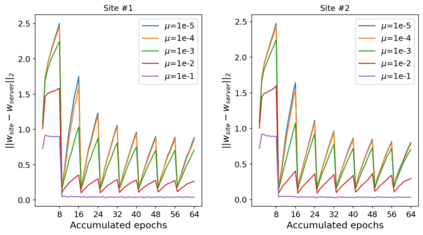

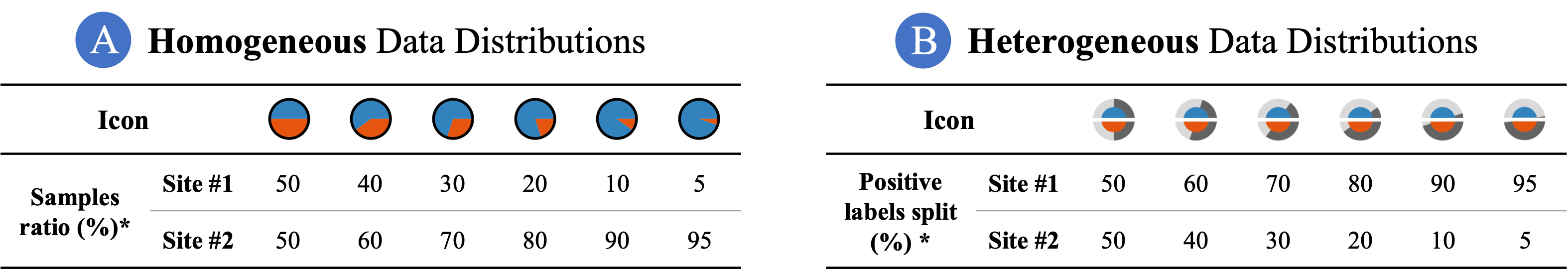

Background: Federated learning methods offer the possibility of training machine learning models on privacy-sensitive data sets, which cannot be easily shared. Multiple regulations pose strict requirements on the storage and usage of healthcare data, leading to data being in silos (i.e. locked-in at healthcare facilities). The application of federated algorithms on these datasets could accelerate disease diagnostic, drug development, as well as improve patient care. Methods: We present an extensive evaluation of the impact of different federation and differential privacy techniques when training models on the open-source MIMIC-III dataset. We analyze a set of parameters influencing a federated model performance, namely data distribution (homogeneous and heterogeneous), communication strategies (communication rounds vs. local training epochs), federation strategies (FedAvg vs. FedProx). Furthermore, we assess and compare two differential privacy (DP) techniques during model training: a stochastic gradient descent-based differential privacy algorithm (DP-SGD), and a sparse vector differential privacy technique (DP-SVT). Results: Our experiments show that extreme data distributions across sites (imbalance either in the number of patients or the positive label ratios between sites) lead to a deterioration of model performance when trained using the FedAvg strategy. This issue is resolved when using FedProx with the use of appropriate hyperparameter tuning. Furthermore, the results show that both differential privacy techniques can reach model performances similar to those of models trained without DP, however at the expense of a large quantifiable privacy leakage. Conclusions: We evaluate empirically the benefits of two federation strategies and propose optimal strategies for the choice of parameters when using differential privacy techniques.

翻译:联邦学习方法提供了在隐私敏感数据集方面培训机器学习模型的可能性,这些模型是不能轻易共享的; 多种条例对保健数据的储存和使用提出了严格的要求,对保健数据的储存和使用提出了严格的要求,导致数据处于筒仓状态(即在保健设施内锁定); 在这些数据集上应用联邦算法可以加速疾病诊断、药物开发以及改善病人护理; 方法: 我们广泛评价了不同联邦和不同隐私技术的影响,在开放源代码MIMIMIC-III数据集培训模型时,我们分析了影响联合式模型性能的一套参数,即数据分配(混合和混合的参数)、通信战略(通信回合与当地培训),联邦战略(FedAvg诉FedProx)。 此外,我们在模型培训中评估和比较了两种差异性隐私(DP)技术:基于血压梯度的梯度血统差异隐私权模型(DP-SGD),以及稀释病媒差异性能评估(DP-SVT) 。 结果:我们的实验表明,在不使用数据模型的极端数据分布在各站点(在经过培训的大型成本评估后,在使用经过精细化后,使用联邦的变变换的策略中,可以显示这些变换病人的成绩比率。