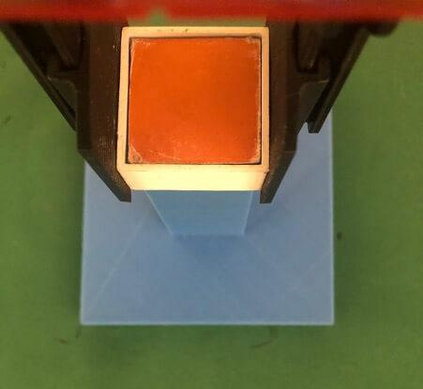

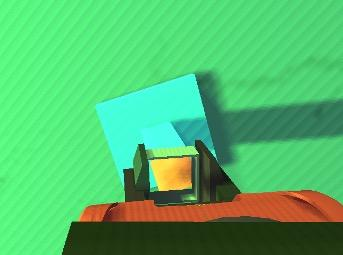

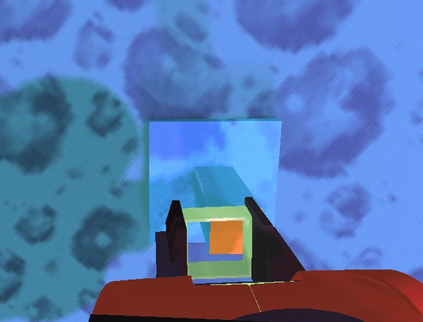

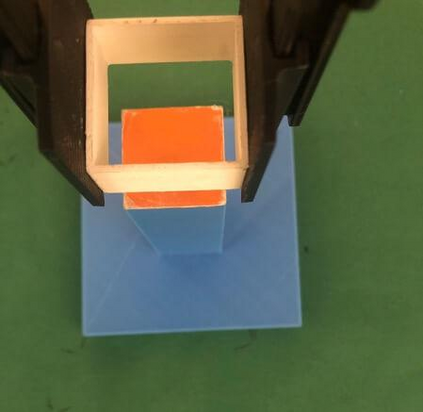

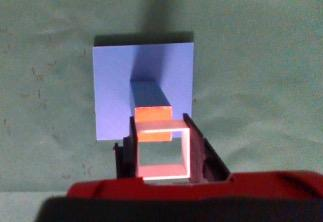

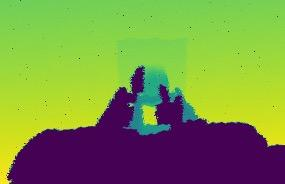

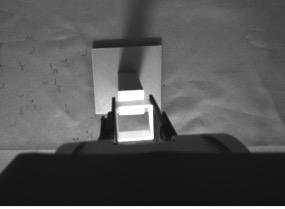

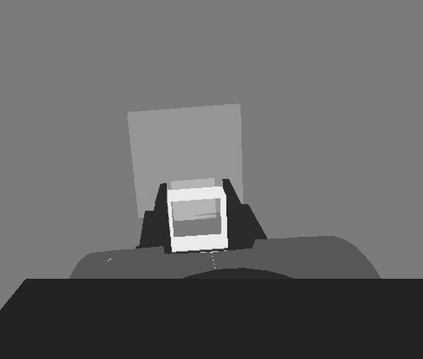

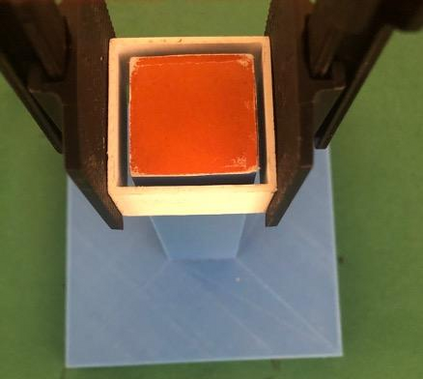

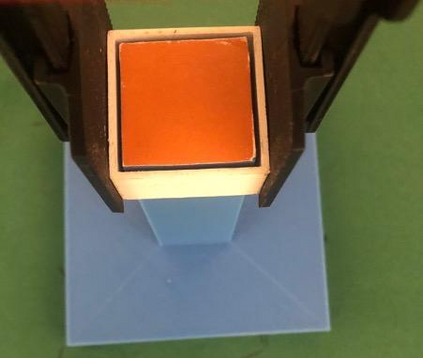

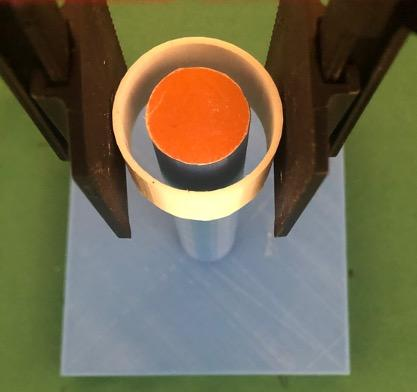

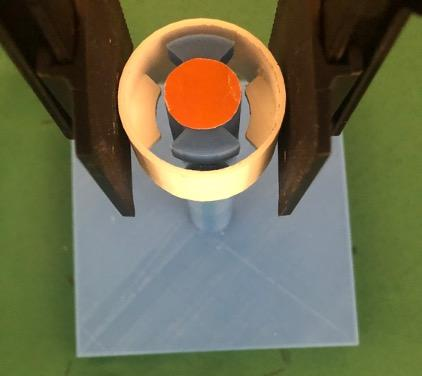

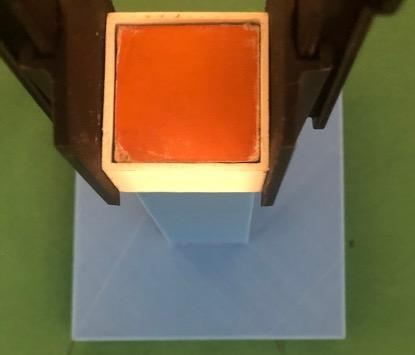

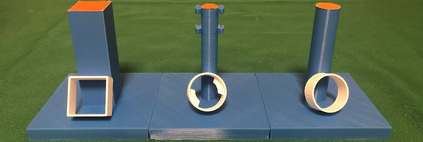

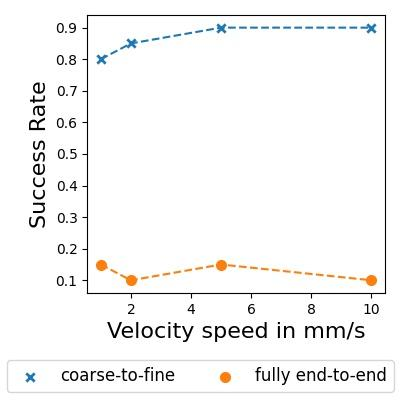

When training control policies for robot manipulation via deep learning, sim-to-real transfer can help satisfy the large data requirements. In this paper, we study the problem of zero-shot sim-to-real transfer when the task requires both highly precise control, with sub-millimetre error tolerance, and generalisation across the full workspace. Our framework uses a coarse-to-fine controller, in which trajectories begin with classical motion planning based on pose estimation and then transition to an end-to-end controller that maps images to actions and is trained in simulation with domain randomisation. In this way, we achieve precise control whilst also generalising the controller across the workspace and retaining the generality and robustness of vision-based, end-to-end control. Real-world experiments on a range of tasks show that, by exploiting the best of both worlds, our framework significantly outperforms both purely motion-planning methods and purely learning-based methods. Furthermore, we answer a range of questions on best practices for precise sim-to-real transfer, such as how different image sensor modalities and image feature representations perform.
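To make the coarse-to-fine idea concrete, the following is a minimal Python sketch, not the implementation used in the paper. Every name in it (`plan_straight_line`, `coarse_to_fine`, `proportional_policy`, the `standoff` hand-over offset, and the step sizes) is a hypothetical choice made for illustration. In particular, the fine-phase policy here is a simple proportional controller acting on a position offset, which stands in for the learned image-to-action network, and the coarse phase uses straight-line interpolation in place of a full motion planner.

```python
import math
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


def plan_straight_line(start: Vec3, goal: Vec3, step: float) -> List[Vec3]:
    """Coarse phase stand-in: evenly spaced waypoints on a straight line to the goal."""
    n = max(1, math.ceil(math.dist(start, goal) / step))
    return [
        tuple(s + (g - s) * i / n for s, g in zip(start, goal))
        for i in range(1, n + 1)
    ]


def proportional_policy(observation: Vec3, gain: float = 0.5) -> Vec3:
    """Fine phase stand-in for a learned policy.

    A real end-to-end controller would map an image to an action; here the
    observation is assumed to be the remaining offset to the target.
    """
    return tuple(gain * o for o in observation)


def coarse_to_fine(
    start: Vec3,
    estimated_target: Vec3,
    standoff: float,
    policy: Callable[[Vec3], Vec3],
    observe: Callable[[Vec3], Vec3],
    coarse_step: float = 0.01,
    tolerance: float = 1e-4,
    max_fine_steps: int = 200,
) -> List[Vec3]:
    """Return the visited positions (metres) for one coarse-to-fine trajectory."""
    # Coarse phase: move open-loop to a hand-over point above the estimated
    # target pose. The pose estimate may be off by several millimetres.
    handover = (
        estimated_target[0],
        estimated_target[1],
        estimated_target[2] + standoff,
    )
    path = plan_straight_line(start, handover, coarse_step)
    position = path[-1]

    # Fine phase: closed-loop control from observations until the commanded
    # action becomes smaller than the tolerance.
    for _ in range(max_fine_steps):
        action = policy(observe(position))
        if max(abs(a) for a in action) < tolerance:
            break
        position = tuple(p + a for p, a in zip(position, action))
        path.append(position)
    return path
```

In this toy setting the coarse phase only needs to bring the end-effector close to the target, so a pose-estimation error of a few millimetres is acceptable; the closed-loop fine phase then removes the residual error. Passing an `observe` function that returns the offset to the true target, rather than to the estimated one, mimics the fact that the fine controller acts on what it sees instead of on the pose estimate.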
