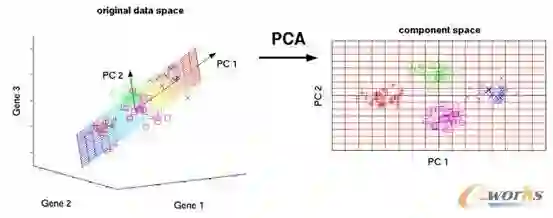

We analyze the classical method of principal component regression (PCR) in a high-dimensional error-in-variables setting. Here, the observed covariates are noisy and contain missing values, and the number of covariates can exceed the sample size. Under suitable conditions, we establish that PCR identifies the unique linear model parameter with minimum $\ell_2$-norm, and derive non-asymptotic $\ell_2$-rates of convergence that show its consistency. Furthermore, we develop an algorithm for out-of-sample prediction in the presence of corrupted data that uses PCR as a key subroutine, and provide non-asymptotic guarantees on its prediction performance. Notably, our results do not require the out-of-sample covariates to follow the same distribution as the in-sample covariates; they need only obey a simple linear algebraic constraint. We provide simulations that illustrate our theoretical results.
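To make the setting concrete, the following is a minimal sketch of PCR on noisy, partially observed covariates. It is an illustration under stated assumptions, not necessarily the exact procedure analyzed in the paper: missing entries are assumed to be encoded as NaN, they are filled with zeros and rescaled by the observed fraction, and the number of retained components `k` is assumed known. The function name `pcr_fit` and the synthetic data are hypothetical.

```python
import numpy as np

def pcr_fit(Z, y, k):
    """Principal component regression on corrupted covariates (illustrative sketch).

    Z : (n, p) array of observed covariates, NaN marks a missing entry (assumption).
    y : (n,) array of responses.
    k : number of principal components to keep (assumed known here).
    """
    observed = ~np.isnan(Z)
    # Fraction of observed entries, guarded against an all-missing matrix.
    rho_hat = max(observed.mean(), 1.0 / Z.size)
    # Zero-fill missing entries and rescale to compensate for missingness.
    Z_filled = np.where(observed, Z, 0.0) / rho_hat
    # Keep only the top-k singular components of the filled matrix.
    U, s, Vt = np.linalg.svd(Z_filled, full_matrices=False)
    U_k, s_k, Vt_k = U[:, :k], s[:k], Vt[:k]
    # Least squares on the rank-k approximation; the result lies in the span of
    # the top-k right singular vectors, so it is the minimum l2-norm solution.
    return Vt_k.T @ ((U_k.T @ y) / s_k)

# Synthetic example with more covariates than samples (p > n) and low-rank X.
rng = np.random.default_rng(0)
n, p, r = 100, 200, 3
X = rng.normal(size=(n, r)) @ rng.normal(size=(r, p))   # latent clean covariates
beta = rng.normal(size=p)                               # a generating parameter
y = X @ beta + 0.1 * rng.normal(size=n)                 # noisy responses
Z = X + 0.1 * rng.normal(size=(n, p))                   # noisy covariates
Z[rng.random((n, p)) < 0.1] = np.nan                    # about 10% missing
beta_hat = pcr_fit(Z, y, k=r)
```

Because $p > n$, many parameters fit the data equally well; the estimate above is restricted to the span of the retained right singular vectors, which is why the minimum $\ell_2$-norm parameter is the natural target.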
