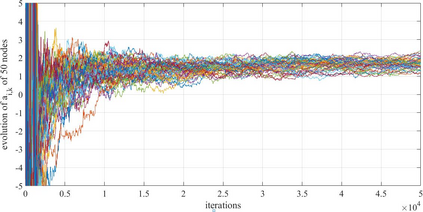

This paper addresses a distributed optimization problem in a communication network where nodes are active sporadically. Each active node applies some learning method to control its action to maximize the global utility function, which is defined as the sum of the local utility functions of active nodes. We deal with stochastic optimization problem with the setting that utility functions are disturbed by some non-additive stochastic process. We consider a more challenging situation where the learning method has to be performed only based on a scalar approximation of the utility function, rather than its closed-form expression, so that the typical gradient descent method cannot be applied. This setting is quite realistic when the network is affected by some stochastic and time-varying process, and that each node cannot have the full knowledge of the network states. We propose a distributed optimization algorithm and prove its almost surely convergence to the optimum. Convergence rate is also derived with an additional assumption that the objective function is strongly concave. Numerical results are also presented to justify our claim.

翻译:本文处理通信网络中分布式优化问题, 节点是活跃的, 零星的。 每个活动节点都应用某种学习方法来控制其行动, 以最大限度地实现全球通用功能, 即被定义为活动节点的本地通用功能之和。 我们处理随机优化问题, 设置通用功能受到某些非额外随机过程的干扰。 我们认为, 一个更具挑战性的情况是, 学习方法只能基于对通用功能的斜线近似, 而不是其封闭式表达方式来进行, 从而无法应用典型的梯度下移方法。 当网络受到某些随机和时间对调过程的影响时, 这个设置非常现实, 每个节点无法完全掌握网络状态的知识。 我们提出一个分布式优化算法, 并证明它几乎肯定与最佳的趋同。 趋同率也得出一个额外的假设, 即目标函数非常直线。 数字结果也用来证明我们的要求是合理的 。