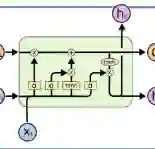

Because multimodal data carries information from more than one modality, multimodal sentiment analysis has recently become a research hotspot. However, when features are fused after extraction, redundant information is easily carried into the fusion, which affects the quality of the fused feature representation. In this paper, we therefore propose a new multimodal sentiment analysis model. It uses BERT + BiLSTM as a new text feature extractor. This extractor captures long-distance dependencies in sentences and takes the positional information of the input sequence into account, yielding richer text features. To remove redundant information and make the network attend more closely to the correlation between image and text features, a CNN and a CBAM attention module are added after the text and image features are concatenated, which improves the feature representation. Compared with the baseline model, our model improves accuracy (ACC) by 1.78% on the MVSA-Single dataset and 1.91% on the HFM dataset. It improves the F1 score by 3.09% and 2.0%, respectively. The experimental results show that our model performs well, comparable to state-of-the-art models.
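The abstract gives no implementation details for the fusion stage. The following is a minimal NumPy sketch of the standard CBAM module: channel attention from average- and max-pooled descriptors through a shared MLP, followed by spatial attention from a convolution over channel-pooled maps. It assumes that the preceding CNN has already arranged the concatenated text and image features into a C×H×W map, which the abstract does not describe. The shapes, reduction ratio `r`, 7×7 kernel, and random weights are illustrative assumptions, not the paper's settings.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(f, w1, w2):
    """Per-channel weights in (0, 1) for a (C, H, W) feature map.

    w1: (C // r, C) and w2: (C, C // r) form the shared two-layer MLP.
    """
    avg = f.mean(axis=(1, 2))  # (C,) global average pooling
    mx = f.max(axis=(1, 2))    # (C,) global max pooling

    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU hidden layer

    return sigmoid(mlp(avg) + mlp(mx))


def spatial_attention(f, kernel):
    """Per-position weights in (0, 1); kernel has shape (2, k, k), k odd."""
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    # Zero padding keeps the output at the same H x W ("same" convolution).
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))
    _, h, w = pooled.shape
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)


def cbam(f, w1, w2, kernel):
    """Channel attention first, then spatial attention, as in CBAM."""
    f = f * channel_attention(f, w1, w2)[:, None, None]
    return f * spatial_attention(f, kernel)[None, :, :]


# Hypothetical fused map: in the paper this would come from the CNN applied
# to concatenated BERT + BiLSTM text features and image features.
rng = np.random.default_rng(0)
C, H, W, r = 8, 4, 4, 2
fused = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
out = cbam(fused, w1, w2, kernel)
print(out.shape)  # (8, 4, 4)
```

Both attention maps lie strictly between 0 and 1, so the module can only rescale the fused features and never amplify them. This reflects the stated goal of suppressing redundant information while emphasizing informative channels and positions.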
