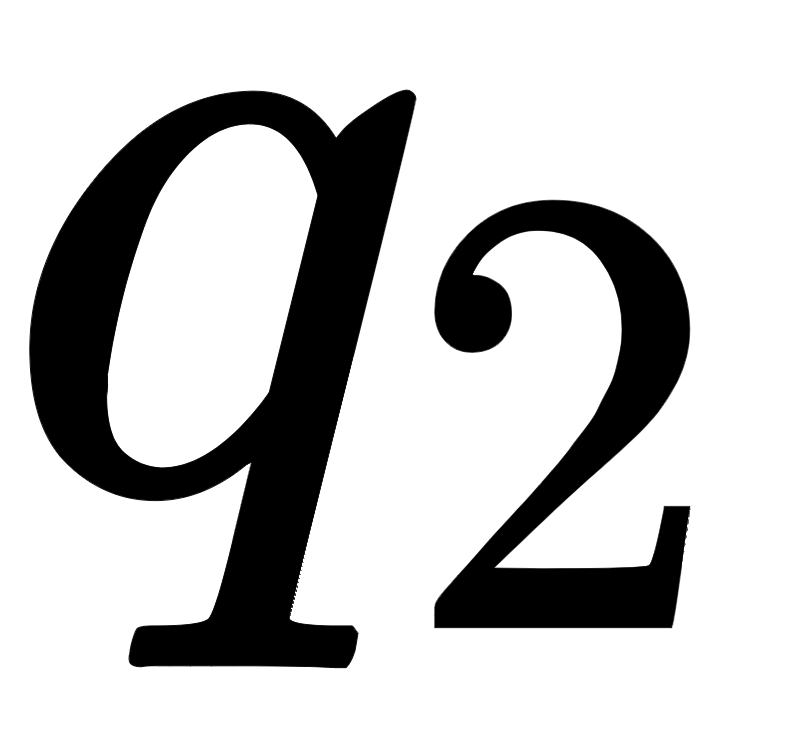

Recent improvements in the performance of state-of-the-art (SOTA) methods for Graph Representational Learning (GRL) have come at the cost of significant computational resource requirements for training, e.g., for calculating gradients via backpropagation over many data epochs. Meanwhile, Singular Value Decomposition (SVD) can find closed-form solutions to convex problems using merely a handful of epochs. In this paper, we make GRL more computationally tractable for those with modest hardware. We design a framework that computes the SVD of *implicitly* defined matrices, and apply this framework to several GRL tasks. For each task, we derive a linear approximation of a SOTA model, where we design an (expensive-to-store) matrix $\mathbf{M}$ and train the model in closed form via the SVD of $\mathbf{M}$, without calculating the entries of $\mathbf{M}$. By converging to a unique point in one step, and without calculating gradients, our models show competitive empirical test performance over various graphs such as article citation and biological interaction networks. More importantly, SVD can initialize a deeper model that is architected to be non-linear almost everywhere but behaves linearly when its parameters reside on a hyperplane, onto which SVD initializes them. The deeper model can then be fine-tuned within only a few epochs. Overall, our procedure trains hundreds of times faster than state-of-the-art methods, while competing on empirical test performance. We open-source our implementation at: https://github.com/samihaija/isvd
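To make the idea of decomposing an implicitly defined matrix concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a generic randomized SVD with subspace iteration that touches $\mathbf{M}$ only through the products $\mathbf{M}\mathbf{X}$ and $\mathbf{M}^\top\mathbf{X}$. The choice $\mathbf{M} = \hat{\mathbf{A}} + \hat{\mathbf{A}}^2$, the toy block-structured graph, and the names `randomized_svd`, `matmul`, and `rmatmul` are all hypothetical and chosen only for illustration.

```python
import numpy as np

def randomized_svd(matmul, rmatmul, n_cols, rank, oversample=10, n_iter=4, seed=0):
    """Approximate top-`rank` SVD of a matrix M given only X -> M @ X and X -> M.T @ X."""
    rng = np.random.default_rng(seed)
    k = rank + oversample
    # Sketch the column space of M with a random Gaussian test matrix.
    Q, _ = np.linalg.qr(matmul(rng.standard_normal((n_cols, k))))
    # Subspace (power) iterations sharpen the estimate; QR keeps it well conditioned.
    for _ in range(n_iter):
        Z, _ = np.linalg.qr(rmatmul(Q))
        Q, _ = np.linalg.qr(matmul(Z))
    # Project M onto the small basis: B = Q.T @ M has shape (k, n_cols).
    B = rmatmul(Q).T
    Ub, s, Vt = np.linalg.svd(B, full_matrices=False)
    return (Q @ Ub)[:, :rank], s[:rank], Vt[:rank]

# Toy graph (assumption): 4 communities of 50 nodes, dense inside, sparse across.
rng = np.random.default_rng(1)
n, blocks = 200, 4
labels = np.repeat(np.arange(blocks), n // blocks)
probs = np.where(labels[:, None] == labels[None, :], 0.5, 0.02)
upper = np.triu(rng.random((n, n)) < probs, k=1)
A = (upper | upper.T).astype(float)

# Symmetrically normalized adjacency; in practice this would be a sparse matrix.
deg = np.maximum(A.sum(axis=1), 1.0)
A_hat = A / np.sqrt(deg[:, None] * deg[None, :])

# Hypothetical implicit matrix M = A_hat + A_hat^2, defined only by its products.
matmul = lambda X: A_hat @ X + A_hat @ (A_hat @ X)
rmatmul = lambda X: A_hat.T @ X + A_hat.T @ (A_hat.T @ X)

U, s, Vt = randomized_svd(matmul, rmatmul, n_cols=n, rank=4)
```

Each product costs a few multiplications by $\hat{\mathbf{A}}$, so with a sparse $\hat{\mathbf{A}}$ the dense matrix $\mathbf{M}$ never needs to be stored. The accuracy of such a sketch depends on how quickly the singular values of $\mathbf{M}$ decay, which is why the toy graph here has a clear community structure.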
