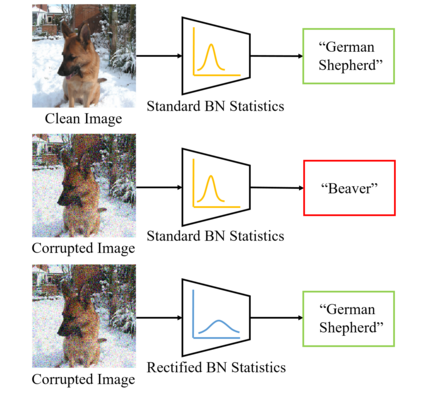

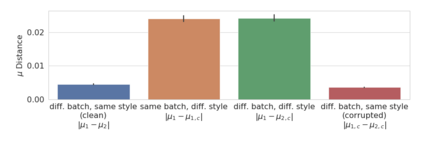

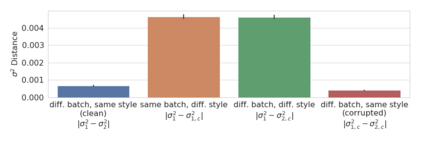

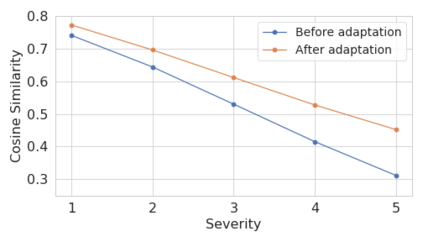

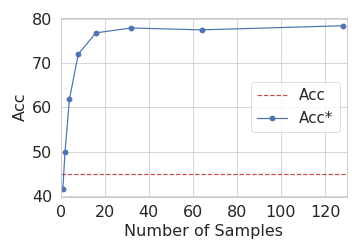

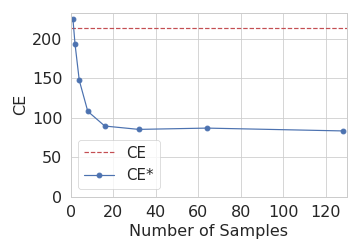

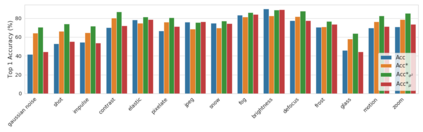

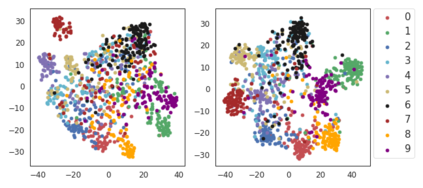

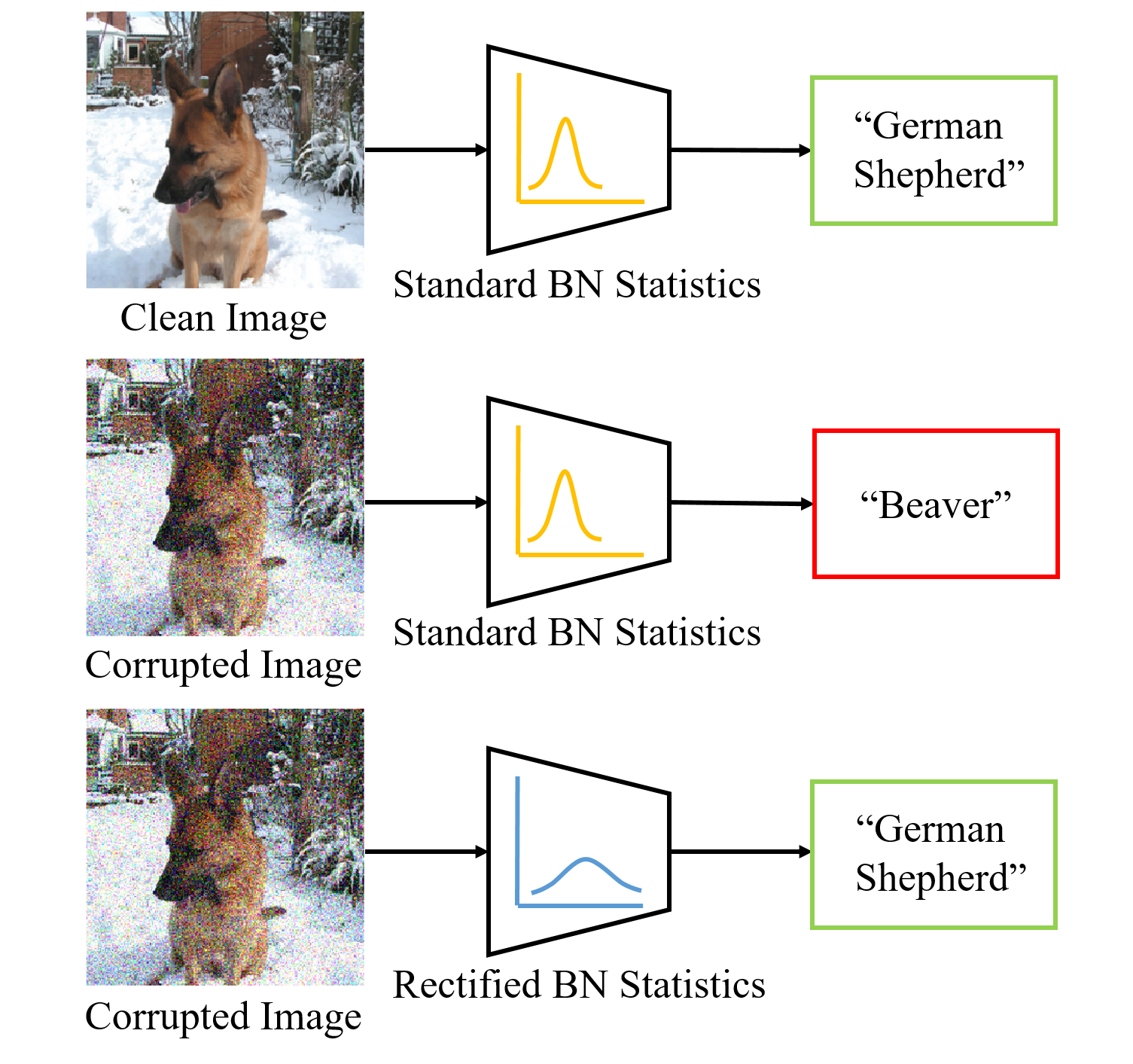

Deep neural networks (DNNs) trained on clean images are known to lose accuracy when the test images contain common corruptions. In this work, we interpret corruption robustness as a domain-shift problem and propose rectifying batch normalization (BN) statistics to improve model robustness. The motivation is that the shift from the clean domain to the corrupted domain can be viewed as a style shift that is captured by the BN statistics. We find that simply estimating and adapting the BN statistics on a few representative samples (32, for instance), without retraining the model, improves corruption robustness by a large margin on several benchmark datasets and across a wide range of model architectures. For example, on ImageNet-C, statistics adaptation improves the top-1 accuracy of ResNet50 from 39.2% to 48.7%. Moreover, we find that this technique further improves state-of-the-art robust models from 58.1% to 63.3%.
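To make the idea of adapting BN statistics concrete, the following is a minimal toy sketch, not the authors' implementation. It assumes a single BN layer without affine parameters, two feature channels, and synthetic Gaussian features standing in for activations on corrupted images; the helper names `batch_stats` and `batch_norm` and all numeric values are hypothetical. It compares normalizing with the stored clean-domain statistics against normalizing with statistics estimated on 32 corrupted samples.

```python
import random
import statistics


def batch_stats(features):
    """Per-channel mean and biased variance over a batch of feature vectors."""
    channels = list(zip(*features))  # transpose: one tuple per channel
    means = [statistics.fmean(c) for c in channels]
    variances = [statistics.pvariance(c) for c in channels]
    return means, variances


def batch_norm(features, means, variances, eps=1e-5):
    """Normalize each channel with the given statistics (no affine scale/shift)."""
    return [
        [(x - m) / (v + eps) ** 0.5 for x, m, v in zip(row, means, variances)]
        for row in features
    ]


random.seed(0)

# Assumed statistics stored from clean training data: zero mean, unit variance.
clean_means, clean_vars = [0.0, 0.0], [1.0, 1.0]

# Assumed corrupted-domain features: each channel is shifted and rescaled,
# a toy stand-in for the "style shift" described above.
corrupted = [[random.gauss(5.0, 2.0), random.gauss(-1.0, 0.5)] for _ in range(32)]

# Without adaptation: normalize with the stale clean-domain statistics.
stale = batch_norm(corrupted, clean_means, clean_vars)

# With adaptation: estimate the statistics on the 32 corrupted samples.
adapted_means, adapted_vars = batch_stats(corrupted)
adapted = batch_norm(corrupted, adapted_means, adapted_vars)

stale_means, stale_vars = batch_stats(stale)
new_means, new_vars = batch_stats(adapted)
print("stale   mean/var:", stale_means, stale_vars)
print("adapted mean/var:", new_means, new_vars)
```

With the stale statistics, the normalized features stay far from zero mean and unit variance, so later layers would see inputs unlike those seen in training. After adaptation, each channel is again approximately standardized. In a real network the same re-estimation would be applied to every BN layer, with the model weights left untouched.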
