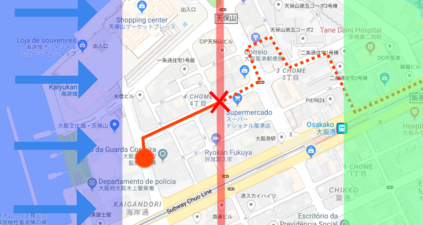

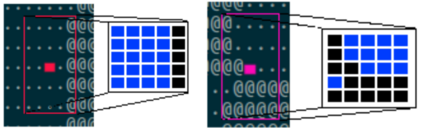

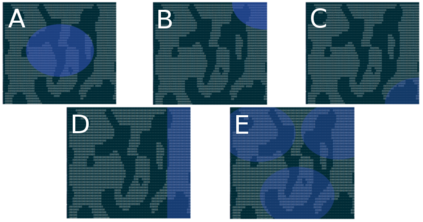

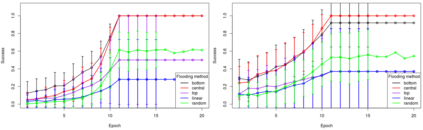

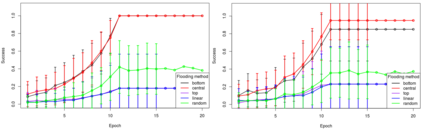

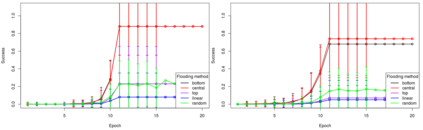

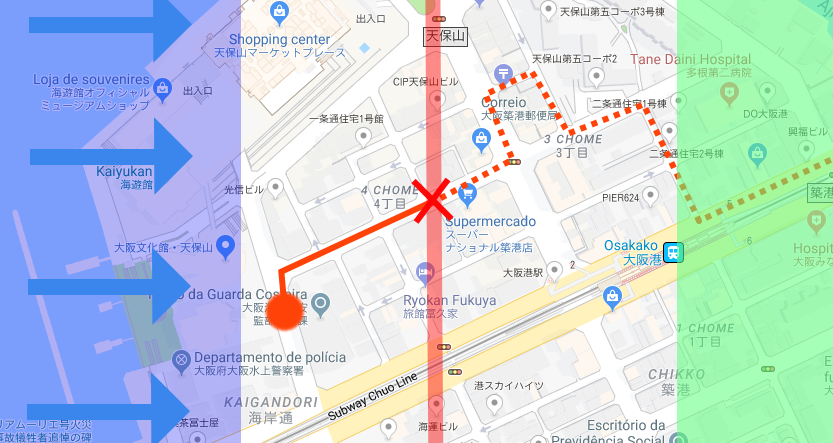

Natural disasters can cause substantial negative socio-economic impacts around the world, due to mortality, relocation, rates, and reconstruction decisions. Robotics has been successfully applied to identify and rescue victims during a natural hazard. However, little effort has been made to deploy solutions in which an autonomous robot saves a citizen's life by relocating them itself, without waiting for a human rescue team. Reinforcement learning can be used to build such a solution, but one of its best-known algorithms, Q-learning, produces biased estimates during its learning routines. In this research, a solution for citizen relocation based on Partially Observable Markov Decision Processes is adopted, and the capability of Double Q-learning to relocate citizens during a natural hazard is evaluated with a proposed hazard simulation engine based on a grid world. Performance was measured as the success rate of the citizen relocation procedure; the results show that the technique portrays a performance above 100% for easy scenarios and near 50% for hard ones.
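To make the contrast with plain Q-learning concrete, the following is a minimal sketch of the standard tabular Double Q-learning update, not the paper's implementation. The abstract gives no update rule, hyperparameters, or state encoding, so the function name `double_q_update`, the `ACTIONS` list, the values of `alpha` and `gamma`, and the use of grid coordinates as states (or observations, in the partially observable setting) are all assumptions made for illustration.

```python
import random
from collections import defaultdict

# Hypothetical action set for a grid world; the paper's actual actions are not given.
ACTIONS = ["up", "down", "left", "right"]


def double_q_update(q_a, q_b, state, action, reward, next_state, done,
                    alpha=0.1, gamma=0.95, rng=random):
    """Apply one tabular Double Q-learning update in place.

    Returns "A" or "B" to indicate which table was updated.
    alpha (learning rate) and gamma (discount) are illustrative values.
    """
    # Pick at random which table is updated on this step.
    if rng.random() < 0.5:
        update, evaluate, name = q_a, q_b, "A"
    else:
        update, evaluate, name = q_b, q_a, "B"

    if done:
        # Terminal transition: no bootstrapped future value.
        target = reward
    else:
        # Select the greedy action with the table being updated...
        best = max(ACTIONS, key=lambda a: update[(next_state, a)])
        # ...but evaluate it with the other table. Decoupling selection
        # from evaluation is what reduces the bias of the single max
        # used by plain Q-learning.
        target = reward + gamma * evaluate[(next_state, best)]

    update[(state, action)] += alpha * (target - update[(state, action)])
    return name


# Usage: two independent tables, with unseen entries defaulting to 0.0.
q_a = defaultdict(float)
q_b = defaultdict(float)
double_q_update(q_a, q_b, state=(0, 0), action="right",
                reward=-1.0, next_state=(0, 1), done=False)
```

When acting, a common choice is to pick actions greedily (or epsilon-greedily) with respect to the sum of the two tables; whether the paper does this is not stated in the abstract.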
