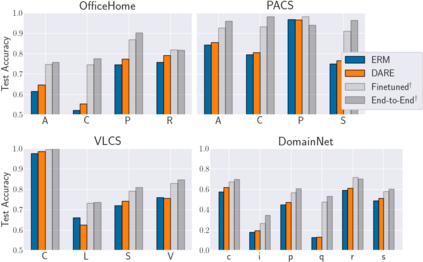

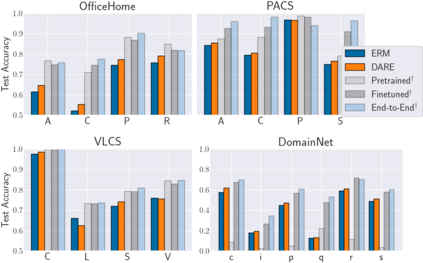

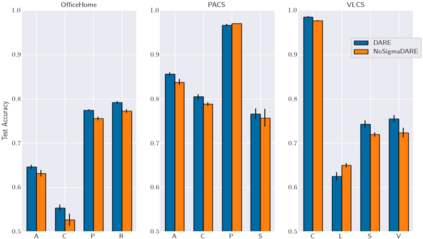

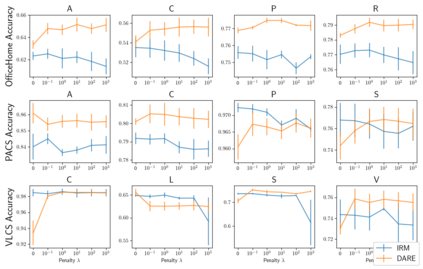

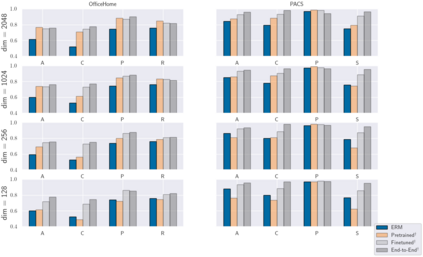

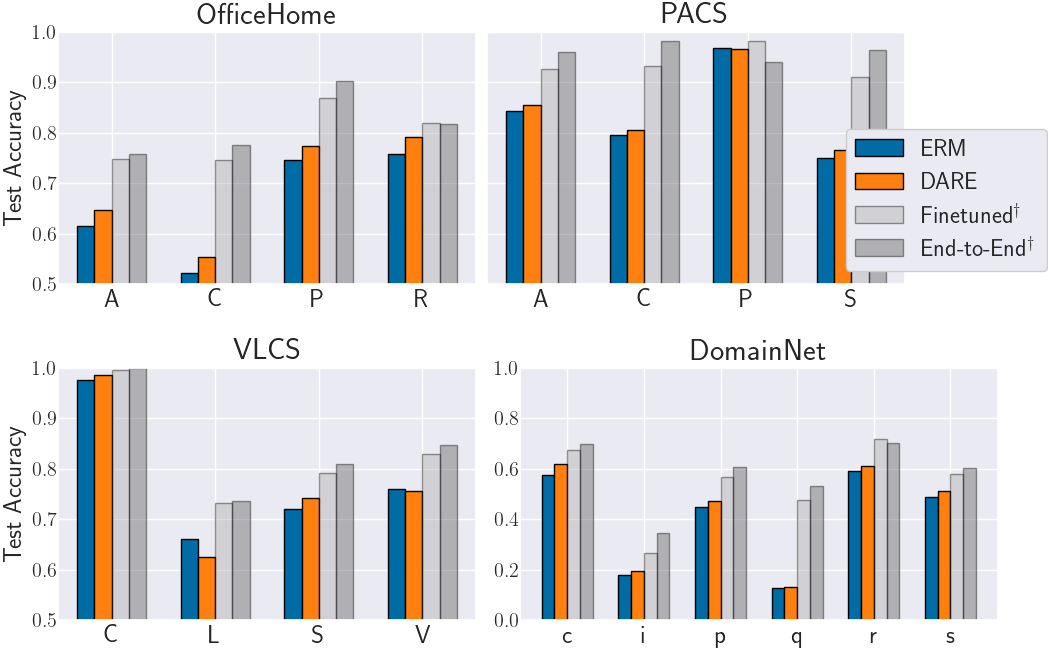

A common explanation for the failure of deep networks to generalize out-of-distribution is that they fail to recover the "correct" features. Focusing on the domain generalization setting, we challenge this notion with a simple experiment which suggests that ERM already learns sufficient features and that the current bottleneck is not feature learning, but robust regression. We therefore argue that devising simpler methods for learning predictors on existing features is a promising direction for future research. Towards this end, we introduce Domain-Adjusted Regression (DARE), a convex objective for learning a linear predictor that is provably robust under a new model of distribution shift. Rather than learning one function, DARE performs a domain-specific adjustment to unify the domains in a canonical latent space and learns to predict in this space. Under a natural model, we prove that the DARE solution is the minimax-optimal predictor for a constrained set of test distributions. Further, we provide the first finite-environment convergence guarantee to the minimax risk, improving over existing results which show a "threshold effect". Evaluated on finetuned features, we find that DARE compares favorably to prior methods, consistently achieving equal or better performance.

翻译:深海网络未能推广分布外移的常见解释是,它们未能恢复“ 正确” 功能。 聚焦于域的概括化设置, 我们用简单的实验来挑战这个概念, 这表明机构风险管理已经学会了足够的特征, 而目前的瓶颈并不是学习的特征, 而是有力的回归。 因此, 我们争辩说, 设计更简单的方法来学习现有特征的预测器是未来研究的一个有希望的方向。 为此, 我们引入了 Domain- Addjarded Regression (DARE), 这是学习线性预测器的一个连接目标, 在新的分布转移模式下, 该预测器非常可靠。 DARE 不是学习一个函数, 而是执行一个特定领域的调整, 以统一一个可支配的潜在空间中的域, 并学会在这个空间里预测。 在自然模型下, 我们证明 DARE 解决方案是一套受限制的测试分布的微缩成- 最佳预测器。 此外, 我们为微负负风险提供了第一个有限- 环境趋同保证, 改进现有结果, 显示“ 高度稳定效果 ” 。