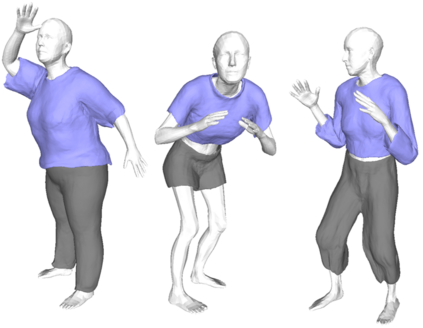

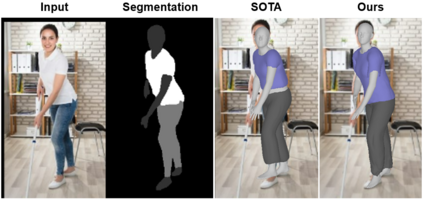

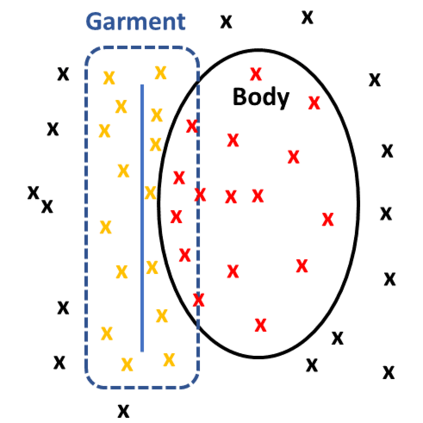

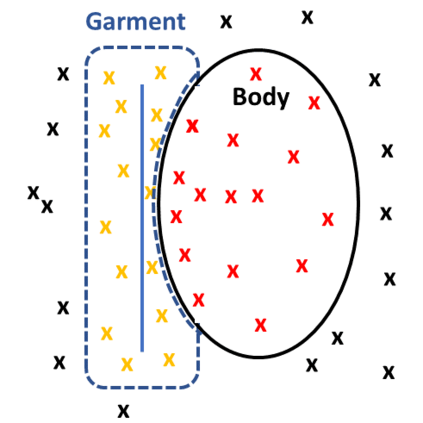

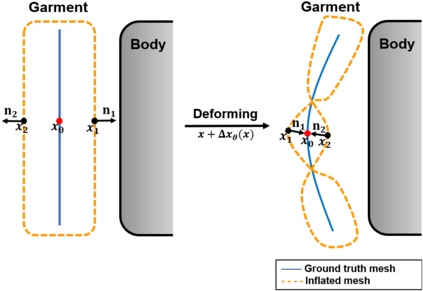

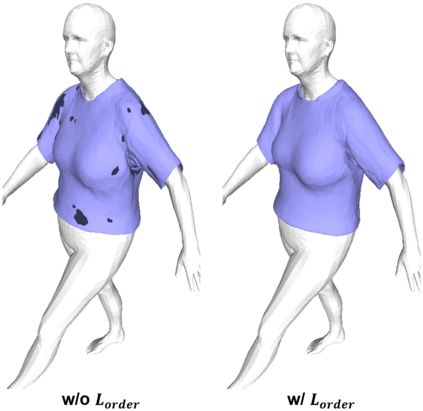

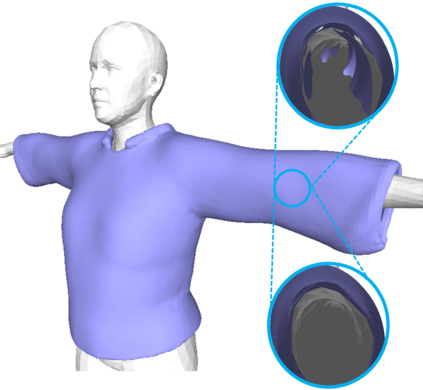

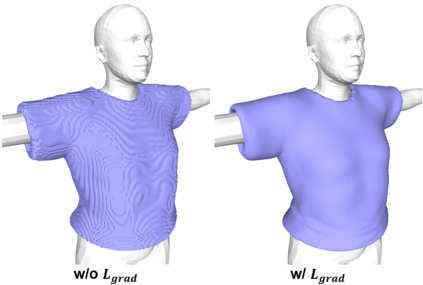

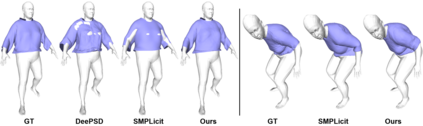

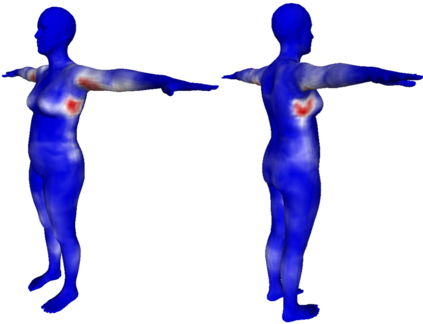

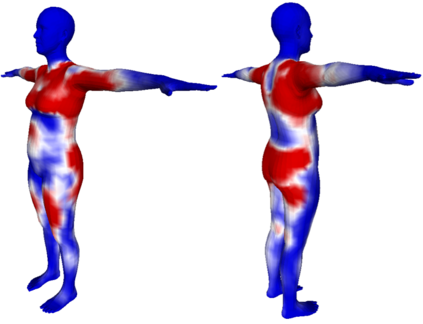

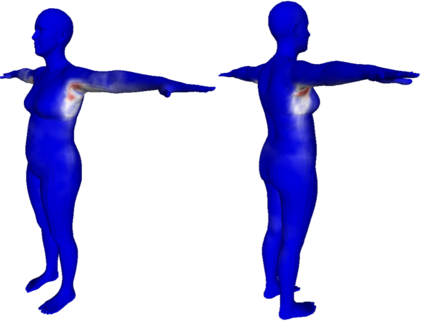

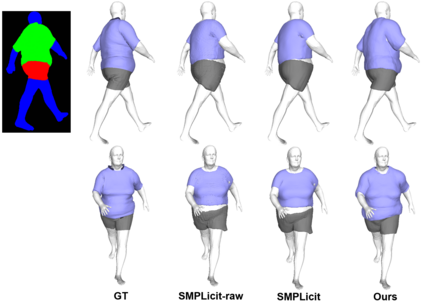

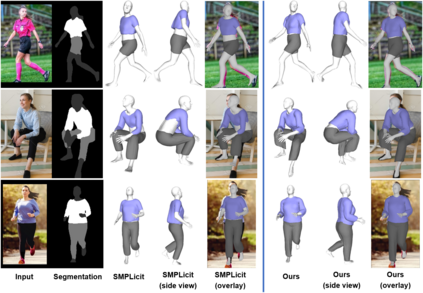

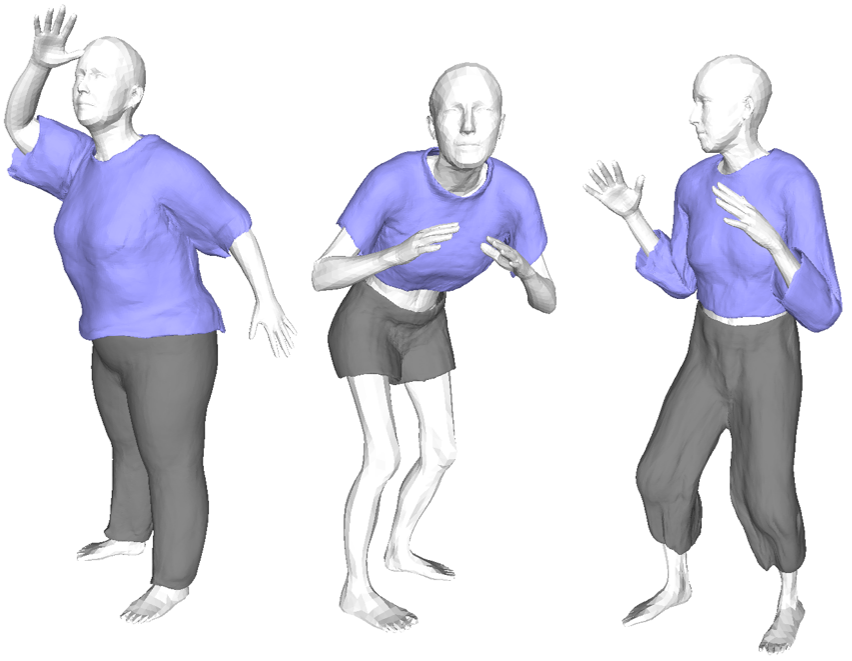

Existing data-driven methods for draping garments over human bodies, despite being effective, cannot handle garments of arbitrary topology and are typically not end-to-end differentiable. To address these limitations, we propose an end-to-end differentiable pipeline that represents garments using implicit surfaces and learns a skinning field conditioned on the shape and pose parameters of an articulated body model. To limit body-garment interpenetrations and artifacts, we propose an interpenetration-aware strategy for pre-processing the training data and a novel training loss that penalizes self-intersections while draping garments. We demonstrate that our method yields more accurate garment reconstruction and deformation than state-of-the-art methods. Furthermore, we show that, thanks to its end-to-end differentiability, our method makes it possible to recover body and garment parameters jointly from image observations, something that previous work could not do.
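The skinning idea in the abstract can be illustrated with standard linear blend skinning (LBS), where each garment point is moved by a weighted blend of per-joint rigid transforms. The NumPy sketch below is a minimal illustration under stated assumptions: SMPL-style inputs (K joint transforms as 4x4 matrices, a shape vector `beta`, a pose vector `theta`), and `SkinningField` as a hypothetical stand-in for the learned field, a tiny MLP with random weights that outputs softmax-normalized blend weights. It is not the paper's trained network or architecture.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

class SkinningField:
    """Hypothetical stand-in for a learned skinning field: a 2-layer MLP
    mapping (point, shape, pose) to K non-negative blend weights summing to 1.
    Weights are random here; a real model would be trained."""

    def __init__(self, n_joints, shape_dim, pose_dim, hidden=64, seed=0):
        rng = np.random.default_rng(seed)
        in_dim = 3 + shape_dim + pose_dim
        self.W1 = rng.normal(0.0, 0.1, (in_dim, hidden))
        self.b1 = np.zeros(hidden)
        self.W2 = rng.normal(0.0, 0.1, (hidden, n_joints))
        self.b2 = np.zeros(n_joints)

    def __call__(self, x, beta, theta):
        n = x.shape[0]
        cond = np.concatenate([beta, theta])               # shared conditioning vector
        inp = np.concatenate([x, np.tile(cond, (n, 1))], axis=1)
        h = np.maximum(inp @ self.W1 + self.b1, 0.0)       # ReLU hidden layer
        return softmax(h @ self.W2 + self.b2, axis=1)      # (N, K) blend weights

def linear_blend_skinning(x, weights, joint_transforms):
    """x: (N, 3) points, weights: (N, K), joint_transforms: (K, 4, 4)."""
    x_h = np.concatenate([x, np.ones((x.shape[0], 1))], axis=1)   # homogeneous (N, 4)
    T = np.einsum("nk,kij->nij", weights, joint_transforms)      # per-point blended transform
    return np.einsum("nij,nj->ni", T, x_h)[:, :3]

# Usage with SMPL-like sizes (24 joints, 10 shape and 72 pose values), chosen for illustration.
K = 24
field = SkinningField(n_joints=K, shape_dim=10, pose_dim=72)
garment_pts = np.random.default_rng(1).normal(size=(100, 3))
w = field(garment_pts, np.zeros(10), np.zeros(72))
transforms = np.tile(np.eye(4), (K, 1, 1))
transforms[:, :3, 3] = [0.0, 0.5, 0.0]   # translate every joint by the same offset
posed = linear_blend_skinning(garment_pts, w, transforms)
```

Because the softmax weights sum to one, applying the same translation to every joint moves every point by exactly that offset, which is a quick sanity check for any LBS implementation.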

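A common way to discourage garment points from entering the body is a hinge penalty on the body's signed distance field: points at least a margin `eps` outside the body cost nothing, while points closer than that (or inside, where the distance is negative) are penalized linearly. The sketch below is a generic illustration, not necessarily the loss used in the paper. It assumes a sphere (`sphere_sdf`) as a hypothetical stand-in for the body and an illustrative margin `eps=2e-3`. Because the penalty is differentiable almost everywhere, plain gradient steps push penetrating points back outside, a small-scale analogue of optimizing a differentiable pipeline against such a term.

```python
import numpy as np

def sphere_sdf(points, center, radius):
    """Signed distance to a sphere (negative inside, positive outside).
    Hypothetical stand-in for the body's signed distance field."""
    return np.linalg.norm(points - center, axis=1) - radius

def interpenetration_penalty(points, center, radius, eps=2e-3):
    """Mean hinge penalty; zero for points at least `eps` outside the body."""
    d = sphere_sdf(points, center, radius)
    return np.maximum(0.0, eps - d).mean()

def interpenetration_grad(points, center, radius, eps=2e-3):
    """Gradient of the mean hinge penalty w.r.t. the points.
    For a sphere the SDF gradient is the unit outward normal
    (assumes no point sits exactly at the center)."""
    d = sphere_sdf(points, center, radius)
    offsets = points - center
    normals = offsets / np.linalg.norm(offsets, axis=1, keepdims=True)
    active = (d < eps).astype(float)[:, None]  # only violating points get a gradient
    return -active * normals / len(points)

def resolve_penetrations(points, center, radius, lr=0.1, steps=100):
    """Plain gradient descent on the penalty; returns the updated points."""
    pts = points.astype(float).copy()
    for _ in range(steps):
        pts -= lr * interpenetration_grad(pts, center, radius)
    return pts
```

A real garment would use the posed body's SDF (or a learned approximation of it) instead of an analytic sphere, and the penalty would be one term among the training losses rather than optimized on its own.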