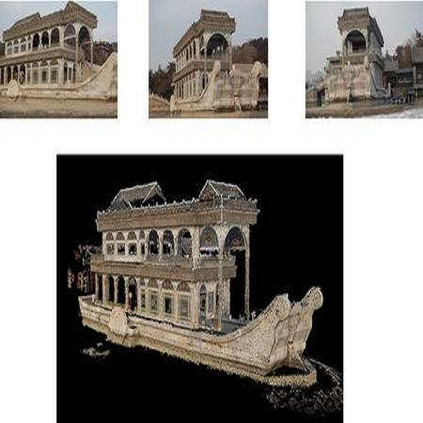

Inferring the 3D structure of a generic object from a 2D image is a long-standing objective of computer vision. Conventional approaches either learn entirely from CAD-generated synthetic data, and therefore struggle at inference on real images, or produce 2.5D depth images via intrinsic decomposition, which fall short of full 3D reconstruction. One fundamental challenge lies in how to leverage the many real 2D images that come without any 3D ground truth. To address this issue, we take an alternative, semi-supervised learning approach. That is, given a 2D image of a generic object, we decompose it into latent representations of category, shape, albedo, lighting, and camera projection matrix; decode the representations into a segmented 3D shape and albedo, respectively; and fuse these components to render an image that closely approximates the input image. Using a category-adaptive 3D joint occupancy field (JOF), we show that complete shape and albedo modeling enables us to leverage real 2D images in both modeling and model fitting. The effectiveness of our approach is demonstrated through superior single-image 3D reconstruction, on both synthetic and real images, and through shape segmentation.
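The decode-and-render idea in the abstract can be illustrated with a small NumPy sketch. It is not the paper's network: the layer sizes, the untrained random weights, the max-over-parts union of part occupancies, the finite-difference normals, the single directional Lambertian light and the 3x4 pinhole projection are all assumptions chosen for illustration, the category code is simply assumed to be folded into the shape latent, and the helper names (`joint_occupancy_field`, `surface_normals`, `lambertian_shade`, `project`) are hypothetical.

```python
import numpy as np

# Hypothetical sizes; the paper's actual architecture is not reproduced here.
LATENT_DIM = 16   # size of the shape / albedo latent codes (category assumed folded in)
NUM_PARTS = 4     # number of semantic parts the toy field segments into
HIDDEN = 32       # hidden width of each toy MLP

rng = np.random.default_rng(0)


def init_mlp(in_dim, out_dim):
    """Random, untrained two-layer MLP weights (illustration only)."""
    return {
        "W1": rng.normal(0.0, 0.5, (in_dim, HIDDEN)),
        "b1": np.zeros(HIDDEN),
        "W2": rng.normal(0.0, 0.5, (HIDDEN, out_dim)),
        "b2": np.zeros(out_dim),
    }


def mlp(params, x):
    hidden = np.maximum(x @ params["W1"] + params["b1"], 0.0)  # ReLU
    return hidden @ params["W2"] + params["b2"]


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


shape_net = init_mlp(3 + LATENT_DIM, NUM_PARTS)  # per-part occupancy logits
albedo_net = init_mlp(3 + LATENT_DIM, 3)         # RGB albedo logits


def joint_occupancy_field(points, z_shape, z_albedo):
    """Query the toy field at float points of shape (N, 3).

    Returns overall occupancy (N,), part label (N,) and albedo (N, 3).
    """
    n = points.shape[0]
    shape_in = np.hstack([points, np.tile(z_shape, (n, 1))])
    albedo_in = np.hstack([points, np.tile(z_albedo, (n, 1))])
    part_occ = sigmoid(mlp(shape_net, shape_in))  # (N, K) per-part occupancy
    occupancy = part_occ.max(axis=1)              # union of parts via max
    labels = part_occ.argmax(axis=1)              # segmentation: most-occupied part
    albedo = sigmoid(mlp(albedo_net, albedo_in))  # RGB squashed into [0, 1]
    return occupancy, labels, albedo


def surface_normals(points, z_shape, z_albedo, eps=1e-3):
    """Outward normals as the normalised negative occupancy gradient (central differences)."""
    grad = np.zeros_like(points)
    for d in range(3):
        step = np.zeros(3)
        step[d] = eps
        occ_plus, _, _ = joint_occupancy_field(points + step, z_shape, z_albedo)
        occ_minus, _, _ = joint_occupancy_field(points - step, z_shape, z_albedo)
        grad[:, d] = (occ_plus - occ_minus) / (2.0 * eps)
    normals = -grad  # occupancy drops when leaving the object
    return normals / (np.linalg.norm(normals, axis=1, keepdims=True) + 1e-8)


def lambertian_shade(albedo, normals, light_dir, ambient=0.3):
    """Ambient plus diffuse shading under one directional light."""
    light = light_dir / np.linalg.norm(light_dir)
    diffuse = np.clip(normals @ light, 0.0, None)[:, None]
    return albedo * (ambient + (1.0 - ambient) * diffuse)


def project(points, camera):
    """Pinhole projection of (N, 3) points with a 3x4 camera matrix to (N, 2) pixels."""
    homogeneous = np.hstack([points, np.ones((points.shape[0], 1))])
    uvw = homogeneous @ camera.T
    return uvw[:, :2] / uvw[:, 2:3]
```

In a fitting loop, the latent codes, light direction and camera matrix would be the quantities predicted by an encoder or optimised so that the shaded, projected surface matches the input image. Locating the actual surface points (for example by ray marching through the occupancy field) is left out of this sketch.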

翻译:从 2D 图像中推断出通用对象的 3D 结构是计算机视觉的长期目标。 常规方法要么从 CAD 生成的合成数据中完全学习,这些数据很难从真实图像中推断出来,要么通过内在分解生成 2.5D 深度图像,这与完全 3D 重建相比是有限的。 一个基本的挑战是如何利用许多真实的 2D 图像,而没有任何 3D 地面真相。 为了解决这个问题,我们采取了半监督学习的替代方法。 也就是说,对于一个通用对象的 2D 图像,我们将其分解成类别、 形状和反照、 照明和相机投影矩阵的潜在表达形式, 解码显示显示显示显示为3D 的分解形状和反照, 并把这些组件结合到与输入图像相近。 使用一个 3D 3D 类联合占用场, 我们用完整的形状和反照模型模型让我们在建模和模型安装中利用真实的 2D 。 我们的方法的有效性通过从单一图像( 合成的或真实的形状和形状) 3D 的高级重建得到证明。