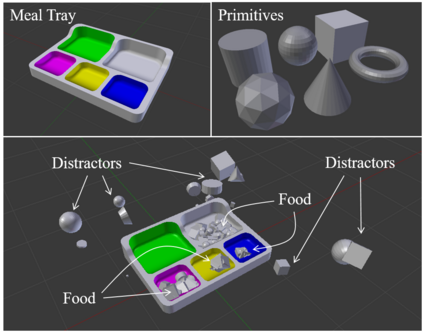

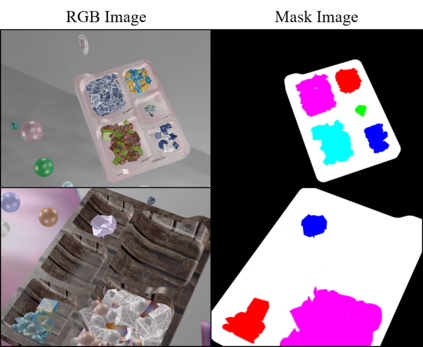

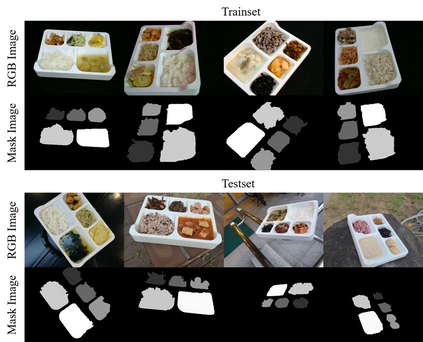

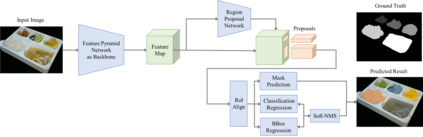

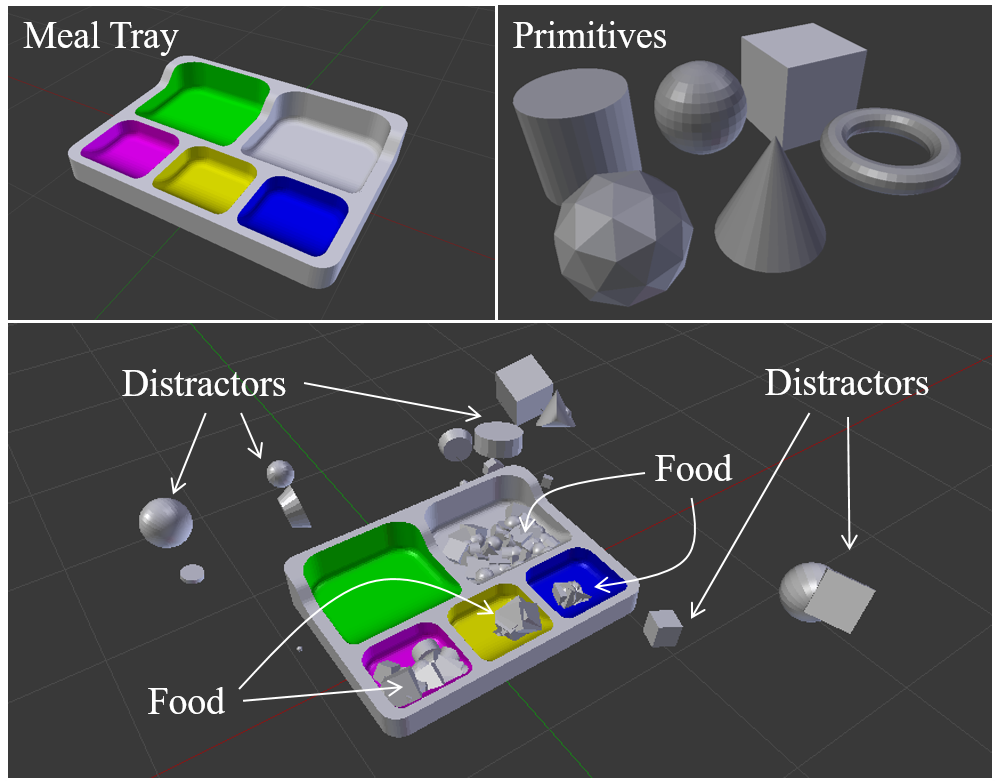

When deep neural networks are used to segment foods in images for diet management, collecting and labeling training data is essential but labor-intensive. To ease the burden of data collection and annotation, this paper proposes a food segmentation method that transfers to the real world through synthetic data. To perform food segmentation on healthcare robot systems, such as a meal-assistance robot arm, we generate synthetic data with the open-source 3D graphics software Blender by placing multiple objects on a meal plate, and we train Mask R-CNN for instance segmentation. We also build a data collection system and verify our segmentation model on real-world food data. On our real-world dataset, the model trained only on synthetic data segments food instances it was never trained on with 52.2% mask AP@all, and after fine-tuning it improves by +6.4%p over the model trained from scratch. In addition, we confirm the feasibility and the performance improvement on a public dataset for fair analysis. Our code and pre-trained weights are available online at: https://github.com/gist-ailab/Food-Instance-Segmentation
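To make the reported metric concrete, the following is a minimal sketch of how a mask-level IoU feeds into an AP score averaged over several IoU thresholds. It assumes that "AP@all" follows the COCO convention of thresholds 0.50 to 0.95 in steps of 0.05; the abstract does not spell this out. The helper names `mask_iou` and `matched_fraction` are hypothetical and are not taken from the authors' repository.

```python
# Illustrative sketch only: helper names are hypothetical, not from the authors' code.

def mask_iou(pred, gt):
    """Intersection over union of two equally sized binary masks (nested lists of 0/1)."""
    inter = 0
    union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += 1 if (p and g) else 0
            union += 1 if (p or g) else 0
    return inter / union if union else 0.0


# Assumption: "AP@all" uses the COCO-style IoU thresholds 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [t / 100 for t in range(50, 100, 5)]


def matched_fraction(iou):
    """Fraction of thresholds at which a prediction with this IoU counts as a match."""
    hits = sum(1 for t in IOU_THRESHOLDS if iou >= t)
    return hits / len(IOU_THRESHOLDS)


# Toy 3x4 masks: the prediction covers 4 pixels, the ground truth covers 6.
pred = [[1, 1, 0, 0],
        [1, 1, 0, 0],
        [0, 0, 0, 0]]
gt = [[1, 1, 1, 0],
      [1, 1, 1, 0],
      [0, 0, 0, 0]]

iou = mask_iou(pred, gt)          # intersection 4, union 6
fraction = matched_fraction(iou)  # matches at 0.50, 0.55, 0.60 and 0.65 only
```

A full AP computation additionally ranks predictions by confidence and integrates precision over recall at each threshold; the sketch shows only the per-mask matching step, which is why a prediction with moderate overlap contributes at the loose thresholds but not at the strict ones.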
