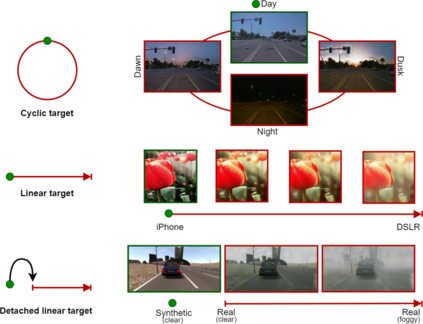

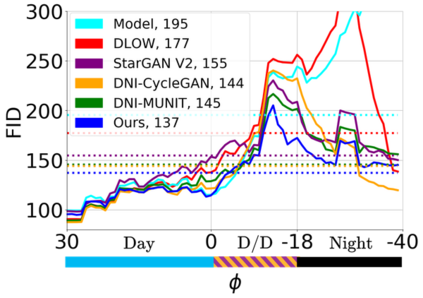

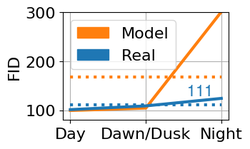

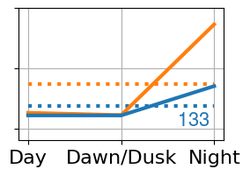

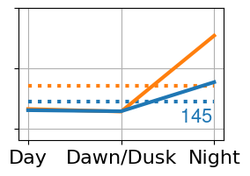

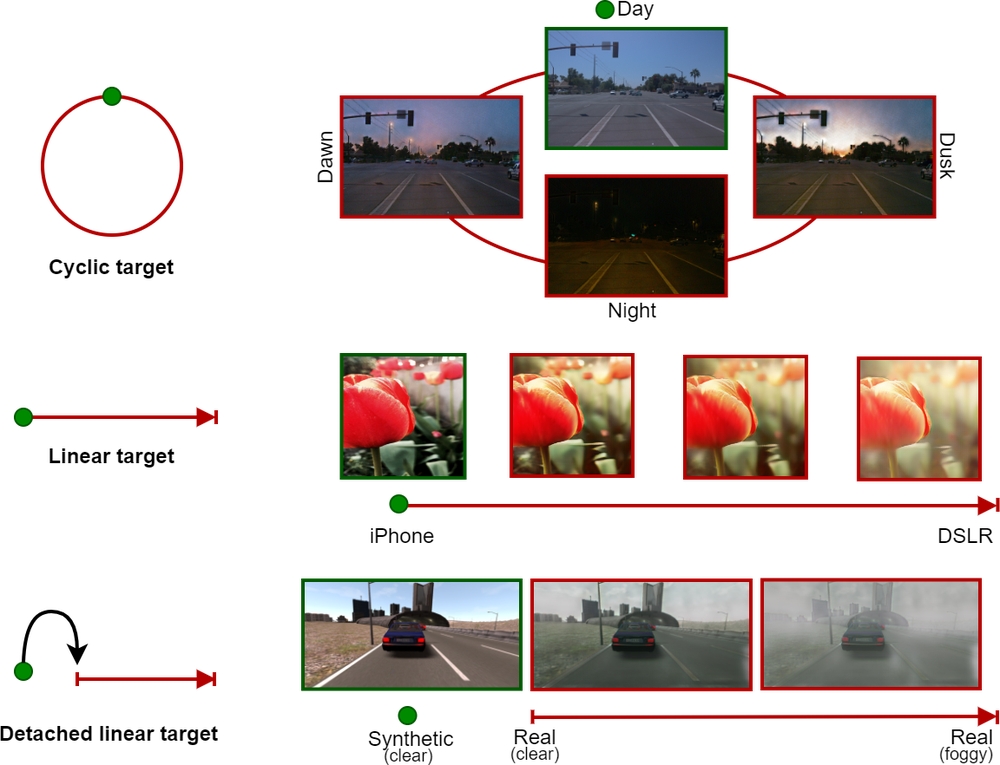

CoMoGAN is a continuous GAN that relies on the unsupervised reorganization of the target data on a functional manifold. To that end, we introduce a new Functional Instance Normalization layer and a residual mechanism, which together disentangle image content from position on the target manifold. We rely on naive physics-inspired models to guide the training while allowing private model/translation features. CoMoGAN can be used with any GAN backbone and allows new types of image translation, such as cyclic image translation (for example, timelapse generation) or detached linear translation. On all datasets tested, it outperforms prior work. Our code is available at http://github.com/cv-rits/CoMoGAN .
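To make the idea of a normalization layer conditioned on a manifold position more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the function names, the `(cos φ, sin φ)` encoding of the position `phi`, and the weights `w_gamma` and `w_beta` are all assumptions chosen for illustration. It only shows how the scale and shift applied after instance normalization could be made functions of a continuous, cyclic position, so that the normalized content stays separate from the position.

```python
import math

def instance_norm(channel, eps=1e-5):
    """Normalize one channel (a flat list of floats) to zero mean, unit variance."""
    n = len(channel)
    mean = sum(channel) / n
    var = sum((v - mean) ** 2 for v in channel) / n
    return [(v - mean) / math.sqrt(var + eps) for v in channel]

def functional_params(phi, w_gamma, w_beta):
    """Hypothetical mapping from manifold position phi to (gamma, beta).

    Assumption: phi is encoded as (cos phi, sin phi), which makes the
    parameters periodic in phi and therefore suited to a cyclic manifold.
    """
    enc = (math.cos(phi), math.sin(phi))
    gamma = 1.0 + w_gamma[0] * enc[0] + w_gamma[1] * enc[1]
    beta = w_beta[0] * enc[0] + w_beta[1] * enc[1]
    return gamma, beta

def functional_instance_norm(channel, phi, w_gamma, w_beta):
    """Instance-normalize the content, then apply a phi-dependent scale and shift."""
    gamma, beta = functional_params(phi, w_gamma, w_beta)
    return [gamma * v + beta for v in instance_norm(channel)]

# Usage: the same content rendered at two positions on the (assumed) cyclic manifold.
features = [0.0, 1.0, 2.0, 3.0]
at_start = functional_instance_norm(features, 0.5, (0.3, -0.2), (0.1, 0.4))
at_later = functional_instance_norm(features, 2.0, (0.3, -0.2), (0.1, 0.4))
```

In this sketch the weights are fixed numbers. In a trained network they would be learned, and the layer would operate on per-channel feature maps rather than a single flat list.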

