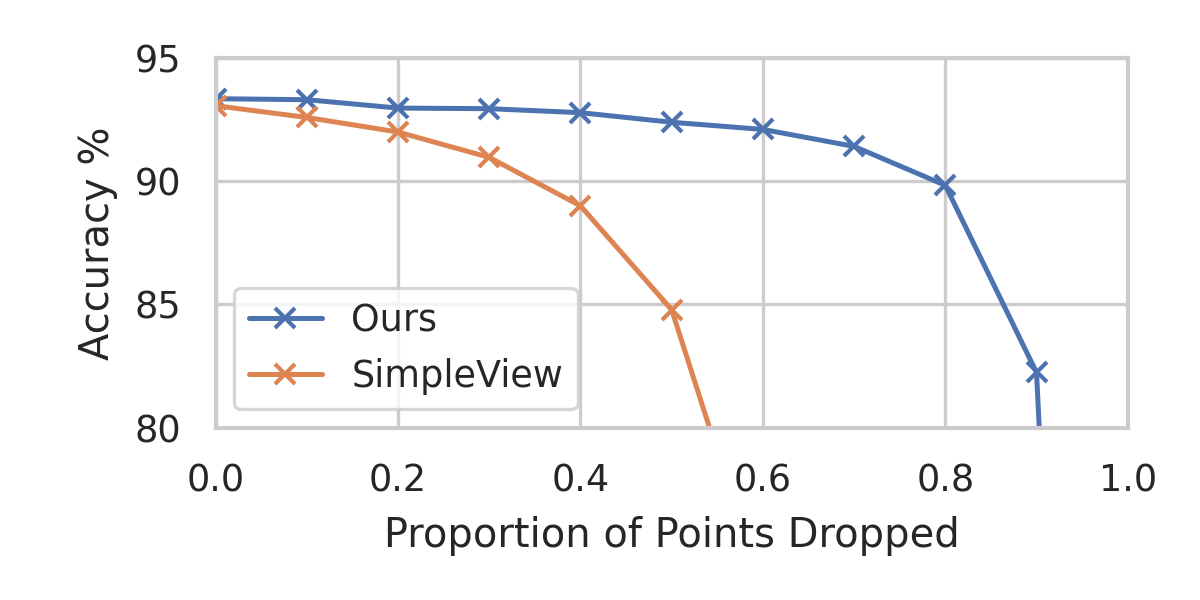

In recent years, graph neural network (GNN)-based approaches have become a popular strategy for processing point cloud data, regularly achieving state-of-the-art performance on a variety of tasks. To date, the research community has focused primarily on improving model expressiveness, giving less thought to how models can be designed to run efficiently on resource-constrained mobile devices such as smartphones or mixed reality headsets. In this work, we take a step towards improving the efficiency of these models by observing that they are heavily limited by the representational power of their first, feature-extracting layer. We find that these models can be radically simplified with minimal degradation in performance, provided the feature extraction layer is retained; further, we find that redesigning the feature extractor can improve overall performance on ModelNet40 and S3DIS. Our approach reduces memory consumption by 20$\times$ and latency by up to 9.9$\times$ for graph layers in models such as DGCNN; overall, we achieve speed-ups of up to 4.5$\times$ and peak memory reductions of 72.5%.
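The central idea (keep a graph-based feature extractor up front, simplify what follows) can be illustrated with a minimal NumPy sketch. It assumes a DGCNN-style EdgeConv first layer (a k-nearest-neighbour graph, edge features $[x_i, x_j - x_i]$, max aggregation) and, as a hypothetical simplification, replaces every later graph layer with a shared pointwise layer so that no further kNN graphs are built. The function names (`knn`, `edge_conv`, `pointwise`, `simplified_backbone`), the single-linear-layer "MLPs", the ReLU activations and $k = 20$ are illustrative assumptions, not the authors' architecture.

```python
import numpy as np


def knn(x: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k nearest neighbours of each point, excluding itself.

    x: (N, C) float array of point features. Returns an (N, k) int array.
    """
    sq = np.sum(x ** 2, axis=1)
    # Pairwise squared Euclidean distances, shape (N, N).
    dist = sq[:, None] - 2.0 * (x @ x.T) + sq[None, :]
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbour
    return np.argsort(dist, axis=1)[:, :k]


def edge_conv(x: np.ndarray, w: np.ndarray, k: int) -> np.ndarray:
    """EdgeConv-style layer: h_i = max_j ReLU(W^T [x_i, x_j - x_i]).

    w: (2C, C_out) weights; a single linear map stands in for the edge MLP.
    """
    idx = knn(x, k)                                   # (N, k)
    neighbours = x[idx]                               # (N, k, C)
    centre = np.repeat(x[:, None, :], k, axis=1)      # (N, k, C)
    edges = np.concatenate([centre, neighbours - centre], axis=-1)  # (N, k, 2C)
    return np.maximum(edges @ w, 0.0).max(axis=1)     # (N, C_out)


def pointwise(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Graph-free layer: the same linear map + ReLU applied to every point."""
    return np.maximum(x @ w, 0.0)


def simplified_backbone(points: np.ndarray, weights: list, k: int = 20) -> np.ndarray:
    """Hypothetical simplified model: one EdgeConv feature extractor,
    then pointwise layers only, then global max pooling over points."""
    h = edge_conv(points, weights[0], k)
    for w in weights[1:]:
        h = pointwise(h, w)
    return h.max(axis=0)  # per-cloud descriptor


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pts = rng.standard_normal((128, 3))  # a random toy "point cloud"
    weights = [
        rng.standard_normal((6, 64)),     # EdgeConv: 2 * 3 input channels
        rng.standard_normal((64, 128)),
        rng.standard_normal((128, 256)),
    ]
    print(simplified_backbone(pts, weights).shape)  # (256,)
```

In DGCNN each EdgeConv layer rebuilds its kNN graph in feature space, so every graph layer materialises an $N \times N$ distance matrix and an $(N, k, 2C)$ edge tensor. In this sketch only `edge_conv` ever creates those tensors; the remaining layers cost one matrix product per point, which is the kind of saving the simplification targets.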