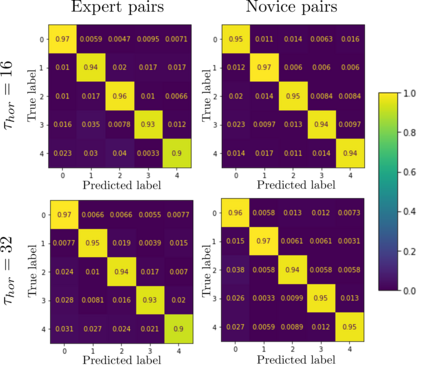

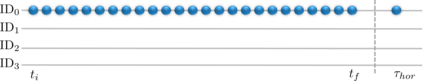

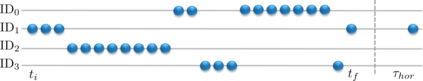

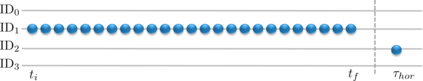

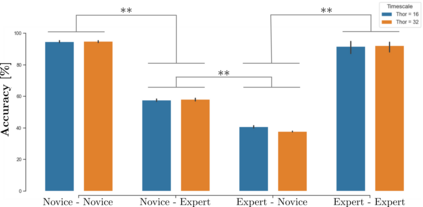

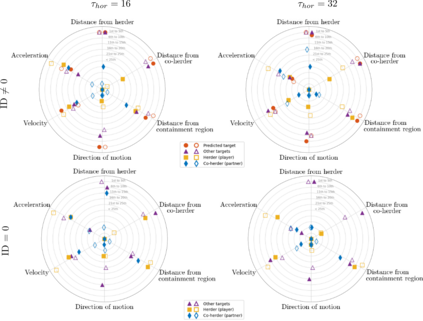

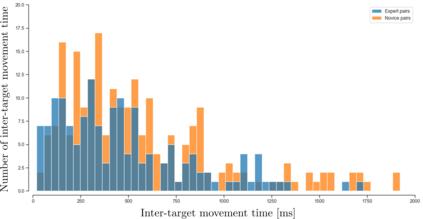

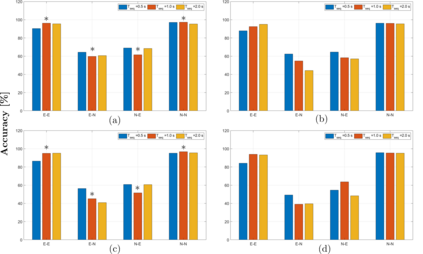

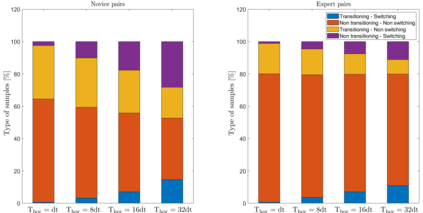

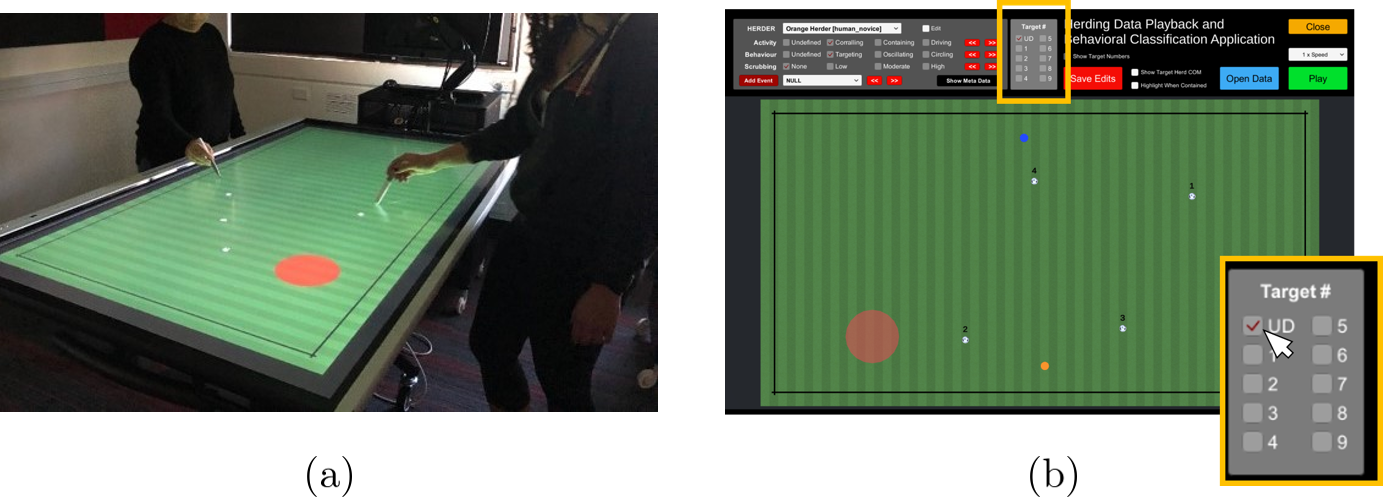

This study uses supervised machine learning (SML) and explainable artificial intelligence (AI) to model, predict, and understand human decision-making during skillful joint action. Long short-term memory (LSTM) networks were trained to predict the target selection decisions of expert and novice actors completing a dyadic herding task. The results revealed that the trained models were expertise-specific: they not only accurately predicted the target selection decisions of expert and novice herders but did so at timescales that preceded an actor's conscious intent. To understand what differentiated the target selection decisions of expert and novice actors, we then employed the explainable-AI technique SHapley Additive exPlanations (SHAP) to identify the importance of informational features (variables) for model predictions. This analysis revealed that experts were more influenced by information about the state of their co-herders than novices were. The utility of employing SML and explainable-AI techniques for investigating human decision-making is discussed.
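
The abstract does not describe how the SHAP analysis was computed. As a rough illustration of what a Shapley-based feature importance measures, the following sketch computes exact Shapley values for a small scoring function that stands in for the trained LSTM. The feature names, the toy scoring function `toy_target_score`, and the all-zeros baseline used to represent an "absent" feature are hypothetical assumptions for illustration only. With many features or a recurrent network, exact enumeration over all feature subsets is infeasible, and SHAP implementations rely on approximations instead.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    f: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values of f at x, using one baseline vector for 'absent' features."""
    n = len(x)

    def value(subset: frozenset) -> float:
        # Features in `subset` keep their observed value; all others take the baseline value.
        z = [x[j] if j in subset else baseline[j] for j in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of size k.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for combo in combinations(others, k):
                subset = frozenset(combo)
                # Marginal contribution of feature i when added to coalition `subset`.
                total += weight * (value(subset | {i}) - value(subset))
        phi.append(total)
    return phi


# Hypothetical stand-in for a trained model's score for selecting one target.
# Assumed features: [own_distance_to_target, coherder_distance_to_target, target_distance_to_goal]
def toy_target_score(z: Sequence[float]) -> float:
    own_dist, coherder_dist, target_to_goal = z
    return 0.5 * coherder_dist - 0.3 * own_dist + 0.2 * own_dist * target_to_goal


x = [1.0, 2.0, 3.0]          # hypothetical observed feature values
baseline = [0.0, 0.0, 0.0]   # assumed reference values for "absent" features
phi = shapley_values(toy_target_score, x, baseline)
print(phi)
```

The attributions satisfy the Shapley efficiency property: they sum to `f(x) - f(baseline)`. In this toy model, the credit for the interaction term is split equally between the two features that share it, which is one reason Shapley-based importances can differ from simply reading off model coefficients.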
