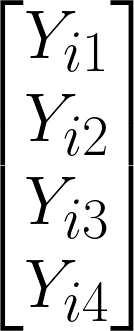

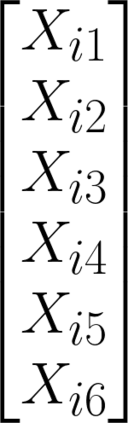

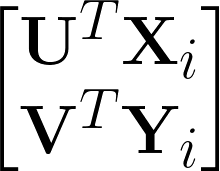

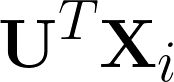

Effectively understanding a disease such as cancer requires fusing multiple sources of information, which multimodal data capture across different physical scales. In this work, we propose a novel feature embedding module, derived from canonical correlation analysis (CCA), that accounts for both intra-modality and inter-modality correlations. Experiments on simulated and real data demonstrate that the proposed module learns well-correlated multi-dimensional embeddings. These embeddings perform competitively on one-year survival classification of TCGA-BRCA breast cancer patients, yielding average F1 scores of up to 58.69% under 5-fold cross-validation.
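To make the CCA idea behind the module concrete, the following is a minimal sketch of classical linear CCA between two modalities in NumPy. It is not the authors' embedding module. The function names `inv_sqrt` and `linear_cca`, the ridge term `reg`, and the simulated two-modality data are assumptions chosen for illustration. The within-modality covariances `sxx` and `syy` play the role of intra-modality correlations, and the cross-covariance `sxy` captures inter-modality correlation. Whitening each view and taking the SVD of the whitened cross-covariance yields projections whose paired outputs are maximally correlated.

```python
import numpy as np


def inv_sqrt(mat: np.ndarray) -> np.ndarray:
    """Inverse square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(mat)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T


def linear_cca(x: np.ndarray, y: np.ndarray, k: int, reg: float = 1e-4):
    """Classical linear CCA between two views (rows = samples).

    Hypothetical helper for illustration: returns projection matrices
    wx, wy and the top-k canonical correlations.
    """
    # Center each modality
    x = x - x.mean(axis=0)
    y = y - y.mean(axis=0)
    n = x.shape[0]
    # Intra-modality covariances (assumed ridge term keeps them invertible)
    sxx = x.T @ x / (n - 1) + reg * np.eye(x.shape[1])
    syy = y.T @ y / (n - 1) + reg * np.eye(y.shape[1])
    # Inter-modality cross-covariance
    sxy = x.T @ y / (n - 1)
    # Whiten both views, then take the SVD of the whitened cross-covariance
    sxx_is, syy_is = inv_sqrt(sxx), inv_sqrt(syy)
    u, s, vt = np.linalg.svd(sxx_is @ sxy @ syy_is)
    wx = sxx_is @ u[:, :k]
    wy = syy_is @ vt.T[:, :k]
    return wx, wy, s[:k]


# Simulated two-modality data driven by a shared 2-D latent signal
rng = np.random.default_rng(0)
n = 500
z = rng.normal(size=(n, 2))
x = z @ rng.normal(size=(2, 10)) + 0.5 * rng.normal(size=(n, 10))
y = z @ rng.normal(size=(2, 8)) + 0.5 * rng.normal(size=(n, 8))

wx, wy, corrs = linear_cca(x, y, k=2)
# Correlated 2-D embeddings of each modality
emb_x = (x - x.mean(axis=0)) @ wx
emb_y = (y - y.mean(axis=0)) @ wy
print(np.round(corrs, 3))
```

Because both views share a latent signal, the leading canonical correlations should be high, and the empirical correlation of each embedding pair should match the returned value. A learned module such as the one proposed here would replace these fixed linear projections, but the quantities being balanced (intra-modality covariance versus inter-modality cross-covariance) are the same.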

翻译:有效理解癌症等疾病需要多式数据在物理尺度上收集多种信息来源。 在这项工作中,我们提议了一个新颖的嵌入模块,该模块来自康美相关分析,以说明内部和现代相互关系。模拟和真实数据的实验表明,我们提议的模块如何能学习与良好气候有关的多维嵌入。这些嵌入在TCGA-BRCA乳腺癌患者的一年生存分类上具有竞争力,导致平均F1得分高达58.69%,低于5倍的交叉校验。